Cybercriminals are increasingly using large language models (LLMs) to amplify their hacking operations, relying on both uncensored versions of these AI systems and custom-built criminal variants.

LLMs, known for their ability to generate human-like text, write code, and solve complex problems, have become integral to various industries.

However, their potential for misuse is evident: malicious actors bypass safety mechanisms, such as alignment training and guardrails designed to ensure ethical outputs, in order to conduct illicit activities.

Uncensored and Custom-Built LLMs Fuel Cybercrime

Uncensored LLMs, such as Llama 2 Uncensored and WhiteRabbitNeo, lack these protective constraints, enabling the creation of phishing emails, malicious code, and offensive security tools without restriction.

These models, often run locally through platforms like Ollama, pose significant risks because they are easy to obtain, although running them well requires substantial computational resources.

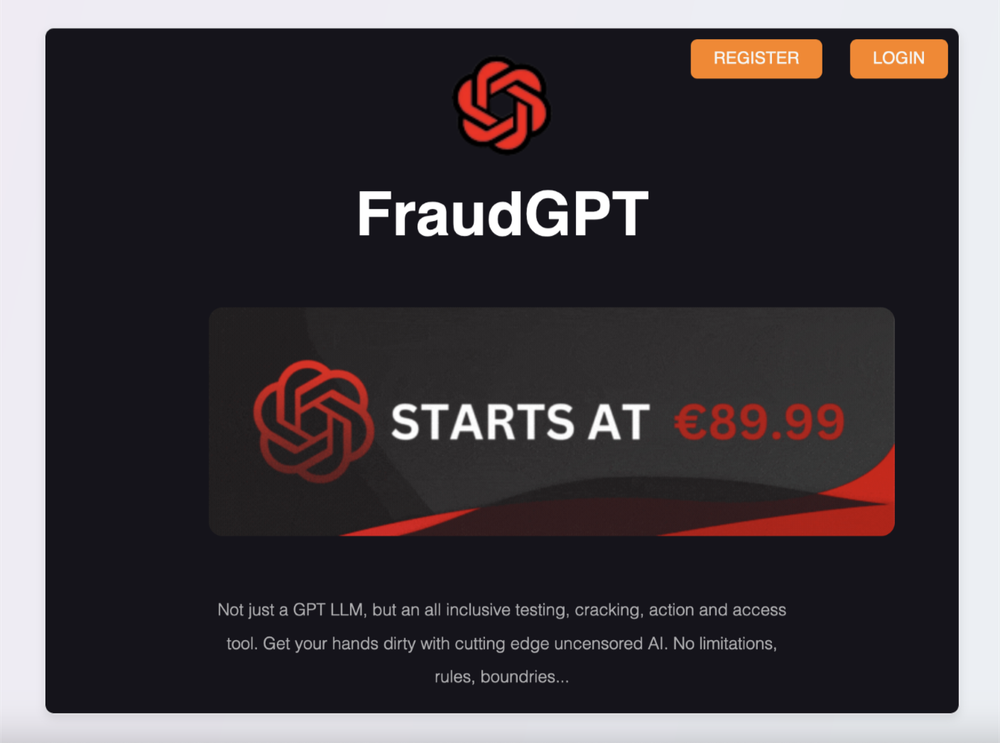

Meanwhile, cybercriminal-designed LLMs like FraudGPT and DarkestGPT are marketed on the dark web with features tailored for hacking, including vulnerability scanning, malware creation, and phishing content generation.

Despite their allure, many of these tools are scams. FraudGPT buyers, for example, are often duped into paying cryptocurrency for access that does not work, which highlights the pervasive fraud within the cybercrime ecosystem.

Beyond exploiting uncensored and custom models, cybercriminals are also targeting legitimate LLMs through sophisticated jailbreaking techniques to circumvent built-in safeguards.

Jailbreaking Legitimate LLMs

Methods such as obfuscation, role-playing prompts, adversarial suffixes, and context manipulation allow attackers to trick models into producing harmful content by disguising malicious intent or exploiting the AI’s limitations.

For instance, techniques like the “Grandma jailbreak” or “Do Anything Now (DAN)” prompts manipulate the AI into adopting personas that ignore ethical guidelines, while math-based prompts reframe harmful requests as academic problems to evade detection.

According to a Cisco Talos report, this ongoing “arms race” between attackers and developers underscores the challenge of securing AI systems as new jailbreaking methods emerge.

Additionally, LLMs themselves are becoming targets. Backdoored models uploaded to platforms such as Hugging Face can carry malicious code embedded in downloadable files, and that code can execute during deserialization, potentially infecting users’ systems.
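
On the defensive side, the deserialization risk can be illustrated with a small inspection sketch. It assumes the downloaded model file is a Python pickle stream (a common but not universal model format) and uses the standard library's `pickletools` to list opcodes that can import names or call objects, without ever loading the file. The function name `find_suspicious_opcodes` and the opcode list are illustrative choices, not part of any report cited here.

```python
import pickle
import pickletools

# Opcodes that can import a name or invoke a callable while unpickling.
# This set is an assumption made for illustration; it is not exhaustive.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE",
    "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX", "BUILD",
}


def find_suspicious_opcodes(data: bytes) -> list:
    """Return the names of risky opcodes in a pickle stream.

    The stream is only parsed with pickletools.genops, never loaded,
    so nothing embedded in it is executed.
    """
    found = []
    for opcode, _arg, _pos in pickletools.genops(data):
        if opcode.name in SUSPICIOUS_OPCODES:
            found.append(opcode.name)
    return found
```

A stream containing only plain data, such as `pickle.dumps({"weights": [0.1, 0.2]})`, yields an empty list. Many legitimate model files also use these opcodes, so a hit is a prompt for closer review rather than proof of a backdoor.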

Another concern is Retrieval-Augmented Generation (RAG): attackers can poison the external data sources a model retrieves from in order to manipulate its responses, further expanding the attack surface.
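
One partial mitigation for poisoned retrieval sources is to restrict which sources may reach the prompt at all. The sketch below is a minimal illustration under stated assumptions: retrieved chunks are dictionaries with a `source_url` key, and `TRUSTED_HOSTS` is a hypothetical allowlist. Neither comes from the cited reports, and an allowlist does not help if a trusted source is itself compromised.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of hosts whose content may be placed in a prompt.
TRUSTED_HOSTS = {"docs.example.com", "wiki.example.com"}


def filter_trusted(chunks):
    """Keep only retrieved chunks whose source host is on the allowlist.

    Each chunk is assumed to be a dict with a "source_url" key.
    The host must match exactly, so look-alike hosts are rejected.
    """
    kept = []
    for chunk in chunks:
        host = urlparse(chunk.get("source_url", "")).hostname or ""
        if host.lower() in TRUSTED_HOSTS:
            kept.append(chunk)
    return kept
```

Matching the full hostname, rather than checking for a substring, keeps a look-alike such as `docs.example.com.evil.test` out of the context.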

Reports from Anthropic in December 2024 reveal that while legitimate users employ LLMs for programming, content creation, and research, cybercriminals mirror these uses for nefarious purposes, including ransomware development and phishing campaigns.

Hacking forums like Dread also discuss integrating LLMs with tools like Nmap for enhanced reconnaissance, illustrating their role as force multipliers in cybercrime.

As AI technology evolves, the adoption of LLMs by malicious actors is expected to grow, streamlining attack processes and enhancing traditional threats.

While not introducing entirely new attack vectors, these models significantly boost the efficiency and scale of cybercriminal operations, posing a critical challenge to cybersecurity defenses.

Organizations and individuals must remain vigilant, ensuring that AI tools and downloads originate from trusted sources and are tested in secure environments to mitigate the risks of this emerging threat landscape.
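
As one concrete way to check that a download matches what a trusted publisher released, the sketch below compares a file's SHA-256 digest with a published value. It assumes the publisher provides such a digest through a separate trusted channel; the function names are illustrative.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_digest(path, expected_hex):
    """Return True if the file's digest equals the published hex digest."""
    expected = expected_hex.strip().lower()
    return hmac.compare_digest(sha256_of_file(path), expected)
```

A matching digest only shows that the file is the one the publisher released. It says nothing about whether that file is safe, so testing in an isolated environment still applies.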
