Cloudflare has revealed that a 62-minute global outage of its popular 1.1.1.1 DNS resolver service on July 14, 2025, was caused by an internal configuration error rather than an external attack, though the incident coincided with an unrelated BGP hijack that complicated the situation.

The outage, which lasted from 21:52 UTC to 22:54 UTC, affected millions of users worldwide who rely on the 1.1.1.1 public DNS resolver service.

During the disruption, most internet services became unavailable to affected users because their devices could not resolve domain names.

The incident represents one of the most significant DNS outages in recent years, given 1.1.1.1’s status as one of the world’s most popular DNS resolver services since its launch in 2018.

Root Cause and Timeline

The outage originated from a configuration error introduced on June 6, 2025, during preparations for a future Data Localization Suite (DLS) service.

The misconfiguration inadvertently linked the 1.1.1.1 resolver’s IP addresses to a non-production service, but it remained dormant for over a month without causing any immediate impact.

The crisis was triggered on July 14 when engineers made a routine configuration change to attach a test location to the same DLS service.

This change caused a global refresh of network configuration, inadvertently withdrawing the 1.1.1.1 resolver prefixes from production Cloudflare data centers worldwide. The withdrawal affected multiple IP ranges, including 1.1.1.0/24, 1.0.0.0/24, and several IPv6 ranges.
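To make the scope of the withdrawal concrete, here is a minimal Python sketch that checks whether an address falls inside the two IPv4 prefixes named above. The function name `is_affected` is hypothetical. The address 1.0.0.1 is the service's well-known secondary address and 192.0.2.1 is a reserved documentation address used only as a counterexample; neither is mentioned in Cloudflare's account as summarized here. The IPv6 ranges are left out because this article does not list them.

```python
import ipaddress

# IPv4 prefixes named in the article as withdrawn during the incident.
# The affected IPv6 ranges are not listed in the article, so they are omitted.
WITHDRAWN_PREFIXES = [
    ipaddress.ip_network("1.1.1.0/24"),
    ipaddress.ip_network("1.0.0.0/24"),
]


def is_affected(address: str) -> bool:
    """Return True if the address sits inside any withdrawn prefix."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in WITHDRAWN_PREFIXES)


if __name__ == "__main__":
    # 1.0.0.1 and 192.0.2.1 are illustrative inputs, not taken from the article.
    for addr in ("1.1.1.1", "1.0.0.1", "192.0.2.1"):
        print(addr, is_affected(addr))
```

Any client configured to use an address inside these ranges would have had no route to the resolver while the prefixes were withdrawn.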

BGP Hijack Complication

As Cloudflare’s systems withdrew the routes, an unexpected development occurred: Tata Communications India (AS4755) began advertising the 1.1.1.0/24 prefix, creating what appeared to be a classic BGP hijack scenario.

However, Cloudflare emphasized that this hijack was not the cause of the original outage but rather an unrelated issue that became visible once Cloudflare withdrew its own route announcements.
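The reason the Tata announcement looked like a hijack can be illustrated with a simple origin check: a prefix is flagged when it is announced by an autonomous system other than the expected one. The sketch below is illustrative only and is not how Cloudflare or any monitoring service detected the event. The `Announcement` type and `is_unexpected_origin` function are hypothetical, and AS13335 as Cloudflare's origin ASN is an assumption not stated in this article. Production systems typically rely on RPKI route origin validation rather than a hard-coded table.

```python
from dataclasses import dataclass

# Assumption: AS13335 is Cloudflare's origin ASN (not stated in the article).
EXPECTED_ORIGINS = {"1.1.1.0/24": 13335}


@dataclass(frozen=True)
class Announcement:
    """A simplified BGP announcement: a prefix and the AS that originates it."""
    prefix: str
    origin_asn: int


def is_unexpected_origin(announcement: Announcement) -> bool:
    """Flag announcements whose origin AS differs from the expected one.

    Prefixes with no expected origin on record are not flagged.
    """
    expected = EXPECTED_ORIGINS.get(announcement.prefix)
    return expected is not None and announcement.origin_asn != expected


if __name__ == "__main__":
    # AS4755 is the Tata Communications India ASN named in the article.
    observed = Announcement(prefix="1.1.1.0/24", origin_asn=4755)
    print(is_unexpected_origin(observed))
```

A check like this only reports a mismatch. It says nothing about why the mismatch became visible, which in this case was Cloudflare's own withdrawal.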

Cloudflare detected the impact at 22:01 UTC and declared an incident. The company initiated a revert to the previous configuration at 22:20 UTC, which immediately restored approximately 77% of traffic levels.

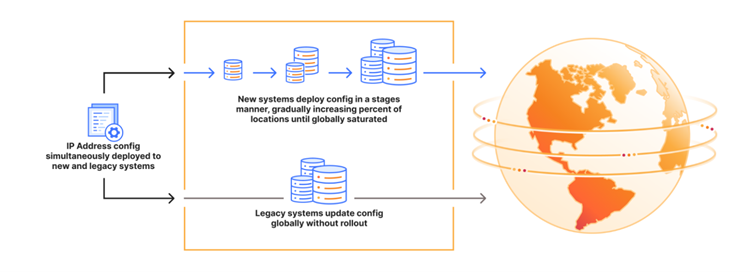

However, complete restoration required additional time as about 23% of edge servers had automatically removed required IP bindings during the topology change.

To accelerate recovery, Cloudflare manually triggered and validated changes in testing locations before executing them globally, bypassing its normal progressive rollout process. Normal traffic levels were fully restored by 22:54 UTC.
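The timestamps reported above can be turned into elapsed times with a few lines of Python. This is a small worked calculation, not part of Cloudflare's report; the event labels and the `minutes_since_start` helper are hypothetical names chosen for illustration.

```python
from datetime import datetime, timezone


def utc(hhmm: str) -> datetime:
    """Build a UTC timestamp on the incident date (July 14, 2025)."""
    parsed = datetime.strptime(f"2025-07-14 {hhmm}", "%Y-%m-%d %H:%M")
    return parsed.replace(tzinfo=timezone.utc)


# Times taken from the article; the labels are illustrative.
EVENTS = {
    "outage_start": utc("21:52"),
    "incident_declared": utc("22:01"),
    "revert_initiated": utc("22:20"),
    "fully_restored": utc("22:54"),
}


def minutes_since_start(event: str) -> int:
    """Whole minutes between the start of the outage and the given event."""
    delta = EVENTS[event] - EVENTS["outage_start"]
    return int(delta.total_seconds() // 60)


if __name__ == "__main__":
    for name in EVENTS:
        print(f"{name}: +{minutes_since_start(name)} min")
```

By this calculation, the incident was declared 9 minutes into the outage, the revert began at 28 minutes, and full restoration came at 62 minutes, which matches the reported duration.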

The incident highlights the complexity of managing global DNS infrastructure and the cascading effects that configuration errors can have on internet accessibility worldwide.
