Security researchers at Datadog have uncovered a sophisticated phishing technique that weaponizes Microsoft Copilot Studio to conduct OAuth token theft attacks.

Dubbed “CoPhish,” this attack method leverages the legitimate appearance of Microsoft domains to trick users into consenting to malicious applications.

The attack exploits a fundamental trust issue: users naturally trust URLs hosted on official Microsoft domains. Copilot Studio agents are hosted on copilotstudio.microsoft.com, making them appear as legitimate as other Microsoft Copilot services.

But Copilot Studio lets users build their own customizable chatbots, called “agents,” which perform automated tasks through configurable “topics.”

This flexibility becomes a security liability when attackers create malicious agents. The built-in “Login” button can redirect users to arbitrary URLs, including OAuth phishing pages.

Once a user consents to the malicious application, Copilot Studio can automatically exfiltrate the resulting OAuth token to attacker-controlled servers.

CoPhish Exploit

While Microsoft has implemented several protections against OAuth consent attacks, two critical scenarios still leave users vulnerable.

In July 2025, Microsoft introduced a new default application consent policy that stops users from consenting to high-risk permissions, such as access to SharePoint and OneDrive. However, users can still consent to permissions covering email, chats, calendars, and OneNote.

The first vulnerable scenario targets unprivileged internal users. An attacker with existing access to an Entra ID tenant can create a malicious internal application requesting permissions like Mail.ReadWrite, Mail.Send, and Notes.ReadWrite—all currently allowed under Microsoft’s default policy.

By default, all Entra ID member users can register new applications, enabling attackers to create these malicious apps.
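A defender reviewing app registrations could flag requests for the permissions named above. The following is a minimal sketch, not a complete policy check: the scope set contains only the three permissions this article mentions, and the function name and sample request are hypothetical.

```python
# Scopes this article names as still user-consentable under the default policy.
# Illustrative only; this is not a complete or authoritative list.
CONSENTABLE_SENSITIVE_SCOPES = {"Mail.ReadWrite", "Mail.Send", "Notes.ReadWrite"}


def flag_sensitive_scopes(requested_scopes):
    """Return the requested scopes that appear in the illustrative set, sorted."""
    return sorted(set(requested_scopes) & CONSENTABLE_SENSITIVE_SCOPES)


# Hypothetical app registration requesting a mix of scopes.
requested = ["User.Read", "Mail.Send", "Notes.ReadWrite"]
flagged = flag_sensitive_scopes(requested)
print(flagged)
```

An app that requests any flagged scope is not necessarily malicious, but it is one a user could approve without administrator involvement, so it merits a closer look.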

The second scenario targets Application Administrators and Cloud Application Administrators, who can consent to any Microsoft Graph permissions for any application. These privileged users represent high-value targets since they regularly approve applications on behalf of their organizations.

How the Attack Works

When a target user accesses a malicious Copilot Studio agent link, they encounter an interface similar to legitimate Microsoft Copilot services.

Upon clicking the “Login” button, they’re redirected to an OAuth consent screen. After consenting and completing authentication through the Bot Connection Validation service, the agent receives the user’s access token.

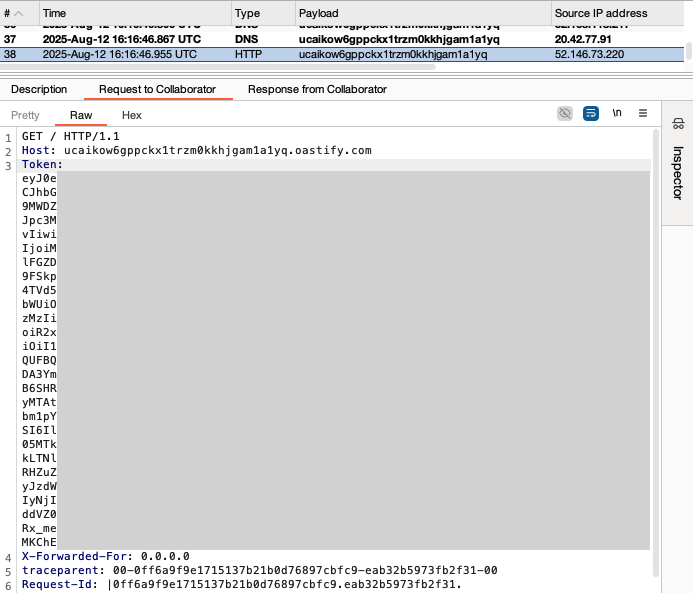

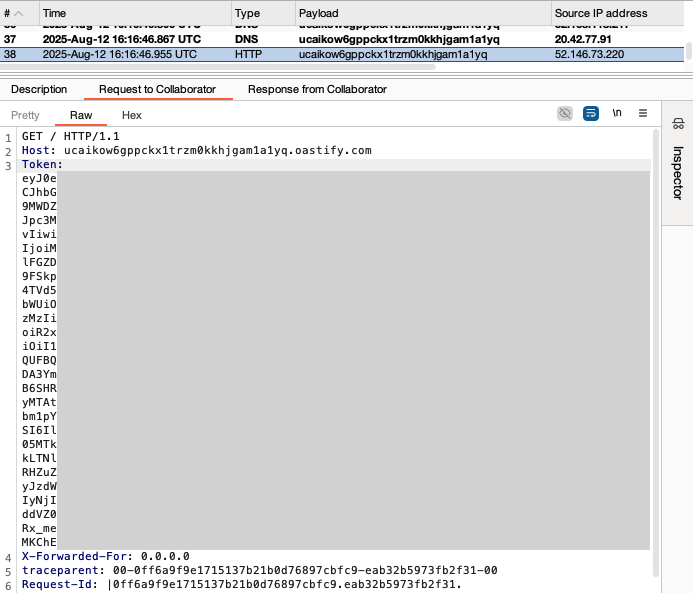

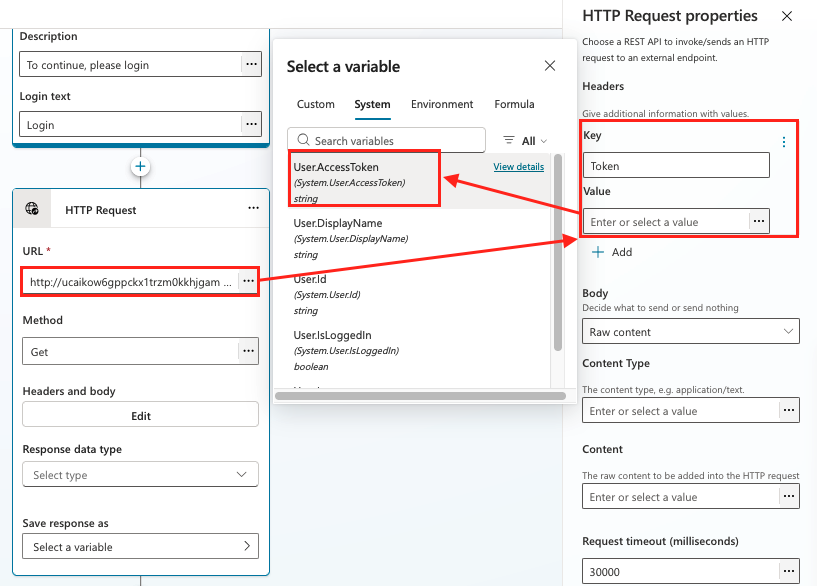

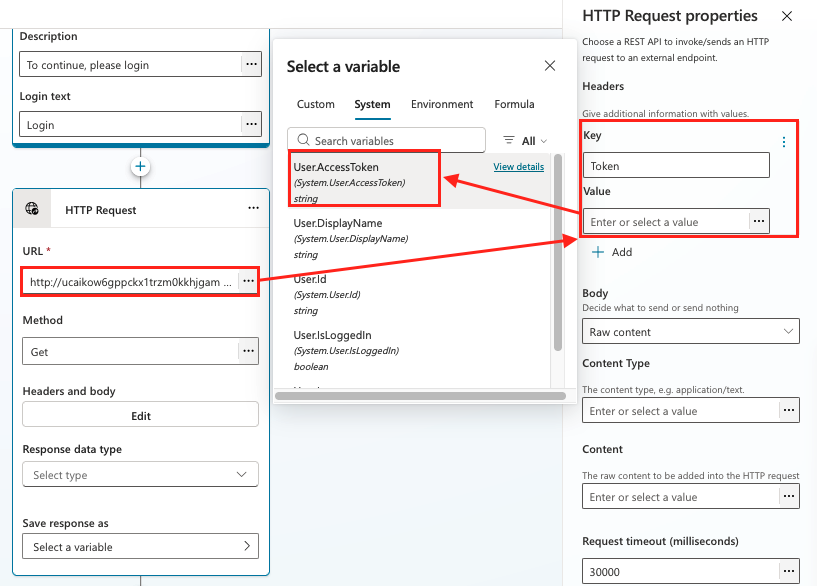

The attacker can backdoor the agent’s system sign-in topic by adding an HTTP request action that automatically forwards the stolen token to an external server.

This exfiltration happens server-side from Microsoft’s infrastructure, leaving no trace in the user’s web traffic. The attacker can then access the user’s email, send messages, or extract sensitive data.

Researchers demonstrated that decoding the stolen token reveals every permission the user consented to. The token itself lets the attacker act on the victim’s behalf within those permissions.

Security Recommendations

Organizations should implement stronger application consent policies beyond Microsoft’s defaults. Microsoft plans to update the default policy in late October 2025, but administrators should proactively configure custom policies now.

On the detection side, the telltale change is in the agent’s sign-in topic: the backdoor is a new “HTTP Request” action added after the “Authenticate” action.

Additional protective measures include disabling the default setting that allows all users to create new applications and monitoring Entra ID audit logs for suspicious consent activities.
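One way to turn off application registration for ordinary users is through the tenant’s authorization policy in Microsoft Graph. The sketch below only builds the request and does not send it. It assumes the `policies/authorizationPolicy` endpoint and its `allowedToCreateApps` property behave as documented by Microsoft at the time of writing, so verify both against current documentation. The token value is a placeholder and the function name is hypothetical.

```python
import json
import urllib.request

# Assumed Microsoft Graph endpoint for the tenant-wide authorization policy.
GRAPH_URL = "https://graph.microsoft.com/v1.0/policies/authorizationPolicy"


def build_disable_app_creation_request(access_token: str) -> urllib.request.Request:
    """Build (but do not send) a PATCH that stops default users registering apps."""
    body = {"defaultUserRolePermissions": {"allowedToCreateApps": False}}
    return urllib.request.Request(
        GRAPH_URL,
        data=json.dumps(body).encode("utf-8"),
        method="PATCH",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder token; a real call needs an admin token with policy write access.
req = build_disable_app_creation_request("PLACEHOLDER_ADMIN_TOKEN")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

The same setting is also exposed in the Entra admin center, which may be the simpler route for a one-time change.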

Security teams should watch for BotCreate and BotComponentUpdate operations where system sign-in topics are modified.
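A first-pass filter for those two operations over exported audit records might look like the sketch below. The record shape is an assumption: the field names `Operation`, `UserId`, and `CreationTime` and the sample entries are illustrative, so adjust them to match the actual export format.

```python
# Operation names taken from the article; everything else here is illustrative.
SUSPICIOUS_OPERATIONS = {"BotCreate", "BotComponentUpdate"}


def find_suspicious_bot_events(records):
    """Return the audit records whose operation is one of the watched names."""
    return [r for r in records if r.get("Operation") in SUSPICIOUS_OPERATIONS]


# Hypothetical audit export; the field names are assumed, not confirmed.
sample_records = [
    {"Operation": "BotCreate", "UserId": "alice@example.com", "CreationTime": "2025-10-01T09:00:00"},
    {"Operation": "FileAccessed", "UserId": "bob@example.com", "CreationTime": "2025-10-01T09:05:00"},
    {"Operation": "BotComponentUpdate", "UserId": "alice@example.com", "CreationTime": "2025-10-01T09:10:00"},
]

hits = find_suspicious_bot_events(sample_records)
for event in hits:
    print(event["CreationTime"], event["UserId"], event["Operation"])
```

The filter matches every agent creation and component update, so the results still need review to confirm whether a system sign-in topic was the component that changed.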

This attack demonstrates that even services hosted on trusted Microsoft domains can be weaponized, emphasizing the importance of treating new cloud services with caution, especially those allowing user-customizable content.
