Researchers have detailed a highly sophisticated offensive cloud operation targeting an AWS environment. The attack was notable for its extreme speed, taking less than 10 minutes to go from initial entry to full administrative control, and for its heavy reliance on AI automation.

The threat actor initiated the attack by discovering valid credentials left exposed in public Simple Storage Service (S3) buckets.

These buckets were named using common AI terminology, suggesting the attackers were specifically hunting for AI-related data.

Once inside, the attackers engaged in LLMjacking, the use of stolen cloud credentials to access hosted Large Language Models (LLMs). Evidence also suggests they used LLMs to automate reconnaissance and generate malicious code in real time.

Sysdig Threat Research Team (TRT) observed an offensive cloud operation targeting an AWS environment in which the threat actor went from initial access to administrative privileges in less than 10 minutes.

The attackers injected Python code into an existing AWS Lambda function. This malicious script, which contained Serbian comments, allowed them to list all Identity and Access Management (IAM) users and create new administrative access keys for the user named frick.
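From a defender's perspective, the sequence described above (a Lambda code change followed shortly by a new IAM access key) can be searched for in audit logs. The following is a minimal sketch, not the method Sysdig TRT used. It assumes CloudTrail-style records with `eventTime`, `eventSource`, and `eventName` fields; the function name `find_lambda_to_key_creation` and the 10-minute window are illustrative choices.

```python
from datetime import datetime, timedelta


def parse_time(ts: str) -> datetime:
    """Parse a CloudTrail-style UTC timestamp such as 2025-01-01T00:00:00Z."""
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ")


def find_lambda_to_key_creation(events, window=timedelta(minutes=10)):
    """Return (update_event, key_event) pairs where an IAM CreateAccessKey
    call follows a Lambda code update within `window`.

    Assumption: Lambda code updates appear with an eventName that starts
    with "UpdateFunctionCode" (CloudTrail may append an API version suffix).
    """
    ordered = sorted(events, key=lambda e: parse_time(e["eventTime"]))
    updates = []  # Lambda code updates seen so far, in time order
    hits = []
    for event in ordered:
        source = event.get("eventSource", "")
        name = event.get("eventName", "")
        if source == "lambda.amazonaws.com" and name.startswith("UpdateFunctionCode"):
            updates.append(event)
        elif source == "iam.amazonaws.com" and name == "CreateAccessKey":
            created_at = parse_time(event["eventTime"])
            for update in updates:
                if created_at - parse_time(update["eventTime"]) <= window:
                    hits.append((update, event))
    return hits
```

A match is only a lead for investigation: legitimate deployments can also update a function and rotate keys close together, so the window and any allowlist of trusted principals would need tuning per environment.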

The use of AI was evident in the errors the attackers made. The scripts attempted to access non-existent GitHub repositories and referenced hallucinated AWS account numbers, both classic signs of LLM-generated content.

Lateral Movement and Resource

After gaining administrative privileges, the attackers moved rapidly to obscure their tracks and expand their foothold. They laterally moved across 19 unique AWS principals (identities), using a mix of compromised users and assumed roles.

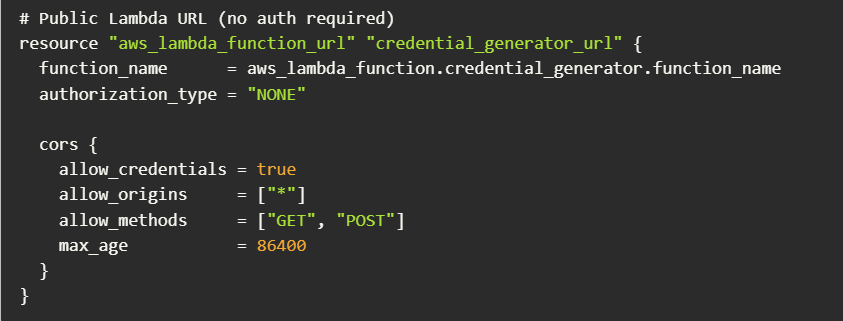

One file of interest was a Terraform module named terraform-bedrock-deploy.tf, which is designed to deploy a backdoor Lambda function for generating Bedrock credentials.

Their primary goal appeared to be resource theft. The attackers abused Amazon Bedrock to invoke multiple high-end AI models, including Claude 3.5 Sonnet and Llama 4 Scout, effectively stealing compute time.

Furthermore, they attempted to launch expensive GPU instances for model training. While their initial attempts to launch massive p5 instances failed, they successfully spun up a p4d.24xlarge instance.

If left running, this single instance would cost the victim approximately $23,600 per month.
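The monthly figure can be sanity-checked with simple arithmetic. The sketch below assumes an on-demand rate of about $32.77 per hour for a p4d.24xlarge and a 30-day month; neither number is stated in the report, and actual pricing varies by region and over time.

```python
# Assumed on-demand hourly rate for p4d.24xlarge in USD (not from the report).
ASSUMED_HOURLY_RATE_USD = 32.77
HOURS_PER_MONTH = 24 * 30  # assumes a 30-day month


def monthly_cost(hourly_rate: float, hours: int = HOURS_PER_MONTH) -> float:
    """Return the cost of running one instance continuously for `hours`."""
    return hourly_rate * hours


if __name__ == "__main__":
    # Prints: $23,594 per month
    print(f"${monthly_cost(ASSUMED_HOURLY_RATE_USD):,.0f} per month")
```

Under these assumptions the result rounds to the roughly $23,600 cited above.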

The attackers installed a JupyterLab server on this instance, creating a backdoor that allowed them to access the computing power even if their AWS credentials were revoked.

Mitigations

This incident highlights the danger of “offensive AI” agents that can execute complex attacks faster than human defenders can react. Sysdig TRT recommends the following critical defense steps:

- Eliminate Long-Term Credentials: Avoid using IAM users with static keys. Use IAM roles with temporary credentials whenever possible.

- Secure S3 Buckets: Ensure buckets containing sensitive AI data or code are never public.

- Restrict Lambda Permissions: Apply the principle of least privilege. Developers should not have sensitive permissions such as lambda:UpdateFunctionCode unless they need them.

- Monitor Bedrock Usage: Create alerts for the invocation of AI models that are not normally used by your organization.
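As an illustration of the Bedrock monitoring recommendation, the sketch below filters CloudTrail-style records for model invocations whose model ID is not on an approved list. It is a sketch under stated assumptions rather than a complete detection: it assumes that Bedrock invocation events are being logged, that each record carries the model under `requestParameters.modelId`, and that the event names listed are the ones of interest. The function name and the model IDs in the usage example are hypothetical placeholders.

```python
# Event names assumed to represent model invocations in CloudTrail records.
BEDROCK_INVOKE_EVENTS = {
    "InvokeModel",
    "InvokeModelWithResponseStream",
    "Converse",
    "ConverseStream",
}


def unexpected_bedrock_invocations(events, allowed_model_ids):
    """Return invocation events whose modelId is not in `allowed_model_ids`.

    Events with no modelId at all are also returned, since they cannot be
    matched against the allowlist.
    """
    flagged = []
    for event in events:
        if event.get("eventSource") != "bedrock.amazonaws.com":
            continue
        if event.get("eventName") not in BEDROCK_INVOKE_EVENTS:
            continue
        model_id = (event.get("requestParameters") or {}).get("modelId")
        if model_id not in allowed_model_ids:
            flagged.append(event)
    return flagged


if __name__ == "__main__":
    # Hypothetical sample records and placeholder model IDs.
    sample = [
        {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
         "requestParameters": {"modelId": "example.approved-model-v1"}},
        {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
         "requestParameters": {"modelId": "example.unapproved-model-v1"}},
    ]
    for hit in unexpected_bedrock_invocations(sample, {"example.approved-model-v1"}):
        print("unexpected model:", hit["requestParameters"]["modelId"])
```

An allowlist is used here instead of a blocklist so that newly released models are flagged by default until someone approves them.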

By automating the attack chain, attackers have significantly reduced the time defenders have to respond. Organizations must prioritize automated detection to match this new speed of exploitation.
