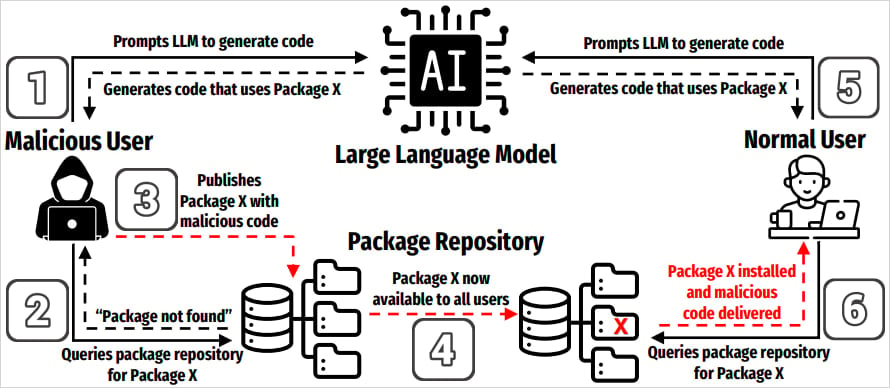

A new class of supply chain attacks named ‘slopsquatting’ has emerged from the increased use of generative AI tools for coding and these models’ tendency to “hallucinate” non-existent package names.

The term slopsquatting was coined by security researcher Seth Larson as a spin on typosquatting, an attack method that tricks developers into installing malicious packages by using names that closely resemble popular libraries.

Unlike typosquatting, slopsquatting doesn’t rely on misspellings. Instead, threat actors could create malicious packages on indexes like PyPI and npm named after ones commonly made up by AI models in coding examples.

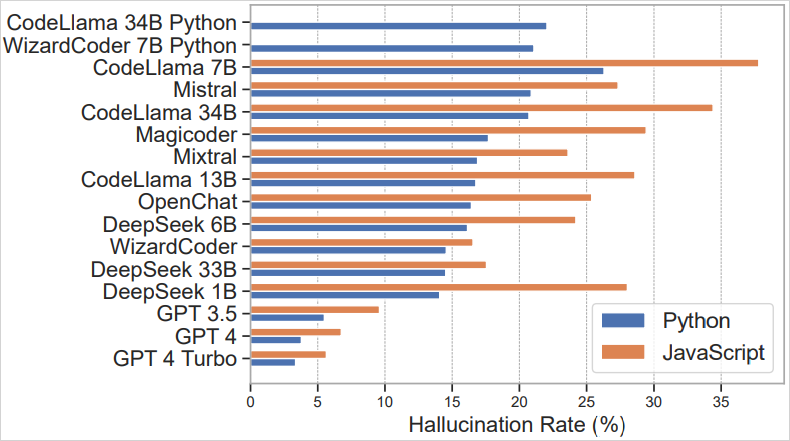

A research paper about package hallucinations published in March 2025 demonstrates that in roughly 20% of the examined cases (576,000 generated Python and JavaScript code samples), recommended packages didn’t exist.

Hallucination rates were higher for open-source LLMs like CodeLlama, DeepSeek, WizardCoder, and Mistral, but commercial tools like ChatGPT-4 still hallucinated at a rate of about 5%, which is far from negligible.

Source: arxiv.org

While the number of unique hallucinated package names logged in the study was large, surpassing 200,000, 43% of those were consistently repeated across similar prompts, and 58% re-appeared at least once again within ten runs.

The study showed that 38% of these hallucinated package names appeared to be inspired by real packages, 13% were the result of typos, and the remainder, 51%, were completely fabricated.

Although there are no signs that attackers have started taking advantage of this new type of attack, researchers from open-source cybersecurity company Socket warn that hallucinated package names are common, repeatable, and semantically plausible, creating a predictable attack surface that could be easily weaponized.

“Overall, 58% of hallucinated packages were repeated more than once across ten runs, indicating that a majority of hallucinations are not just random noise, but repeatable artifacts of how the models respond to certain prompts,” explain the Socket researchers.

“That repeatability increases their value to attackers, making it easier to identify viable slopsquatting targets by observing just a small number of model outputs.”

Source: arxiv.org

The most direct way to mitigate this risk is to verify package names manually and never assume that a package mentioned in an AI-generated code snippet is real or safe.

Using dependency scanners, lockfiles, and hash verification to pin packages to known, trusted versions is also an effective way to improve security.
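To make the idea of hash verification concrete, here is a minimal Python sketch that compares a downloaded file's SHA-256 digest with a pinned value. The helper names are hypothetical. In practice, package managers can do this for you: pip, for instance, supports `--hash=sha256:...` entries in requirements files and a `--require-hashes` mode that refuses to install anything without a matching hash.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(64 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_pin(path: Path, pinned_sha256: str) -> bool:
    """Return True only if the file's digest equals the pinned digest."""
    return sha256_of(path) == pinned_sha256.strip().lower()
```

A pinned hash only proves that the file is the same one that was reviewed when the pin was created, so the pin itself has to come from a package and version you have already checked.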

The research has shown that lowering AI “temperature” settings (less randomness) reduces hallucinations, so if you’re into AI-assisted or vibe coding, this is an important factor to consider.
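For readers unfamiliar with the setting, the sketch below shows the standard temperature-scaled softmax that sampling-based text generation typically uses. It is a generic illustration, not code from the study, and the logit values in the usage note are made up. Dividing the model's scores by a lower temperature concentrates probability on the highest-scoring token, which is what "less randomness" means here.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

With made-up scores such as `[2.0, 1.0, 0.5]`, a temperature of 0.5 gives the top candidate a larger share of the probability than a temperature of 1.5 does, so less likely tokens are sampled less often.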

Ultimately, it is prudent to always test AI-generated code in a safe, isolated environment before running or deploying it in production environments.
