AI malware may be in the early stages of development, but it’s already being detected in cyberattacks, according to new research published this week.

Google researchers looked at five AI-enabled malware samples – three of which have been observed in the wild – and found that the malware was often lacking in functionality and easily detected. Nonetheless, the research offers insight into where the use of AI in threat development may go in the future.

“Although some recent implementations of novel AI techniques are experimental, they provide an early indicator of how threats are evolving and how they can potentially integrate AI capabilities into future intrusion activity,” the researchers wrote.

AI Malware Includes Infostealers, Ransomware and More

The AI-enabled malware samples included a reverse shell, a dropper, ransomware, a data miner and an infostealer.

The researchers said malware families like PROMPTFLUX and PROMPTSTEAL are the first to use Large Language Models (LLMs) during execution. “These tools dynamically generate malicious scripts, obfuscate their own code to evade detection, and leverage AI models to create malicious functions on demand, rather than hard-coding them into the malware,” they said. “While still nascent, this represents a significant step toward more autonomous and adaptive malware.”

“[A]dversaries are no longer leveraging artificial intelligence (AI) just for productivity gains, they are deploying novel AI-enabled malware in active operations,” they added. “This marks a new operational phase of AI abuse, involving tools that dynamically alter behavior mid-execution.”

However, the new AI malware samples are only so effective. When checked on VirusTotal using hashes provided by Google, every sample was detected by roughly a third or more of security tools, and two of the samples were detected by 65% and 69% of tools.

AI Malware Samples and Detection Rates

The reverse shell, FRUITSHELL (VirusTotal), is publicly available and written in PowerShell. It establishes a remote connection to a command-and-control (C2) server and enables a threat actor to run arbitrary commands on a compromised system. “Notably, this code family contains hard-coded prompts meant to bypass detection or analysis by LLM-powered security systems,” the researchers said.

It was detected by 20 of 62 security tools (32%), and has been observed in threat actor operations.

The dropper, PROMPTFLUX (VirusTotal), was written in VBScript and uses an embedded decoy installer for obfuscation. It uses the Google Gemini API to regenerate itself, prompting the LLM to rewrite its source code and saving the new version to the Startup folder for persistence. The malware also attempts to spread by copying itself to removable drives and mapped network shares.

Google said the malware appears to still be under development, as incomplete features are commented out and the malware limits Gemini API calls. “The current state of this malware does not demonstrate an ability to compromise a victim network or device,” the researchers said.

The most interesting feature of PROMPTFLUX may be its ability to periodically query Gemini to obtain new code for antivirus evasion.

“While PROMPTFLUX is likely still in research and development phases, this type of obfuscation technique is an early and significant indicator of how malicious operators will likely augment their campaigns with AI moving forward,” they said.

It was detected by 23 of 62 tools (37%).

The ransomware, PROMPTLOCK (VirusTotal), is a proof-of-concept, cross-platform ransomware written in Go that was developed by NYU researchers. It uses an LLM to dynamically generate malicious Lua scripts at runtime, and is capable of filesystem reconnaissance, data exfiltration, and file encryption on Windows and Linux systems.

It was detected by 50 of 72 security tools on VirusTotal (69%).

The data miner, PROMPTSTEAL (VirusTotal), was written in Python and uses the Hugging Face API to query the LLM “Qwen2.5-Coder-32B-Instruct” to generate Windows commands to gather system information and documents.

The Russian threat group APT28 (Fancy Bear) has been observed using PROMPTSTEAL, which the researchers said is their “first observation of malware querying an LLM deployed in live operations.”

It was detected by 47 of 72 security tools (65%).

The infostealer, QUIETVAULT (VirusTotal), was written in JavaScript and targets GitHub and npm tokens. The credential stealer uses an AI prompt and AI CLI tools to look for other potential secrets and exfiltrate files to GitHub.

It has been observed in threat actor operations and was detected by 29 of 62 security tools (47%).
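For readers who want to check the percentages, the detection rates follow directly from the VirusTotal counts quoted for each sample. The short Python sketch below recomputes them. The dictionary and function names are illustrative only and do not come from the Google report, and the counts are the snapshot reported here, which may change as more security tools add signatures.

```python
# Detection counts as quoted in this article: (tools that detected, tools total).
SAMPLES = {
    "FRUITSHELL": (20, 62),
    "PROMPTFLUX": (23, 62),
    "PROMPTLOCK": (50, 72),
    "PROMPTSTEAL": (47, 72),
    "QUIETVAULT": (29, 62),
}


def detection_rate(detected: int, total: int) -> int:
    """Return the detection rate as a whole-number percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * detected / total)


def rates(samples: dict) -> dict:
    """Map each sample name to its rounded detection percentage."""
    return {name: detection_rate(d, t) for name, (d, t) in samples.items()}


if __name__ == "__main__":
    # Print the samples from least to most widely detected.
    for name, pct in sorted(rates(SAMPLES).items(), key=lambda kv: kv[1]):
        detected, total = SAMPLES[name]
        print(f"{name:<12} {detected}/{total}  {pct}%")
```

Sorting by rate makes the spread easy to see: the three samples scanned by 62 tools sit below 50%, while the two scanned by 72 tools sit at 65% and above.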

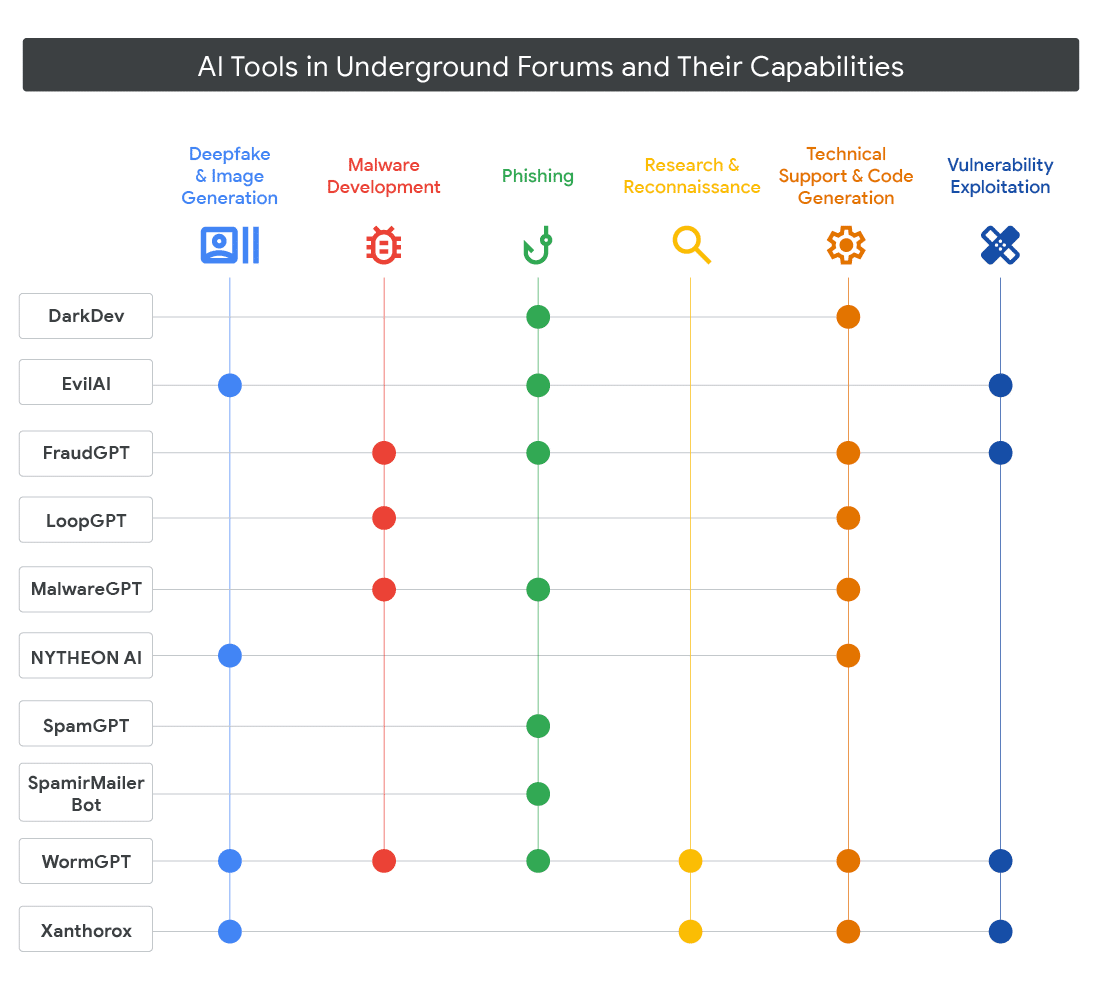

The full Google report also looks at advanced persistent threat (APT) use of AI tools and includes this comparison of malicious AI tools such as WormGPT: