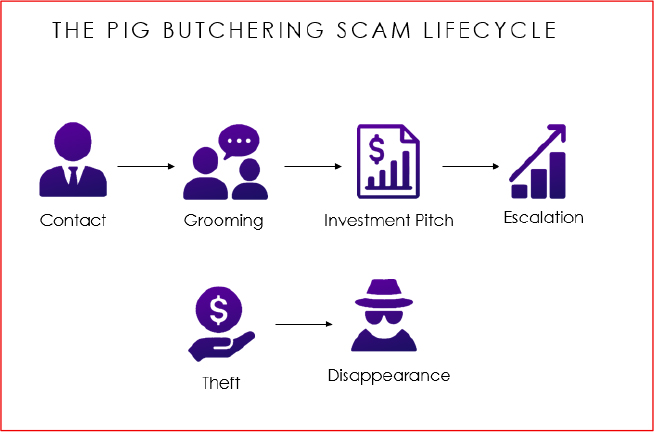

Pig-butchering scams, the sophisticated long-con investment fraud schemes that have plagued millions globally, have reached unprecedented scale through the strategic deployment of artificial intelligence technologies.

Once reliant on labor-intensive social engineering, these cybercriminal enterprises now leverage AI-generated identities, automated messaging systems, and deepfake video synthesis to orchestrate operations at an industrial scale, generating estimated annual losses of tens of billions of dollars.

The evolution marks a critical inflection point in cybercrime sophistication. What began as small-scale networks operating simple scripts via social media has transformed into structured criminal syndicates managing sprawling scam compounds staffed by hundreds or thousands of individuals across Southeast Asia, East Asia, and beyond.

Central to this evolution is the strategic integration of AI technologies that amplify the psychological exploitation at the heart of these schemes.

AI-generated imagery has become the foundation of initial contact operations. Scammers deploy AI-assisted tools to manufacture entirely believable online personas, generating synthetic profile photographs that bypass visual authenticity checks on dating platforms, social networks, and messaging applications.

Unlike recycled or stolen photos, AI-generated images leave minimal forensic traces and can be customized rapidly to target specific victim demographics.

This technological advantage eliminates one of the traditional friction points in scaling romance-based recruitment: sourcing enough convincing visual material.

Advanced deepfake synthesis has introduced an additional layer of manipulation. Scammers can now create fabricated audio and video content, whether brief voice messages or short video clips, that further cements the emotional connection with victims.

These fabricated artifacts provide a sense of authenticity that text-only interaction cannot achieve, accelerating the grooming timeline and increasing manipulation success rates.

Automated Message Generation

Natural language processing and large language models have revolutionized the capacity of scam operators to manage victim pools. Pig-butchering scams are not opportunistic one-off frauds; they represent an entire cybercrime industry.

Operators no longer require human scammers to craft every message manually. AI-assisted systems generate contextually appropriate responses, maintain conversation consistency across multiple personas, and adapt messaging based on victim psychology profiles.

This automation enables individual operators to manage dozens or hundreds of simultaneous conversations, a level of scale that would be impossible with manual labor alone.

The sophistication of these systems means conversations appear natural and emotionally resonant.

AI systems trained on relationship psychology can identify optimal moments for investment introduction, calibrate pressure escalation, and respond to victim resistance with contextually appropriate reassurance.

As a result, grooming cycles that historically required months of human effort can now be compressed through automation.

AI capabilities extend beyond victim interaction to infrastructure management. Machine learning systems optimize domain registration patterns, automate platform deployment workflows, and enable rapid recreation of fake trading applications when authorities block existing infrastructure.

Automated backend systems manage fake dashboard generation, simulating market activity that appears consistent with legitimate exchange behavior.

Addressing AI-amplified pig-butchering requires coordinated action: platform deployment of advanced content detection, financial institution integration of blockchain forensics, international policy harmonization around cryptocurrency AML frameworks, and public awareness initiatives.

Law Enforcement Challenges

The integration of AI has transformed pig-butchering from a cybercrime category affecting individual victims into an institutional threat.

The Kansas case involving the collapse of Heartland Tri-State Bank, in which CEO Shan Hanes was manipulated into transferring $47 million, demonstrates that even highly educated decision-makers fall victim to these sophisticated campaigns. The scalability enabled by AI increases the likelihood of additional high-impact organizational targeting.

Law enforcement agencies face compounding challenges. Traditional detection methods relying on behavioral pattern recognition struggle against AI-generated content and automated messaging.

Cross-border enforcement remains complicated by fragmented cryptocurrency regulation and the operational shelter provided by scam compounds in weak-governance regions.

Without rapid intervention, these operations will continue exploiting AI’s capacity to scale psychological manipulation at unprecedented scope and speed.
