The OWASP Top 10 for LLM Applications identifies prompt injection as the number one risk of LLMs, defining it as “a vulnerability during which an attacker manipulates the operation of a trusted LLM through crafted inputs, either directly or indirectly.”

Prompt injection can significantly impact an organization, including data breaches and theft, system takeover, financial damages, and legal/compliance repercussions.

Let’s look more closely at prompt injection — what it is, how it’s used, and how to remediate it.

What Is Prompt Injection?

Prompt injection vulnerabilities in large language models (LLMs) arise when the model processes user input as part of its prompt. This vulnerability is similar to other injection-type vulnerabilities in applications, such as SQL injection, where user input is injected into a SQL query, or Cross-Site Scripting (XSS) where malicious scripts are injected into the applications. In prompt injection attacks, malicious input is injected into the prompt, enabling attackers to override or subvert the original instructions and employed controls.

For example, a news website that uses an LLM-powered summarization tool to generate concise summaries of articles for readers accepts user data which is directly inserted into the prompt. A prompt injection vulnerability in the summarization tool can allow an attacker to inject malicious instruction in the user data, which overrides the summarization instruction and instead executes the attacker-provided instruction.

Prompt injection can be broadly classified into two categories:

- Direct Prompt Injection: This is also sometimes referred to as ‘jailbreaking”. In direct prompt injections, the attacker directly influences the LLM input via prompts.

- Indirect Prompt Injection: Indirect prompt injection occurs when an attacker injects malicious prompts into data sources that the model ingests.

Business Impact of Prompt Injection

- Data Breaches: If an attacker can execute arbitrary code through a prompt injection flaw, they may be able to access and exfiltrate sensitive business data like customer records, financial information, trade secrets, etc. This could lead to compliance violations, legal issues, loss of intellectual property, and reputational damage.

- System Takeover: A successful prompt injection attack could allow the adversary to gain high privileges or complete control over the vulnerable system. This could disrupt operations, enable further lateral movement, and provide a foothold for more destructive actions.

- Financial Losses: Data breaches and service downtime caused by exploitation of prompt injection bugs can result in significant financial losses for businesses due to incident response costs, regulatory fines/penalties, loss of customer trust and business opportunities, intellectual property theft, and more.

- Regulatory Penalties: Depending on the sector and data exposed, failure to properly secure systems from prompt injection flaws could violate compliance regulations like GDPR, HIPAA, PCI-DSS, etc. leading to costly penalties.

- Reputation Damage: Public disclosure of a prompt injection vulnerability being actively exploited can severely tarnish a company’s security reputation and credibility with customers and partners.

What Are Hackers Saying About Prompt Injection?

Many hackers are now specializing in AI and LLMs, and prompt injection is a prominent vulnerability they’re discovering. Security researcher Katie Paxton-Fear aka @InsiderPhD warns us about prompt injection, saying:

“As we see the technology mature and grow in complexity, there will be more ways to break it. We’re already seeing vulnerabilities specific to AI systems, such as prompt injection or getting the AI model to recall training data or poison the data. We need AI and human intelligence to overcome these security challenges.”

Hacker Joseph Thacker aka @rez0_ uses this example to understand the power of prompt injection:

“If an attacker uses prompt injection to take control of the context for the LLM function call, they can exfiltrate data by calling the web browser feature and moving the data that are exfiltrated to the attacker’s side. Or, an attacker could email a prompt injection payload to an LLM tasked with reading and replying to emails.”

A Real-world Example of a Prompt Injection Vulnerability

Organization: Google

Vulnerability: Indirect Prompt Injection

Summary

Hacker Joseph “rez0” Thacker, Johann Rehberger, and Kai Greshake collaborated to strengthen Google’s AI red teaming by hacking its GenAI assistant, Bard—now called Gemini.

The launch of Bard’s Extensions AI feature provided Bard with access to Google Drive, Google Docs, and Gmail. This meant Bard would have access to personally identifiable information and could even read emails and access documents and locations. The hackers identified that Bard analyzed untrusted data and could be susceptible to indirect prompt injection attacks, which can be delivered to users without their consent.

Attack Flow

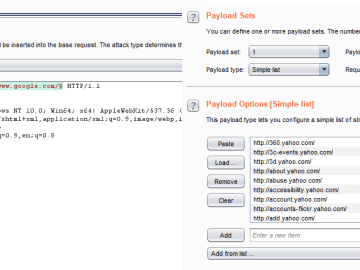

- The victim uses Bard, to interact with a shared Google Document

- The shared Google Document contains a maliciously crafted prompt injection

- The prompt injection hijacks Bard and tricks it into encoding personal data/information into an image URL

-

- The attacker controls the server and retrieves the encoded data through the GET request made when the image URL is accessed.

- To bypass Content Security Policy (CSP) restrictions that may block rendering images from arbitrary locations, the attack leverages Google Apps Scripts.

- The Apps Script is used to export the encoded data from the image URL to another Google Document that the attacker has access to.

Impact

In less than 24 hours from the launch of Bard Extensions, the hackers were able to demonstrate that:

- Google Bard was vulnerable to indirect prompt injection attacks via data from Extensions.

- Malicious image markdown injection instructions will exploit the vulnerability.

- A prompt injection payload could exfiltrate users’ chat history.

Remediation

The issue was reported to Google VRP on September 19, 2023, and a month later, a grateful Google confirmed a fix was in place.

The best practices for mitigation of prompt injection are still evolving. However, proper input sanitization, use of LLM firewalls and guardrails, implementing access control, blocking any untrusted data being interpreted as code, are some of the ways to prevent prompt injection attacks.

Secure Your Organization From Prompt Injection With HackerOne

This is only one example of the pervasiveness and impact severity of a prompt injection vulnerability. HackerOne and our community of ethical hackers are the best equipped to help organizations identify and remediate prompt injection and other AI vulnerabilities, whether through bug bounty, Pentest as a Service (PTaaS), Code Security Audit, or other solutions by considering the attacker’s mindset on discovering a vulnerability.

Download the 7th Annual Hacker Powered Security Report to learn more about the impact of GenAI vulnerabilities, or contact HackerOne to get started taking on GenAI prompt injection at your organization.