Apple unveiled its new ‘Apple Intelligence’ feature today at its 2024 Worldwide Developers Conference, finally revealing the generative AI strategy that will power new personalized experiences on Apple devices.

“Apple Intelligence is the personal intelligence system that puts powerful generative models right at the core of your iPhone, iPad, and Mac,” explained Apple during the WWDC keynote.

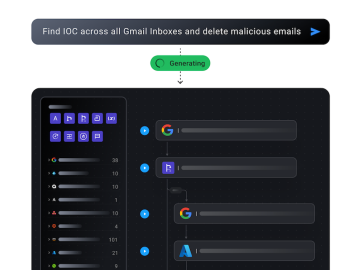

The feature is deeply integrated into iOS 18, iPadOS 18, and macOS Sequoia to analyze, retrieve, and perform actions on the data on your device.

The AI-powered feature works by creating an on-device semantic index to store data retrieved from your emails, images, websites you visit, and apps you use.
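Apple has not published how this index is built, so the following is only a toy sketch of the general idea behind a semantic index: content is turned into vectors, and a query is matched against the closest ones. It assumes simple word-count vectors as a stand-in for learned embeddings, and every name in it (`ToySemanticIndex`, `embed`, the sample entries) is hypothetical rather than anything Apple has described.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in for a learned embedding: a bag-of-words count vector (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToySemanticIndex:
    """Hypothetical in-memory index; not Apple's design."""

    def __init__(self):
        self.entries = []  # list of (source, text, vector) tuples

    def add(self, source, text):
        self.entries.append((source, text, embed(text)))

    def search(self, query, top_k=1):
        # Rank every stored entry by similarity to the query vector.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[2]), reverse=True)
        return [(source, text) for source, text, _ in ranked[:top_k]]


index = ToySemanticIndex()
index.add("mail", "Email from Mom about dinner plans on Sunday")
index.add("photos", "Photo of the dog at the beach")
index.add("safari", "Article about macOS Sequoia features")

results = index.search("email from mom")
print(results)
```

A real system would likely use model-generated embeddings and persistent, protected storage; this sketch only shows why an index of this kind lets a plain-language query find the right item.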

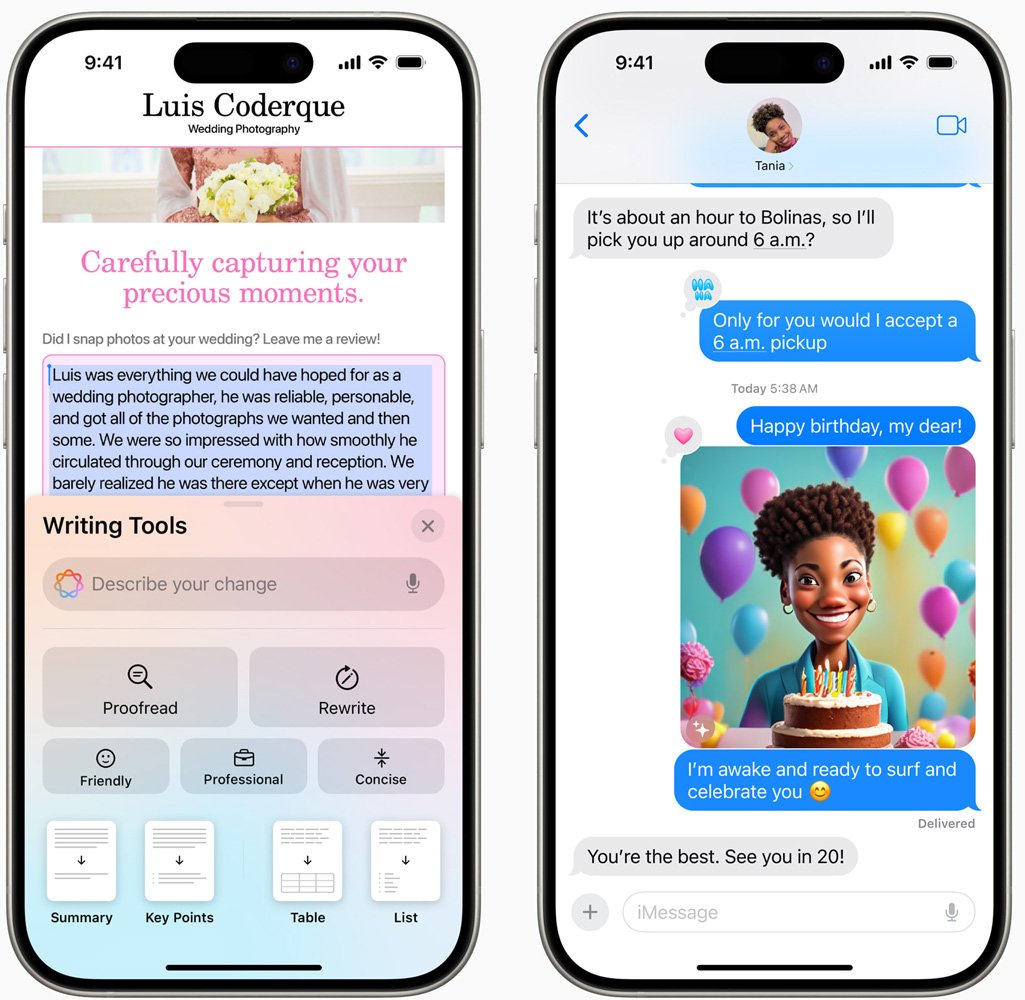

You can then use natural language queries to generate images, get help writing and proofreading your content, and retrieve data stored in your apps.

Apple Intelligence will also make Siri more usable by letting you make natural language requests to access your information, like asking Siri to “Open the email I received yesterday from Mom.”

Apple also said it was partnering with other AI platforms to provide more information from external sources. According to Axios, one of these partners is OpenAI, which will allow Siri to pass more challenging queries to ChatGPT.

Axios reports that Apple will keep these requests private by obscuring IP addresses and that OpenAI won’t store the queries. However, for users who link their OpenAI accounts with iOS, OpenAI’s data policies will apply instead.

Because the AI models that power this feature run directly on the device, it will only be available on the iPhone 15 Pro and on iPads and Macs with M1 or later chips.

Privacy, privacy, privacy

For years, big tech has been running rampant with its users’ data, using it as fuel to power its features, advertisements, and marketing and development strategies.

When Microsoft announced Windows Recall, there was an immediate backlash, with many thinking the feature was a privacy nightmare. The uproar ultimately led to Microsoft adding enhancements to protect the data stored on devices from information-stealing malware and other threats.

Apple says it built Apple Intelligence from the ground up with privacy in mind by performing almost all of its processing locally on the device. If a query requires a more complex AI model that can’t be run locally, the query would be sent to special cloud servers called ‘Private Cloud Compute.’

The company says that only the data required to process the query would be sent and never stored on its servers or accessible to Apple employees.

Apple says that independent experts will verify all devices used in Private Cloud Compute and that iPhones, Macs, and iPads won’t connect to a server unless its software has been publicly logged for inspection.

While it is true that macOS and iOS devices are not targeted by malware as heavily as Windows devices, that does not mean they are unaffected.

Information-stealing malware exists for Mac devices, and high-risk iPhone users, such as politicians, human rights activists, government employees, and journalists, are known to be targeted by spyware.

Therefore, malware on these devices could theoretically attempt to steal the Apple Intelligence semantic index for offline viewing if its files are unencrypted while a user is logged in.

However, little is known about how the semantic index is secured or whether this data will be as easy to access as it was with Windows Recall.

Furthermore, Apple has not shared whether it plans to turn off Apple Intelligence when a device is in Lockdown Mode, a setting that heavily restricts various features on Apple devices to increase high-risk users’ privacy and security.

BleepingComputer contacted Apple with questions about these potential privacy risks but has not heard back.