Cyber risk management has many components. Those who do it well will conduct comprehensive risk assessments, enact well-documented and well-communicated processes and controls, and fully implement monitoring and review requirements.

Processes and controls typically include policies, which set out in detail the acceptable use of company technology. There will usually be examples of activities that are specifically forbidden, such as using someone else’s login credentials or sharing your own. To make this “stick”, there will almost certainly be training: some on “the basics” and on specific systems, but also on related matters, such as the requirements of data protection legislation.

Yet despite all these precautions, people will still make mistakes. No level of controls, processes or training can overcome the reality that humans are fallible. The precautions can only reduce the probability – or, as we might think of it, the frequency. Why is this?

One reason mistakes happen is that the processes and controls themselves are inadequate. For example, it is all too easy for even a moderately determined scammer to find out their mark’s date or place of birth, or their mother’s maiden name. Is it still appropriate to ask customers to use these details for security? And whose fault is it if a scammer gets past security checks simply by answering these questions correctly? Certainly not the poor customer service operative, who is simply following the process.

And we are all, I fear, very aware of the rise of attacks that exploit aspects of human nature in ways nobody foresaw: highly skilled scammers are using guile, and just enough elements of truth, to social-engineer even well-trained people.

Don’t shoot the messenger

Those “well-documented and well-communicated processes and controls” mentioned earlier frequently contain phrases such as “disciplinary action” and “gross misconduct”, listed as possible consequences for anyone who fails to follow the rules. In my view, if someone follows a procedure exactly but the procedure itself is poorly designed (or has been outflanked by new types of attack), blaming the individual is both unfair and counterproductive.

Genuine negligence or deliberate actions should be handled appropriately, but apportioning blame and meting out punishment must be the final step in an objective, reasonable investigation. It should certainly not be the default reaction.

So far, so reasonable, yes? But things are a little more complicated than this. It’s all very well saying, “don’t blame the individual, blame the company”. In reality, no “company” does anything; only people do. The controls, processes and procedures that let you down were created by people, just different people. If we blame the designers of those controls, processes and procedures, we are merely shifting the blame, which is just as counterproductive.

A solution: change the environment

Consider the following examples.

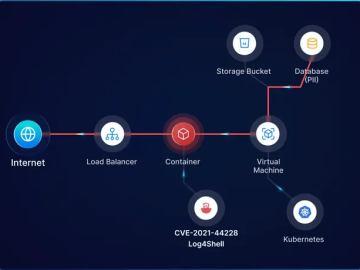

Imagine a salesperson whose job is dealing with requests for information (RFIs) or requests for offers (RFOs). Answers to such enquiries often arrive as a link or an attachment, so it is essentially the salesperson’s job to open attachments and click links. Is it reasonable for a security team to expect this person to tell a benign attachment from a malicious one? Perhaps it was 10 years ago. Today, people are routinely exposed to remarkably high-quality scams, and humans are ill-equipped to distinguish good links and attachments from bad ones. Policies and controls that forbid our salesperson from clicking links or opening attachments are, in effect, telling them not to do their job. So what happens? Exactly what already happens: our salesperson keeps opening those documents, because that’s how they (and their company) make money.

Let’s assume that this organization uses Microsoft Windows. If our salesperson opens a malicious attachment, malware may be installed. If they’re lucky, it will be confined to a single device; if they’re unlucky, it may spread throughout the organization, causing chaos. Changing the environment in this example could be as simple as swapping the Windows devices for iPads or Chromebooks. The salesperson can still do their job, because most of the tools they use are web-based. But when they click and open that would-be malicious attachment, malware written for Windows has nothing to run on, so in most cases nothing happens.

Let’s consider another example. Many large enterprises forbid the use of specific tools. Imagine that an organization doesn’t permit the use of Dropbox. There will certainly be a policy against it, and there may also be some technical controls, but most often there are just policies and some training telling staff: “Don’t do that.” Now imagine you’re a designer at this organization, and your work regularly requires you to exchange large files with people or organizations outside the company, because (guess what!) it’s part of your job. You’re familiar with Dropbox, and the internally provided tool is inadequate. So what do you do? By not understanding your job, and by assuming it knows what tools you need, “Security” has made your job harder. But you were hired to do that job, so you are forced to break the policy, and then you are blamed for it.

What could Security do instead? Well, it could simply ask you: “What tools are you using? Why those and not the officially approved ones?”

Sometimes you’ll still be told no, and threatened with the consequences described earlier. But a growing number of CISOs are starting to ask these questions with a different motive: what happens if we change the approved tools? Will that make it easier for you to do the “right things”? Perhaps our designer will get an approved replacement for Dropbox.

Time to rebalance your cyber spending?

Current research suggests that 95% of cyber security incidents can be traced back to human error. Leaving aside that this is a terrible way of describing the situation (it implies fault and blame), the figure is hard to reconcile with another statistic, which suggests that security budgets are split 85% on technology, 12% on policies, and a miserly 3% on people.

Now, I’m not naïve, and I’m not saying that spending ought to be in exact proportion. But, to me, there’s a clear imbalance. It would be far better to divert some of that technology spending into making it easier for employees to do their jobs while also doing the right thing, instead of ignoring how difficult that currently is for them.

Managers should use those additional resources to work out how to genuinely change the environment in which employees operate, making it easier for them to do their jobs securely and practically. They should take an iterative, collaborative approach to creating a frictionless, safer environment, working positively and without blame. This is far more productive than slowing employees down with hard-to-follow policies and then blaming them when they don’t comply.

Here’s the thing: this is not a new approach. In fact, it’s 60 years old and is called the People, Process, Technology framework. It remains widely accepted and widely used, and it is even more relevant today given how pervasive technology has become and the pace of digital transformation. Perhaps security teams need to reacquaint themselves with it, or remind themselves of its benefits, so that we can get away from the blame game and do a better job of preventing incidents involving humans.