In this Help Net Security interview, Ian Swanson, CEO of Protect AI, discusses the concept of “secure AI by design.” By adopting frameworks like Machine Learning Security Operations (MLSecOps) and focusing on transparency, organizations can build resilient AI systems that are both safe and trustworthy.

The idea of “secure AI by design” is becoming more prominent. What does this look like in practice? Can you give specific examples of how organizations can embed security from the earliest stages of AI development?

Ensuring that AI systems are safe, secure, and trustworthy starts by building AI/ML securely from the ground up. Embedding security in AI development requires addressing unique threats, from model serialization attacks to large language model jailbreaks.

Organizations can start by cataloging their ML models and environments using a machine learning bill-of-materials (MLBOM) and continuously scanning for vulnerabilities.

Machine learning models, which power AI applications, can be vulnerable and introduce risk at multiple stages of the development lifecycle. In April 2024, America’s Cyber Defense Agency issued a Joint Guidance on Deploying AI Systems Securely that included a warning to not run models right away in the enterprise environment and to use AI-specific scanners to detect potential malicious code. Scanning machine learning models for malicious code at every step of the lifecycle as part of your CI/CD pipeline is critical. This continuous risk assessment ensures that models aren’t compromised at any stage of their lifecycle.

A secure approach also involves training ML engineers and data scientists in secure coding practices, enforcing strict access controls, and avoiding unsecured Jupyter Notebooks as development environments. Implementing a code-first approach ensures visibility and accountability throughout the AI pipeline.

Finally, building red team capabilities helps test for vulnerabilities such as prompt injection attacks in AI applications. With an MLSecOps approach, collaboration across security, data science, and operations teams creates resilient AI systems capable of withstanding emerging threats.

What key principles should organizations consider when designing secure AI systems, according to frameworks like NCSC or MITRE?

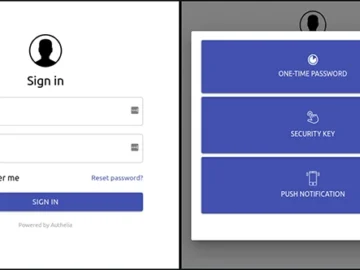

To design secure AI systems according to key principles outlined in frameworks like those from NCSC, MITRE, NIST, and OWASP, organizations should focus on transparency and auditability, adhering to privacy regulations like GDPR, and conducting thorough risk assessments. Securing training data, continuously monitoring models for vulnerabilities, and addressing supply chain threats is essential.

Additionally, adopting practices like red-team testing, threat modeling, and following a secure development lifecycle, such as MLSecOps, will help protect AI systems against emerging security risks.

As AI systems become more widely adopted, what new or emerging threats do you see on the horizon? How can organizations best prepare for these evolving risks?

As AI systems become more widely adopted, we’re seeing emerging threats in the supply chain, invisible attacks hidden in machine learning models, and GenAI applications being compromised, resulting in technical, operational, and reputational enterprise risk.

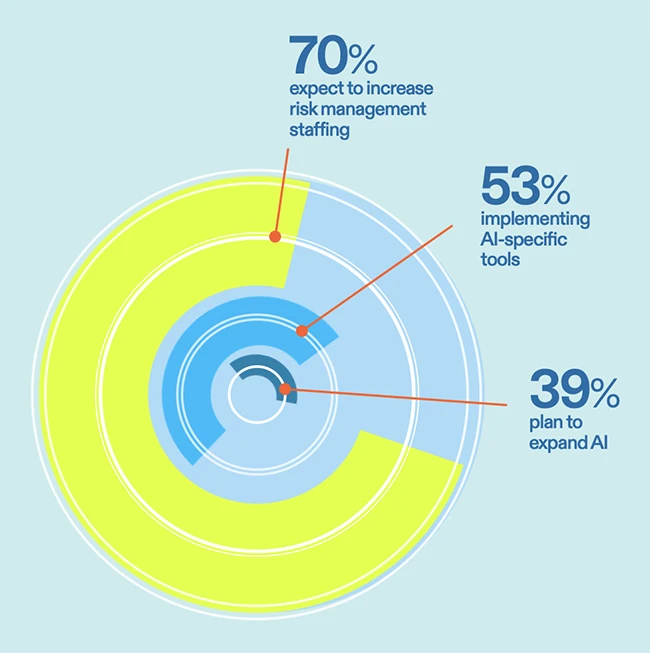

Organizations can best prepare by adopting a robust MLSecOps strategy, integrating security early in the AI lifecycle, continuously scanning ML models and AI applications for risks, and in educating their team with courses such as MLSecOps foundations. By updating your build processes to include secure by design, using AI specific security solutions, and upleveling AI security knowledge, organizations can build resilience to evolving AI/ML threats.

How should public sector organizations, which often handle critical infrastructure and sensitive data, approach AI security differently from the private sector?

Public sector organizations, responsible for critical infrastructure and sensitive data, must approach AI security with even more vigilance compared to the private sector. The potential consequences of breaches—such as disruptions to national security, public safety, or essential services—require a more proactive stance.

These organizations should adopt stricter compliance frameworks, such as NIST guidelines or specific government directives like FedRAMP for cloud security, ensuring end-to-end encryption and robust access controls. Moreover, public sector entities need to build AI models with transparency and auditability in mind, following “secure by design” principles from the earliest stages of development.

Public sector AI systems should also have robust incident response plans to rapidly address new vulnerabilities. Regular red-team testing, threat modeling, and cross-agency collaboration are essential to mitigating risks. In fact, our work with various government agencies helps equip them with MLSecOps processes tailored to managing secure AI/ML development and responding to emerging threats.

I’ve personally contributed to the development of standards and recommendations designed to protect AI systems in government settings, helping public sector organizations stay ahead of evolving risks.

By staying engaged in these collaborative efforts and adopting security-first approaches, the public sector can effectively safeguard their critical AI systems.

Explainability and transparency are increasingly viewed as essential to AI security. How can organizations ensure their AI systems are secure and explainable to stakeholders?

To ensure AI systems are both secure and explainable, organizations must implement transparent model development and governance practices. First, they should adopt frameworks that make model decisions interpretable, such as Explainable AI (XAI) techniques that clarify how outputs are derived. Additionally, using a MLSecOps approach ensures continuous monitoring of the system’s behavior, enhancing both security and transparency.

Collaboration between data scientists, security teams, and business stakeholders is key—ensuring that risks, limitations, and decisions are communicated in a way that’s understandable to non-technical stakeholders.

Lastly, organizations should document their models’ training data, assumptions, and decision pathways to provide accountability and traceability, helping stakeholders trust the system’s outputs.