ChatGPT from OpenAI is a conversational chatbot that was recently released in preview mode for research purposes. It takes natural language as input and, depending on what you ask, tries to solve problems, asks follow-up questions or even challenges assertions. Surprisingly, as many security researchers have discovered, it makes a good infosec companion: it handles many tasks quite well, and you interact with it in plain conversational language.

Though this current iteration has limitations, I found that it works as an all-round assistant: it can do a bit of everything but doesn’t excel at any one thing. Still, it offers a lot of potential for future integration with SecOps teams, especially those dealing with scripting, malware analysis and forensics.

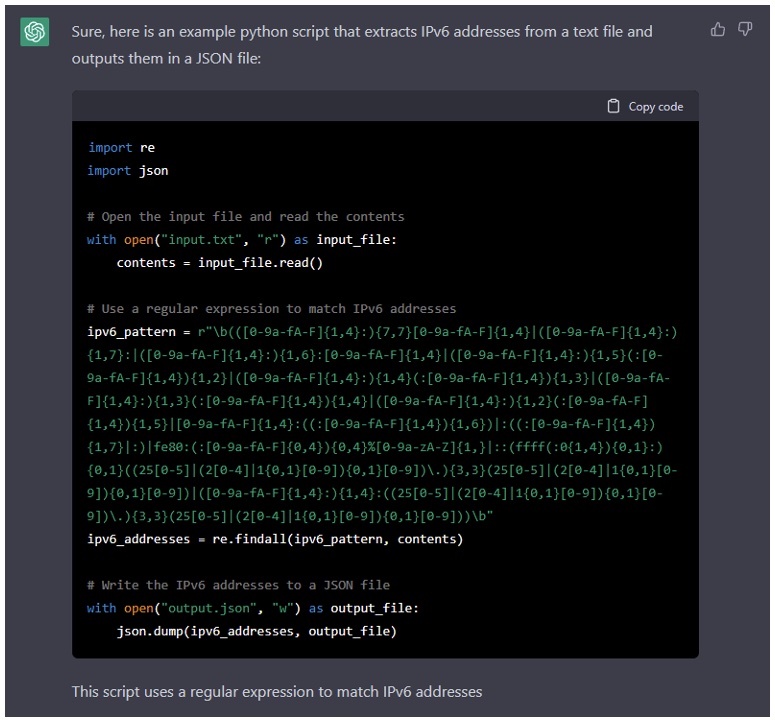

Writing code

You can ask ChatGPT to write code, but the results can be mixed. SecOps analysts often have to process log files, grep for certain patterns and export the matches to gain meaningful insight into an incident or issue. Traditionally this means they need to know a scripting language (e.g., Python), but ChatGPT can write these scripts for them or, even better, do the work directly if they paste in the input.

I’ve listed each query and its output below so you get an idea of how ChatGPT interprets them. There were occasional bugs, and sometimes it didn’t understand what I was asking or couldn’t process the input. Some of this was clearly caused by the sheer volume of requests it’s currently receiving because of its popularity.

First, I asked it to:

“Write a python script that would extract ipv6 addresses from a text file and output them in a json file”

Not bad: a common script you could use to convert logs, parse log files or just grep for certain patterns.
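To make the task concrete, here is a minimal sketch of the kind of script that prompt describes. It is an illustration, not ChatGPT’s actual response; the function names `extract_ipv6` and `convert` and the `ipv6_addresses` JSON key are assumptions chosen for the example. It relies on the standard library’s `ipaddress` module to validate candidates instead of a hand-written IPv6 regex.

```python
import ipaddress
import json
import re
import sys

# Runs of hex digits, colons and dots; real validation is left to ipaddress.
TOKEN = re.compile(r"[0-9A-Fa-f:.]+")


def extract_ipv6(text):
    """Return unique, valid IPv6 addresses in order of first appearance."""
    found = []
    for token in TOKEN.findall(text):
        token = token.strip(".")  # drop sentence punctuation
        if token.count(":") < 2:
            continue
        try:
            address = str(ipaddress.IPv6Address(token))
        except ValueError:
            continue  # timestamps, MAC addresses and other lookalikes
        if address not in found:
            found.append(address)
    return found


def convert(src_path, dst_path):
    """Read a text file and write the addresses it contains as JSON."""
    with open(src_path, encoding="utf-8", errors="replace") as src:
        addresses = extract_ipv6(src.read())
    with open(dst_path, "w", encoding="utf-8") as dst:
        json.dump({"ipv6_addresses": addresses}, dst, indent=2)
    return addresses


# Hypothetical usage: python extract_ipv6.py input.log output.json
if __name__ == "__main__" and len(sys.argv) == 3:
    convert(sys.argv[1], sys.argv[2])
```

Validating with `ipaddress` means strings that merely look like addresses, such as `12:34:56` timestamps or MAC addresses, are discarded rather than exported.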

Next up is converting payloads in a pentesting scenario. I’ve taken shellcode for an iOS exploit from exploit-db (here).

I asked it to convert it into a Python payload:

“Convert this shellcode into Python”

Great! So it can help with adapting payloads, saving you from re-writing and debugging exploits from scratch.
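The mechanical part of such a conversion can be sketched as follows. This is an illustrative sketch only, not the output ChatGPT produced: the helper names are hypothetical, and the bytes used are harmless placeholders (`\x90` and `\xcc`) rather than the Exploit-DB shellcode. It assumes the source payload is written as C-style `\xNN` escapes.

```python
import re


def c_shellcode_to_bytes(c_source):
    """Collect every \\xNN escape found in C source text into a bytes object."""
    pairs = re.findall(r"\\x([0-9a-fA-F]{2})", c_source)
    return bytes(int(pair, 16) for pair in pairs)


def as_python_literal(payload, name="payload", width=8):
    """Format bytes as a Python assignment, `width` bytes per line."""
    lines = []
    for start in range(0, len(payload), width):
        chunk = payload[start:start + width]
        escaped = "".join("\\x{:02x}".format(byte) for byte in chunk)
        lines.append('    b"' + escaped + '"')
    return name + " = (\n" + "\n".join(lines) + "\n)"


# Placeholder input in the style of a C exploit listing (assumed format).
c_listing = r'char code[] = "\x90\x90" "\xcc";'
print(as_python_literal(c_shellcode_to_bytes(c_listing)))
```

The printed text is itself valid Python, so it can be pasted into a script as a `bytes` constant.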

Now for some log analysis. ChatGPT can handle a whole host of formats (LEEF, CEF, Syslog, JSON, XML, etc.), so it can absorb raw data and tell you what happened. This is especially useful if an analyst wants a quick run-through of log data related to an incident. For reference, this sample log file was taken from Juniper.

“What happened in this log file?”

The interesting thing about the log analysis is that it explains the events clearly and concisely in human-readable language, giving all the key information, such as the source and destination of the threat and what the threat was. It also gives useful follow-up information such as hashes, which help if the analyst wants to perform additional analysis by cross-referencing them with threat intelligence sources (e.g., VirusTotal).
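If you want to pull those hashes out yourself before cross-referencing them, a minimal sketch could look like this. It is an assumption-laden illustration: `extract_hashes` is a hypothetical helper, the log line is invented for the example and is not the Juniper sample, and hash types are guessed purely from the length of each hexadecimal run (32 for MD5, 40 for SHA-1, 64 for SHA-256).

```python
import re

# Map the length of a hex string to the hash type it most likely represents.
HASH_LENGTHS = {32: "md5", 40: "sha1", 64: "sha256"}

# Standalone hex runs between 32 and 64 characters long.
HEX_RUN = re.compile(r"\b[0-9a-fA-F]{32,64}\b")


def extract_hashes(log_text):
    """Return {hash_type: [values]} for hex runs that match a known length."""
    hashes = {}
    for match in HEX_RUN.findall(log_text):
        kind = HASH_LENGTHS.get(len(match))
        if kind is None:
            continue  # hex run of an unexpected length, e.g. a session ID
        value = match.lower()
        bucket = hashes.setdefault(kind, [])
        if value not in bucket:
            bucket.append(value)
    return hashes


# Invented log line for illustration only.
line = "action=blocked threat=Trojan md5=" + "a" * 32 + " sha256=" + "B" * 64
print(extract_hashes(line))
```

Length-based detection is a heuristic: any 32-character hex string is reported as an MD5, so the results are candidates to look up, not confirmed indicators.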

Here’s another analysis of a sample log file which processes an attempted command and control (C2) callback from a malware infection:

Again: good human-readable information and a detailed breakdown of everything required for the analyst to decide on what to do next.

Moving away from SecOps for a minute, what about vulnerability analysis within code? You can paste a piece of vulnerable code, and without explaining what it does you can simply ask it if it’s vulnerable to cross-site scripting or any other type of vulnerability. What it won’t do is just answer a vague question like “Is this vulnerable code?” (at least not yet!). This sample was taken from Brightsec and is vulnerable to cross-site scripting:

“Is this code vulnerable to XSS?”

As you can see, it gives a detailed and clear description of why the code is vulnerable, pinpoints the offending parameter and tells you how to fix the issue. What’s more, it re-writes the code for you!
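The usual fix for this class of bug is to encode user-supplied input before it is written into the page. The sketch below illustrates that idea in Python; it is not the Brightsec sample or ChatGPT’s rewrite, and `render_greeting_unsafe` and `render_greeting` are hypothetical functions standing in for a page that echoes a request parameter.

```python
import html


def render_greeting_unsafe(name):
    """Vulnerable: the parameter is placed into the HTML unchanged."""
    return "<p>Hello, {}!</p>".format(name)


def render_greeting(name):
    """Safer: HTML-escape the parameter before it reaches the page."""
    return "<p>Hello, {}!</p>".format(html.escape(name, quote=True))


attack = "<script>alert(1)</script>"
print(render_greeting_unsafe(attack))  # the script tag survives intact
print(render_greeting(attack))         # the tag is rendered as inert text
```

Escaping at output time covers this HTML-body case; values placed inside attributes, URLs or inline JavaScript need encoding appropriate to that context.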

Finally, as ChatGPT processes natural language interactions, it can write lots of things for you, in whatever style. I challenged it to help me with that dreaded infosec task: communicating to employees that they must stop sending passwords via email. As a twist, I asked it to make it “light-hearted”:

The response looks informal and seems to match the tone I was aiming for. Not to mention, the created text explains why sending passwords via email is a bad idea and gives a few alternatives for good password hygiene. All in all, it reduces the busywork related to drafting communications and can generate these in a few seconds for any scenario.

To summarize

ChatGPT has a lot of potential. The current iteration doesn’t connect to the internet, so while it could theoretically do things like scans and DNS lookups, this functionality isn’t available yet. What it can do now is already impressive, especially for businesses that lack certain technical skill sets, where it could go a long way toward covering the shortfall. It can also produce plenty of efficiency savings in day-to-day SecOps and infosec tasks. The impact this will have on the infosec industry will be interesting to observe.