It’s been less than 18 months since the public introduction of ChatGPT, which gained 100 million users in less than two months. Given the hype, you would expect enterprise adoption of generative AI to be significant, but it has been slower than many expected. A recent survey by Telstra and MIT Technology Review showed that while 75% of enterprises tested GenAI last year, only 9% deployed it widely. The primary obstacle? Data privacy and compliance.

This rings true: I’ve spoken with nearly 100 enterprise CISOs in the first half of 2024, and their primary concerns are how to get visibility into employee AI use, how to enforce corporate policies on acceptable AI use, and how to prevent the loss of customer data, intellectual property, and other confidential information.

A great deal of effort has gone into protecting the models themselves, and that is a real problem that needs to be solved. But the related task of protecting user activity around and across models is potentially a larger challenge for privacy and compliance. Whereas model security focuses on preventing bias, model drift, poisoning, and the like, protecting user activity across models centers on visibility and control, as well as the protection of data and users.

Protecting user activity across models

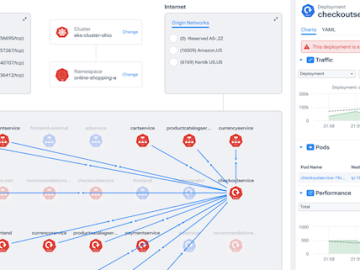

So, what do you need to get visibility and control? Visibility into user activity around internal models is a manageable problem: today, most organizations have stood up very few LLMs, and employee access to them is limited. Gaining visibility into externally hosted AI and AI-enabled destinations is much more difficult.

To get full visibility into both internal and external user activity, you must:

a) Capture all outbound access

b) Make sense of it

For example: What exactly is this employee accessing? Where is it hosted, and where does it store its data? And… is it safe to use? Of course, that only identifies the destination. Next, you’ll need to capture and analyze all employee activity there. What prompts are they entering, and is that okay? What responses do they get back, and are those okay?

During the two months between ChatGPT’s initial release and the 100 million user mark, enterprise employees used it heavily. Source code, financial plans, marketing campaigns, and much more were uploaded as employees tested this new technology. Very few CISOs seem confident that they fully understand what was sent and what is still being sent.
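As a rough illustration of after-the-fact conversation review, the sketch below scans captured prompts for a few kinds of sensitive content. Everything here is an assumption: the `PromptRecord` shape, the detector names, and the regex patterns are hypothetical and far cruder than a real DLP classifier.

```python
import re
from dataclasses import dataclass


@dataclass
class PromptRecord:
    """Hypothetical shape of one captured prompt; real capture formats will differ."""
    user: str
    destination: str
    prompt: str


# Illustrative detectors only; the names and patterns are assumptions, not a standard.
DETECTORS = {
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "source_code": re.compile(r"^\s*(def |class |import |#include\b)", re.MULTILINE),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def review(records):
    """Return (user, destination, sorted detector names) for every prompt that trips a detector."""
    flagged = []
    for rec in records:
        hits = sorted(name for name, pattern in DETECTORS.items() if pattern.search(rec.prompt))
        if hits:
            flagged.append((rec.user, rec.destination, hits))
    return flagged
```

In practice, findings like these would be one input to a broader risk analysis rather than a verdict on their own.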

Even with good visibility, controlling employee activity via policy is not easy. Where does the enforcement mechanism live? In an endpoint agent? A proxy? A gateway? The cloud?

How is an AI acceptable use policy expressed? Consider an AI data access policy: a law or consulting firm might require that LLM data from client A can’t be used to generate answers for client B. A public company’s general counsel might want an AI topic access policy: employees outside of finance and below the VP level can’t ask about earnings information. The chief privacy officer may require an AI intention policy: if you use external GenAI to write a contract, all PII must be redacted before the prompt is sent.
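One way to picture these three policy types is as ordered checks over a request context. The sketch below is a minimal illustration under loud assumptions: the `PromptContext` fields, the VP level of 5, the earnings keyword list, and the two PII patterns are all hypothetical. The topic policy uses one reading of the example: it blocks users who are both outside finance and below VP.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


# Hypothetical request context; in practice these attributes would come from
# identity, matter-management, and intent-classification systems.
@dataclass
class PromptContext:
    department: str
    level: int           # assumed seniority scale where VP == 5
    client: str          # client matter the user is working on
    external: bool       # True if the destination is an external GenAI service
    purpose: str         # e.g. "contract_drafting"; assumed to be classified upstream
    prompt: str
    source_clients: list[str] = field(default_factory=list)  # owners of retrieved context


VP_LEVEL = 5
EARNINGS_TERMS = re.compile(r"\b(earnings|EPS|quarterly results)\b", re.IGNORECASE)
# Illustrative PII patterns only (email addresses, US SSN format); not a complete PII detector.
PII_PATTERNS = [
    re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
]


def evaluate(ctx: PromptContext) -> tuple[str, str]:
    """Apply the three example policies in order; return (action, prompt to forward)."""
    # AI data access policy: context retrieved for client A must not answer for client B.
    if any(owner != ctx.client for owner in ctx.source_clients):
        return ("block", "")
    # AI topic access policy: block earnings questions from users who are
    # both outside finance and below VP level.
    if (EARNINGS_TERMS.search(ctx.prompt)
            and ctx.department != "finance" and ctx.level < VP_LEVEL):
        return ("block", "")
    # AI intention policy: redact PII before an external contract-drafting prompt is sent.
    if ctx.external and ctx.purpose == "contract_drafting":
        redacted = ctx.prompt
        for pattern in PII_PATTERNS:
            redacted = pattern.sub("[REDACTED]", redacted)
        return ("redact" if redacted != ctx.prompt else "allow", redacted)
    return ("allow", ctx.prompt)
```

Ordering matters in this sketch: the blocking policies run before redaction, so a blocked prompt is never forwarded in any form.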

Gaining visibility and control of employee AI use

With nearly 18 months of experience using generative AI, enterprises have started to learn what’s necessary to gain visibility and control of user activity. Whether you build or buy, a solution will need to perform the following functions:

- Build a destination database: First, you’ll need to build and maintain a database of GenAI destinations (i.e., domains and apps with embedded AI). Without this, you can’t do much more than block or allow access based on DNS.

- Capture desired destinations: Next, you’ll need the actual domains that employees are trying to access. There are multiple options for capturing this DNS activity: from the endpoint (via an agent), the firewall, the proxy, a CASB, etc.

- Catalog actual activity: To get visibility, you’ll need to continuously map that employee activity to the destination database to build a catalog of employee activity. Specifically, where are your employees going when it comes to AI? A basic version can run after the fact and provide visibility only into which AI models, chatbots, and similar tools employees are accessing. A more advanced version might add risk analysis of those destinations.

- Capture the conversation: Of course, visibility includes more than just destinations; it includes the actual prompts, data, and responses flowing into and out of those AI destinations. You’ll want to capture those and apply additional risk analysis.

- Apply active enforcement: All of the visibility capabilities above can operate after the fact. If you want to apply acceptable use policies to external AI systems, you’ll need a policy mechanism that can enforce them at inference time. It needs to intercept prompts and apply policies to prevent unwanted data loss, unsafe use, and so on.

- Enforce policy everywhere: Ensure that the policy mechanism applies to both internal and external LLM access, across clouds, LLMs, and security platforms. Since most organizations use a mix of clouds and security products, your policy engine will work better if it’s not restricted to a single platform or cloud.
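The first three functions in the list (a destination database, captured destinations, and an activity catalog) can be sketched roughly as follows. This is a minimal illustration under stated assumptions: the `Destination` fields, the risk ratings, and the `notes-ai.example` entry are hypothetical, and captured DNS activity is assumed to arrive as simple (user, domain) pairs from whichever source you use.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Destination:
    name: str
    category: str      # e.g. "chatbot" or "embedded_ai"
    data_region: str   # where the provider stores data, if known
    risk: str          # "low" | "medium" | "high"; an assumed internal rating


# Hypothetical destination database; entries and ratings are illustrative only.
DESTINATIONS = {
    "chat.openai.com": Destination("ChatGPT", "chatbot", "US", "medium"),
    "notes-ai.example": Destination("NotesAI", "embedded_ai", "unknown", "high"),
}


def lookup(domain):
    """Match a queried domain, or any parent domain above the TLD, against the database."""
    labels = domain.lower().rstrip(".").split(".")
    for i in range(len(labels) - 1):
        candidate = ".".join(labels[i:])
        if candidate in DESTINATIONS:
            return DESTINATIONS[candidate]
    return None


def build_catalog(dns_events):
    """Count (user, destination name, risk) for every captured query that hits a known AI destination."""
    catalog = Counter()
    for user, domain in dns_events:
        dest = lookup(domain)
        if dest is not None:
            catalog[(user, dest.name, dest.risk)] += 1
    return catalog
```

Parent-domain matching means subdomains such as `api.chat.openai.com` map to the same entry; whether that is appropriate depends on how the real destination database is curated.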

State of the market today

The steps above can be complex, but some enterprises have made progress. Larger, more advanced organizations in particular have built controls that perform some of these functions.

I have spoken with a few that have built much of this AI visibility and control from scratch. Others have used a mix of their EDR, SIEM, CASB, proxy, and firewalls to capture data, do some basic blocking, and provide post-facto visibility reporting. And, as with any new security concern, startups are bringing new solutions to market as well. This is a very active and fast-evolving area of enterprise security and privacy.

A final note: occasionally, major IT shifts occur that significantly change the way employees (and customers) use IT. Enterprise web deployment in the late 1990s drove a massive evolution of enterprise infrastructure, as did mobile apps in the 2010s. Both of those computing shifts opened new avenues for data loss and attacks, and each required significant new technology solutions. GenAI looks set to be the next major driver of IT security evolution, and most organizations will be looking for ways to see, control, and protect their users and their data as AI becomes embedded in every process.