Hackers have developed a new attack, dubbed Conversation Overflow, to bypass AI-based security. Learn how the technique fools machine learning tools and what businesses can do to stay protected.

A recent discovery by SlashNext threat researchers reveals a dangerous cyberattack method termed “Conversation Overflow.” This technique involves cloaked emails designed to bypass machine learning (ML) security controls, allowing malicious payloads to infiltrate enterprise networks.

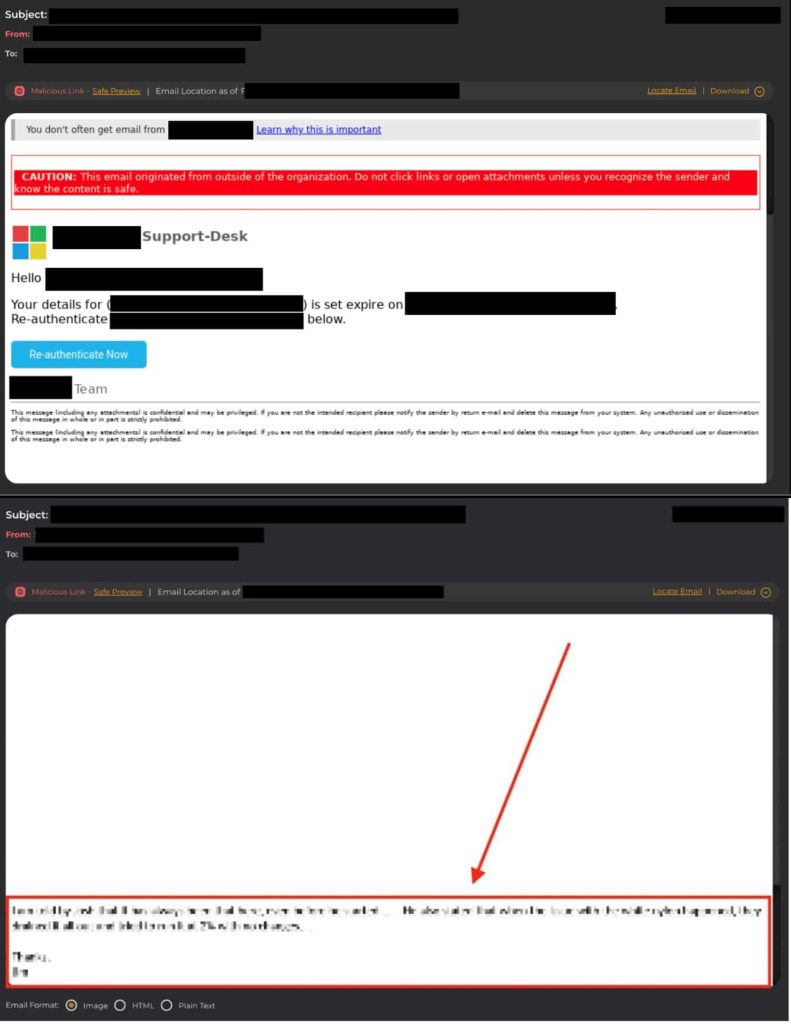

In a typical Conversation Overflow attack, cybercriminals send cloaked emails crafted to trick ML tools into classifying them as harmless. Each email contains two distinct sections: a visible one that prompts the recipient to take an action, such as entering credentials or clicking a link, and a hidden portion filled with innocuous text.

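Threat actors separate these two sections by strategically inserting blank spaces. This exploits the way many ML algorithms work: they often focus on deviations from “known good” communications rather than on identifying malicious content, so the hidden benign text makes the whole message look like normal traffic.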
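To make the mechanism concrete, here is a deliberately simplified Python sketch. It assumes a hypothetical filter that scores a message by the share of suspicious versus benign words across the entire body; the word lists, the threshold, and the function names are illustrative inventions, not SlashNext's findings or any vendor's real model. It shows how innocuous padding, pushed out of the reader's view by a run of blank lines, can dilute the score of an otherwise obvious credential-phishing message.

```python
import re

# Hypothetical word lists for illustration only; real ML filters learn
# far richer features and are not simple keyword counters.
SUSPICIOUS = {"verify", "password", "reauthenticate", "login", "urgent", "credentials"}
BENIGN = {"meeting", "agenda", "thanks", "schedule", "project", "notes", "team", "update"}

# Hypothetical cut-off: scores at or above this are flagged.
THRESHOLD = 0.5


def tokens(text: str) -> list[str]:
    """Split text into lowercase alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def suspicion_score(text: str) -> float:
    """Fraction of recognised (suspicious or benign) tokens that are suspicious.

    A toy stand-in for a model that judges a message by its overall
    resemblance to "known good" mail, averaged over the whole body.
    """
    words = tokens(text)
    bad = sum(w in SUSPICIOUS for w in words)
    good = sum(w in BENIGN for w in words)
    total = bad + good
    return bad / total if total else 0.0


# The part the recipient actually sees: a credential-harvesting lure.
visible = "Urgent: please reauthenticate your login credentials to verify your password."

# The hidden part: bland, "known good"-looking text.
hidden = "Thanks for the meeting notes. The project team will update the agenda and schedule. " * 5

# Blank lines push the padding below what the reader scrolls to,
# but a model that scores the whole body still sees all of it.
overflowed = visible + "\n" * 60 + hidden

for label, body in [("visible only", visible), ("with hidden padding", overflowed)]:
    score = suspicion_score(body)
    verdict = "flag" if score >= THRESHOLD else "allow"
    print(f"{label}: score={score:.2f} -> {verdict}")
```

Run as-is, the visible lure alone scores 1.00 and is flagged, while the padded version scores about 0.13 and is allowed. Production classifiers are far more sophisticated than this; the sketch only illustrates the general idea that content a reader never sees can still shift a model's overall judgement.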

According to SlashNext’s research, shared with Hackread.com ahead of publication on Tuesday, this novel approach poses a significant challenge to cybersecurity defences. Traditional measures rely on databases of “known bad” signatures. ML systems, by contrast, analyze patterns to detect anomalies, which makes them susceptible to manipulation by attackers who mimic legitimate communication styles.

Once a Conversation Overflow email gets past security defences, attackers can follow up with seemingly genuine messages requesting credential reauthentication, particularly targeting high-level executives. The stolen credentials are then sold on dark web forums, which highlights how lucrative this kind of cybercrime is.

SlashNext threat researchers are deeply concerned about what could happen if this attack becomes widespread. They warn that cybercriminals may be actively developing an entirely new toolkit, which could amplify the impact of such attacks and lead to serious consequences for organizations and individuals alike.

“This is not your same old credential harvesting attack, because it is smart enough to confuse certain sophisticated AI and ML engines. From these findings, we should conclude that cyber crooks are morphing their attack techniques in this dawning age of AI security. As a result, we are concerned that this development reveals an entirely new toolkit being refined by criminal hacker groups in real-time today.”

SlashNext

The discovery signals a shift in cybercriminal tactics towards exploiting weaknesses in AI- and ML-driven security platforms. As cybercriminals adapt and refine their techniques, organizations need to remain vigilant and proactive in strengthening their defences.