Note: This is the “text notes” version of my DEF CON 30 Cloud Village Lightning Talk. The talk was not recorded so this is the only public version of it. Many thanks to AWS for reviewing the presentation prior to public discussion.

Countless applications rely on Amazon Web Services’ Simple Notification Service for application-to-application communication such as webhooks and callbacks. To verify the authenticity of these messages, these projects use certificate-based signature validation based on the SigningCertURL value. Unfortunately, a loophole in official AWS SDKs allowed attackers to forge messages to all SNS HTTP subscribers.

Amazon Simple Notification Service (SNS) is one of those technologies that has become so widespread as to be almost foundational in the serverless ecosystem today. The idea itself is not new: publisher-subscriber messaging software has existed for a long time, from Apache Kafka to RabbitMQ. SNS allows different applications to communicate remotely by delivering messages between them. While other messaging software often includes queues, SNS keeps it as simple as possible (AWS sells queue functionality separately as Amazon Simple Queue Service – yeah…). At the same time, it’s a lot more useful than implementing a simple HTTP webhook yourself – SNS supports FIFO messaging, easy scalability, wide publisher/subscriber support, message filtering, and more.

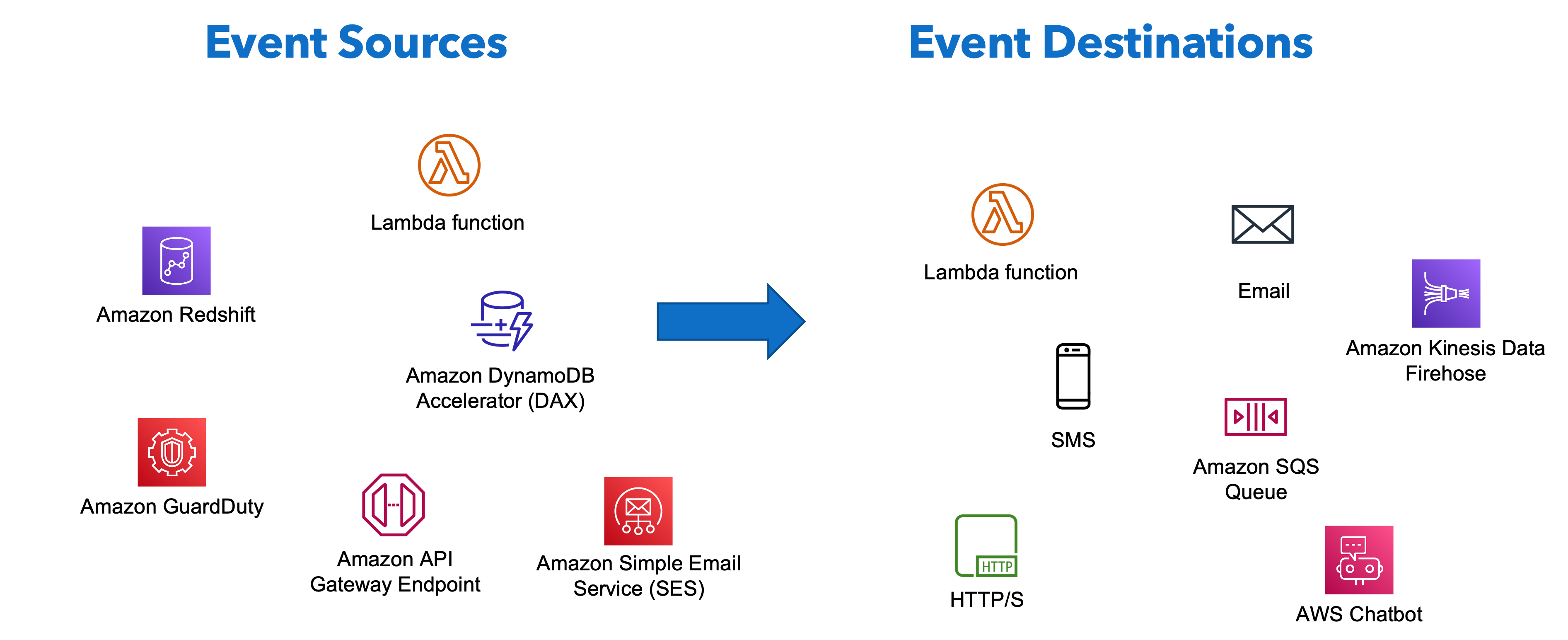

A typical Amazon SNS use case is the application-to-application fanout pattern. For example, an image upload and conversion workflow can be abstracted into an S3 ingest/upload event notification message that’s published to an SNS topic. The SNS topic forwards this message to several different AWS Lambda subscribers that convert the image into different formats and sizes before storing them in separate S3 buckets.

In short, SNS acts as the glue (not to be confused with AWS Glue – yeah…) between various event sources and destinations. Some of the most popular destinations supported by SNS are email, SMS, and HTTP/S – for example, notifying developers if a user has unsubscribed from their app or triggering a webhook workflow.

Of course, behind the scenes SNS is basically a more feature-filled HTTP webhook service. When you configure SNS to publish to an HTTP/S destination, it sends the following request to your endpoint:

    POST / HTTP/1.1
    x-amz-sns-message-type: Notification
    x-amz-sns-message-id: 22b80b92-fdea-4c2c-8f9d-bdfb0c7bf324
    x-amz-sns-topic-arn: arn:aws:sns:us-west-2:123456789012:MyTopic
    x-amz-sns-subscription-arn: arn:aws:sns:us-west-2:123456789012:MyTopic:c9135db0-26c4-47ec-8998-413945fb5a96
    Content-Length: 773
    Content-Type: text/plain; charset=UTF-8
    Host: example.com
    Connection: Keep-Alive
    User-Agent: Amazon Simple Notification Service Agent

    {
      "Type" : "Notification",
      "MessageId" : "22b80b92-fdea-4c2c-8f9d-bdfb0c7bf324",
      "TopicArn" : "arn:aws:sns:us-west-2:123456789012:MyTopic",
      "Subject" : "My First Message",
      "Message" : "Hello world!",
      "Timestamp" : "2012-05-02T00:54:06.655Z",
      "SignatureVersion" : "1",
      "Signature" : "EXAMPLEw6JRN...",
      "SigningCertURL" : "https://sns.us-west-2.amazonaws.com/SimpleNotificationService-f3ecfb7224c7233fe7bb5f59f96de52f.pem",
      "UnsubscribeURL" : "https://sns.us-west-2.amazonaws.com/?Action=Unsubscribe&SubscriptionArn=arn:aws:sns:us-west-2:123456789012:MyTopic:c9135db0-26c4-47ec-8998-413945fb5a96"
    }
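
To make the shape of that request concrete, here is a minimal Python sketch of how a receiver might read it. The function name `parse_sns_request` and the header/body consistency check are my own illustrative choices, not part of any AWS SDK. Note that the body arrives as `text/plain`, so it has to be JSON-decoded by hand.

```python
import json


def parse_sns_request(headers, body):
    """Return (message_type, message_dict) for a raw SNS HTTP POST.

    Hypothetical helper: it only parses the request, it does NOT
    authenticate it in any way.
    """
    # HTTP header names are case-insensitive, so normalise before lookup.
    normalised = {name.lower(): value for name, value in headers.items()}
    header_type = normalised.get("x-amz-sns-message-type")

    # SNS sends JSON with a text/plain content type, so decode it manually.
    message = json.loads(body)

    if header_type != message.get("Type"):
        raise ValueError("header and body disagree on the message type")
    return header_type, message


example_headers = {"x-amz-sns-message-type": "Notification"}
example_body = (
    '{"Type": "Notification", "Subject": "My First Message", '
    '"Message": "Hello world!"}'
)
message_type, message = parse_sns_request(example_headers, example_body)
print(message_type, message["Message"])
```

Every value parsed here, headers included, is fully controlled by whoever sent the request.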

Depending on your event source, the subject and message contain the data you need. However, this raises the question: if your webhook endpoint is public, how do you trust that the requests you are receiving are coming from Amazon SNS? After all:

“Almost no one got JWT tokens and webhooks right on the first try. With webhooks, people almost always forgot to authenticate incoming requests…”

Ken Kantzer, “Learnings from 5 years of tech startup code audits”

This is where the Signature and SigningCertURL values come into play. The AWS documentation has a straightforward description of the verification algorithm; relevant name-value pairs (such as Subject and Message) are extracted from the JSON body and arranged in a canonical format, then hashed using SHA1 to create the derived hash value.
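
As a rough sketch of that first step, the canonical string and its SHA1 digest could be built as follows. The field order is taken from the AWS sample script quoted later in this post; the helper names `string_to_sign` and `derived_hash` are mine, and the sample values come from the request shown earlier.

```python
import hashlib

# Field order for a Notification, as used by the AWS sample script.
NOTIFICATION_FIELDS = ["Message", "MessageId", "Subject", "Timestamp", "TopicArn", "Type"]


def string_to_sign(message, fields=NOTIFICATION_FIELDS):
    """Arrange the signed name-value pairs as name, newline, value, newline."""
    parts = []
    for key in fields:
        if key in message:  # fields missing from the message are skipped
            parts.append(key + "\n" + message[key] + "\n")
    return "".join(parts)


def derived_hash(message):
    """SHA1 digest (hex) of the canonical string, for SignatureVersion 1."""
    return hashlib.sha1(string_to_sign(message).encode("utf-8")).hexdigest()


sample = {
    "Type": "Notification",
    "MessageId": "22b80b92-fdea-4c2c-8f9d-bdfb0c7bf324",
    "TopicArn": "arn:aws:sns:us-west-2:123456789012:MyTopic",
    "Subject": "My First Message",
    "Message": "Hello world!",
    "Timestamp": "2012-05-02T00:54:06.655Z",
}
print(string_to_sign(sample))
print(derived_hash(sample))
```

Fields outside this list, such as SigningCertURL itself, are not covered by the signature.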

Next, the Signature value is base64-decoded, then decrypted using the public key downloaded from SigningCertURL to create the asserted hash value.

Finally, the asserted and derived hash values are compared to ensure that they match. This is a standard signature verification scheme which Computerphile explains very well.
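
The asserted-versus-derived comparison can be illustrated with a toy example. This is textbook RSA with a deliberately tiny key and no padding, purely to show the flow; real SNS signatures use a full-size key and a proper padding scheme, and all names below are hypothetical.

```python
import hashlib

# Textbook RSA key built from the primes 61 and 53.
# Illustration only: far too small to be secure.
N, E, D = 3233, 17, 2753


def digest_as_int(text):
    """SHA1 digest reduced modulo N so that it fits the toy key."""
    return int.from_bytes(hashlib.sha1(text.encode("utf-8")).digest(), "big") % N


def sign(text):
    """Performed by the private key holder."""
    return pow(digest_as_int(text), D, N)


def verify(text, signature):
    asserted = pow(signature, E, N)  # recover the hash using the public key
    derived = digest_as_int(text)    # hash the message that was received
    return asserted == derived


text = "Message\nHello world!\n"
signature = sign(text)
print(verify(text, signature))            # True
print(verify(text, (signature + 1) % N))  # False
```

The check only proves that the message was signed by whoever holds the private key matching the public key used in `verify`. It says nothing about who that key holder is.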

At this point you might have spotted a possible weakness: we are supposed to use the certificate at SigningCertURL to generate the supposedly correct hash value, but how do we trust SigningCertURL? Ay, there’s the rub…

The AWS knowledge center provides this answer:

To help prevent spoofing attacks, make sure that you do the following when verifying Amazon SNS message signatures:

- Always use HTTPS to get the certificate from Amazon SNS.

- Validate the authenticity of the certificate.

- Verify that the certificate was sent from Amazon SNS.

- (When possible) Use one of the supported AWS SDKs for Amazon SNS to validate and verify messages.

Okay, so it tells us to “Verify that the certificate was sent from Amazon SNS” without telling us how. It also provides an example Python script that performs the signature validation *without verifying SigningCertURL*!

    import base64
    from M2Crypto import EVP, RSA, X509
    import requests

    cache = dict()

    # Extract the name-value pairs from the JSON document in the body of the HTTP POST request that Amazon SNS sent to your endpoint.
    def processMessage(messagePayload):
        print ("Start!")
        if (messagePayload["SignatureVersion"] != "1"):
            print("Unexpected signature version. Unable to verify signature.")
            return False
        messagePayload["TopicArn"] = messagePayload["TopicArn"].replace(" ", "")
        signatureFields = fieldsForSignature(messagePayload["Type"])
        print(signatureFields)
        strToSign = getSignatureFields(messagePayload, signatureFields)
        print(strToSign)
        certStr = getCert(messagePayload)

        print("Printing the cert")
        print(certStr.text)
        print("Using M2Crypto")
        # Get the X509 certificate that Amazon SNS used to sign the message.
        certificateSNS = X509.load_cert_string(certStr.text)
        #Extract the public key from the certificate.
        public_keySNS = certificateSNS.get_pubkey()
        public_keySNS.reset_context(md = "sha1")
        # Generate the derived hash value of the Amazon SNS message.
        # Generate the asserted hash value of the Amazon SNS message.
        public_keySNS.verify_init()
        public_keySNS.verify_update(strToSign.encode())
        # Decode the Signature value
        decoded_signature = base64.b64decode(messagePayload["Signature"])
        # Verify the authenticity and integrity of the Amazon SNS message
        verification_result = public_keySNS.verify_final(decoded_signature)
        print("verification_result", verification_result)

        if verification_result != 1:
            print("Signature could not be verified")
            return False
        else:
            return True

    # Obtain the fields for signature based on message type.
    def fieldsForSignature(type):
        if (type == "SubscriptionConfirmation" or type == "UnsubscribeConfirmation"):
            return ["Message", "MessageId", "SubscribeURL", "Timestamp", "Token", "TopicArn", "Type"]
        elif (type == "Notification"):
            return ["Message", "MessageId", "Subject", "Timestamp", "TopicArn", "Type"]
        else:
            return []

    # Create the string to sign.
    def getSignatureFields(messagePayload, signatureFields):
        signatureStr = ""
        for key in signatureFields:
            if key in messagePayload:
                signatureStr += (key + "\n" + messagePayload[key] + "\n")
        return signatureStr

    #**** Certificate Fetching ****
    #Certificate caching
    def get_cert_from_server(url):
        print("Fetching cert from server...")
        response = requests.get(url)
        return response

    def get_cert(url):
        print("Getting cert...")
        if url not in cache:
            cache[url] = get_cert_from_server(url)
        return cache[url]

    def getCert(messagePayload):
        certLoc = messagePayload["SigningCertURL"].replace(" ", "")
        print("Cert location", certLoc)
        responseCert = get_cert(certLoc)
        return responseCert

The SigningCertURL/url value is simply fetched directly without any verification step. Meanwhile, if we turn to Stack Overflow, the top Google result advises verifying that the URL matches the format sns.${region}.amazonaws.com. Sensible enough, but there are various subtleties to take note of.

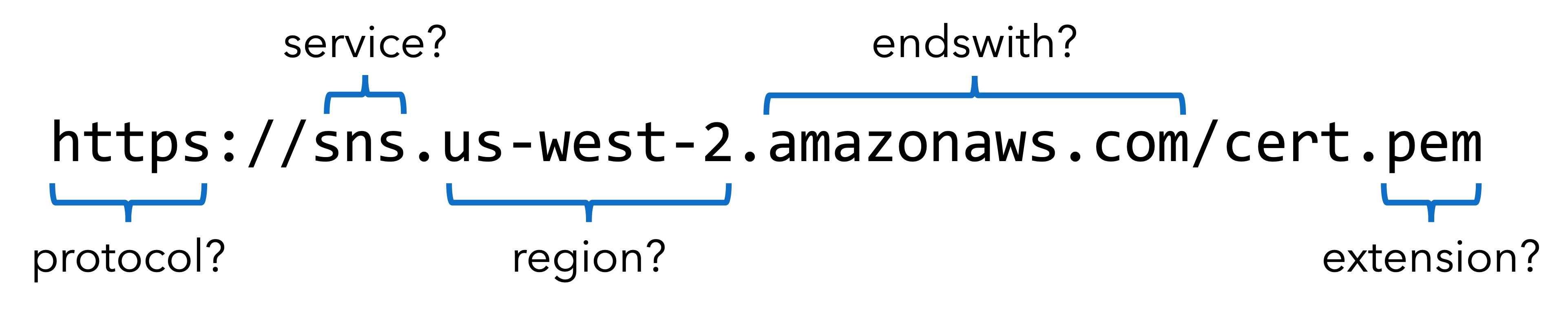

For example, a developer may want to verify that the URL starts with https://, includes sns as the first slug in the subdomain, includes a valid region string in the second slug, then ends with the amazonaws.com domain. Furthermore, the developer may want to ensure that the path ends with the .pem extension.

On the surface, this looks sufficient to ensure that the URL indeed belongs to one of the default AWS certificate locations. However, there is one critical loophole: Amazon S3.

More specifically, Amazon S3 bucket resources can be accessed at https://, which easily passes the checks described above! By uploading their own generated public key at https://mysns.s3-us-west-2.amazonaws.com/evil.pem, an attacker can easily forge a signed SNS message to the victim’s webhook endpoint.
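
To see why, here is a hypothetical Python version of the developer's checks described above. The function name, the short region list, and the exact way each rule is coded are assumptions for illustration, not code from any particular project.

```python
from urllib.parse import urlparse

# Hypothetical, deliberately short list of "valid" regions.
HYPOTHETICAL_REGIONS = ("us-east-1", "us-west-2", "eu-west-1")


def naive_is_sns_cert_url(cert_url):
    """Flawed check: every rule sounds reasonable, but S3 URLs still pass."""
    parsed = urlparse(cert_url)
    host = parsed.hostname or ""
    labels = host.split(".")
    return (
        parsed.scheme == "https"
        and len(labels) >= 4
        and "sns" in labels[0]  # first slug *includes* sns
        and any(region in labels[1] for region in HYPOTHETICAL_REGIONS)
        and host.endswith(".amazonaws.com")
        and parsed.path.endswith(".pem")
    )


genuine = "https://sns.us-west-2.amazonaws.com/SimpleNotificationService-f3ecfb7224c7233fe7bb5f59f96de52f.pem"
evil = "https://mysns.s3-us-west-2.amazonaws.com/evil.pem"
print(naive_is_sns_cert_url(genuine))  # True
print(naive_is_sns_cert_url(evil))     # True
```

Both URLs pass, because a bucket name containing sns and a regional S3 hostname satisfy every "includes" rule.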

In a quick open-source code review of custom SNS SigningCertURL validation routines, on top of such broken algorithms, I also found weak regexes such as https?://sns.(.+).amazonaws.com (bypassed by http://sns.evil.s3.amazonaws.com/evil.pem) and sns.[a-z0-9-]+.amazonaws.com (bypassed by snsthisismyamazonaws.com).
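
Both bypasses are easy to reproduce with Python's `re` module. How each project applied its pattern (for example `match` versus `search`, or against the full URL versus the host) is an assumption here; I use `re.match` on the strings quoted above.

```python
import re

# The two weak patterns quoted above, copied as-is (dots unescaped).
URL_PATTERN = r"https?://sns.(.+).amazonaws.com"
HOST_PATTERN = r"sns.[a-z0-9-]+.amazonaws.com"

# "(.+)" happily swallows "evil.s3", so an S3 bucket URL over plain HTTP passes.
print(bool(re.match(URL_PATTERN, "http://sns.evil.s3.amazonaws.com/evil.pem")))  # True

# An unescaped "." matches any character, so an unrelated domain passes.
print(bool(re.match(HOST_PATTERN, "snsthisismyamazonaws.com")))  # True

# Escaping the dots and matching the whole host closes the second bypass.
ESCAPED_HOST = re.compile(r"sns\.[a-z0-9-]+\.amazonaws\.com")
print(ESCAPED_HOST.fullmatch("snsthisismyamazonaws.com") is None)  # True
```

Escaping and anchoring fix only these particular bypasses; they do not make a pattern safe on their own.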

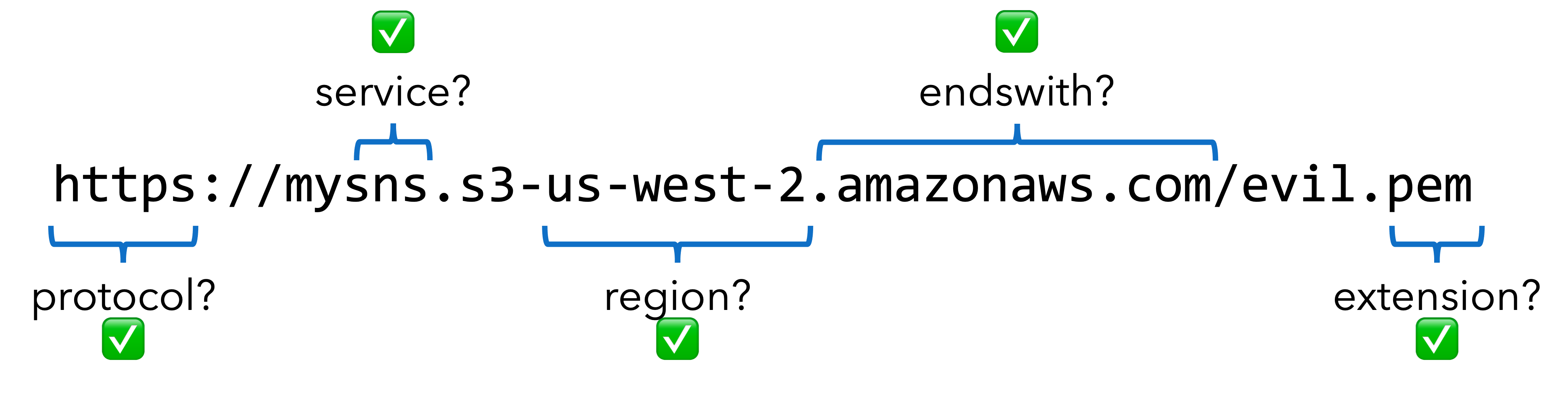

Okay, so URL verification is hard. However, AWS helpfully provides AWS SDKs for Amazon SNS to validate and verify messages. For example, AWS publishes the sns-validator package on NPM. The package code uses the following regex:

    defaultHostPattern = /^sns\.[a-zA-Z0-9\-]{3,}\.amazonaws\.com(\.cn)?$/,

    // hostPattern defaults to defaultHostPattern
    var validateUrl = function (urlToValidate, hostPattern) {
        var parsed = url.parse(urlToValidate);

        return parsed.protocol === 'https:'
            && parsed.path.substr(-4) === '.pem'
            && hostPattern.test(parsed.host);
    };

^sns\. checks that the first slug matches rather than includes sns; this blocks bucket names such as mysns. However, the rest of the regex still allows for the .s3-us-west-2.amazonaws.com subdomain suffix. Fortunately for an attacker, s3-us-west-2.amazonaws.com works (worked?) just the same as s3.amazonaws.com, and unlike the two-character s3 it passes the minimum 3 character requirement for the second slug in the domain ([a-zA-Z0-9\-]{3,}). This leaves the sns S3 bucket as the only possible match for this regex.
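
A rough Python port of this check, with the dots in the host pattern escaped, shows which hosts get through. It is an approximation rather than the SDK's code: Python's `urlparse` and Node's `url.parse` differ in details such as how the query string and credentials are split out.

```python
import re
from urllib.parse import urlparse

DEFAULT_HOST_PATTERN = re.compile(r"^sns\.[a-zA-Z0-9\-]{3,}\.amazonaws\.com(\.cn)?$")


def validate_url(url_to_validate, host_pattern=DEFAULT_HOST_PATTERN):
    """Approximate port of the validateUrl function shown above."""
    parsed = urlparse(url_to_validate)
    return (
        parsed.scheme == "https"
        and parsed.path.endswith(".pem")
        and host_pattern.search(parsed.netloc) is not None
    )


candidates = [
    "https://sns.us-west-2.amazonaws.com/SimpleNotificationService-f3ecfb7224c7233fe7bb5f59f96de52f.pem",
    "https://mysns.s3-us-west-2.amazonaws.com/evil.pem",  # blocked by ^sns\.
    "https://sns.s3.amazonaws.com/evil.pem",              # blocked: "s3" is only 2 characters
    "https://sns.s3-us-west-2.amazonaws.com/evil.pem",    # accepted
]
for candidate in candidates:
    print(validate_url(candidate), candidate)
```

Only the genuine SNS URL and the sns bucket on the regional S3 hostname are accepted.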

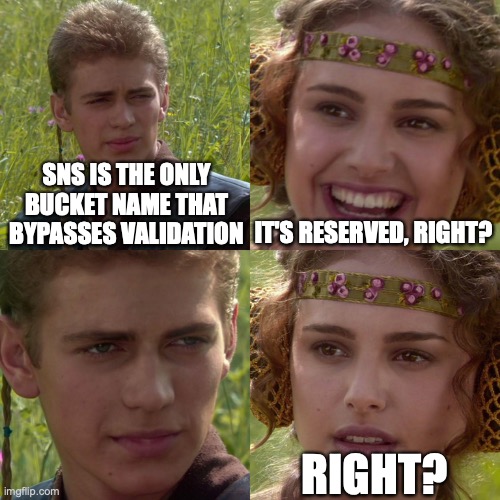

Surely such a critical bucket name would be reserved, right?

- Problem 1: sns is not reserved.

- Problem 2: sns is a publicly-readable bucket.

- Problem 3: sns is a publicly-writable bucket.

Through this loophole, an attacker could forge messages to any official SDK SNS validator user. The impact depends on the application’s webhook handler. For example, Firefox Monitor, a tool that allows users to register their emails to monitor for online data breaches, has a publicly-accessible SNS webhook endpoint at https://monitor.firefox.com/ses/notification that uses sns-validator to validate incoming POST messages:

    'use strict'

    const MessageValidator = require('sns-validator')

    const DB = require('../db/DB')
    const mozlog = require('../log')

    const validator = new MessageValidator()
    const log = mozlog('controllers.ses')

    async function notification (req, res) {
      const message = JSON.parse(req.body)
      return new Promise((resolve, reject) => {
        validator.validate(message, async (err, message) => {
          if (err) {
            log.error('notification', { err })
            const body = 'Access denied. ' + err.message
            res.status(401).send(body)
            return reject(body)
          }

          await handleNotification(message)

          res.status(200).json(
            { status: 'OK' }
          )
          return resolve('OK')
        })
      })
    }

After validating the message, it then deletes users from the database using values taken from the validated message:

    async function handleNotification (notification) {
      log.info('received-SNS', { id: notification.MessageId })
      const message = JSON.parse(notification.Message)
      if (message.hasOwnProperty('eventType')) {
        await handleSESMessage(message)
      }
      if (message.hasOwnProperty('event')) {
        await handleFxAMessage(message)
      }
    }

    async function handleFxAMessage (message) {
      switch (message.event) {
        case 'delete':
          await handleDeleteMessage(message)
          break
        default:
          log.info('unhandled-event', { event: message.event })
      }
    }

    async function handleDeleteMessage (message) {
      await DB.deleteSubscriberByFxAUID(message.uid)
    }

    async function handleSESMessage (message) {
      switch (message.eventType) {
        case 'Bounce':
          await handleBounceMessage(message)
          break
        case 'Complaint':
          await handleComplaintMessage(message)
          break
        default:
          log.info('unhandled-eventType', { type: message.eventType })
      }
    }

    async function handleBounceMessage (message) {
      const bounce = message.bounce
      if (bounce.bounceType === 'Permanent') {
        return await removeSubscribersFromDB(bounce.bouncedRecipients)
      }
    }

    async function handleComplaintMessage (message) {
      const complaint = message.complaint
      return await removeSubscribersFromDB(complaint.complainedRecipients)
    }

    async function removeSubscribersFromDB (recipients) {
      for (const recipient of recipients) {
        await DB.removeEmail(recipient.emailAddress)
      }
    }

As such, an attacker could delete arbitrary users from the Firefox Monitor database by forging SNS messages.

After reporting, I was impressed by AWS’ response. They quickly resolved the vulnerability through an elegant solution on their infrastructure end, preventing future subdomain namespace clashes from S3. By avoiding the need to patch the regex from the SDK side, AWS prevented triggering thousands of alerts and ensured backward compatibility. Creating such S3 bucket names is also no longer possible. Nevertheless, this solution applies only to AWS SDK users; if developers roll their own SigningCertURL validation algorithm (or fail to validate it at all), attackers can forge ahead…
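
For developers who do end up writing their own check, one conservative option is to compare the host against an exact allowlist instead of a pattern. The sketch below is my own suggestion, not AWS guidance: the function name is hypothetical, the allowlist must come from your own configuration (never from the incoming message), and the path pattern is an assumption based on the example URL earlier in this post.

```python
import re
from urllib.parse import urlparse

# Assumption: this application only subscribes to topics in us-west-2.
ALLOWED_CERT_HOSTS = frozenset({"sns.us-west-2.amazonaws.com"})

# Assumption: certificate paths look like the example shown earlier.
CERT_PATH = re.compile(r"/SimpleNotificationService-[0-9a-f]+\.pem")


def is_trusted_cert_url(cert_url, allowed_hosts=ALLOWED_CERT_HOSTS):
    """Accept only HTTPS URLs on an exactly matching, pre-configured host."""
    parsed = urlparse(cert_url)
    return (
        parsed.scheme == "https"
        and parsed.netloc in allowed_hosts  # exact match: no port, no userinfo
        and CERT_PATH.fullmatch(parsed.path) is not None
    )


genuine = "https://sns.us-west-2.amazonaws.com/SimpleNotificationService-f3ecfb7224c7233fe7bb5f59f96de52f.pem"
print(is_trusted_cert_url(genuine))                                            # True
print(is_trusted_cert_url("https://sns.s3-us-west-2.amazonaws.com/evil.pem"))  # False
```

An exact allowlist trades flexibility for predictability: a new region means a configuration change, but there is no pattern for an attacker to satisfy creatively.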

Overall, this was a fruitful journey of code review and Reading the Funky Manual on the plumbing of today’s serverless cloud. Take a look at AWS’ excellent developer documentation for any service – you might just find something interesting.