On internal pens, it’s really common for me to get access to the Domain Controller and dump password hashes for all AD users. A lot of tools make this super easy, like smart_hashdump from Meterpreter, or secretsdump.py from Impacket.

But occasionally, I end up with a hard copy of the NTDS.dit file and need to manually extract the information offline. This came up today and I decided to document the process. I’m not going to go into the details on how to obtain the files, but am going to assume I have everything I need already offline:

- a copy of NTDS.dit (ntds.dit)
- a copy of the SYSTEM registry hive (systemhive)


Update: @agsolino, the creator of Impacket, just told me on Twitter that secretsdump.py has a LOCAL option that makes this incredibly easy! Can’t believe I never realized that, but it makes sense that Impacket saves me time and trouble again 😉

If you have the NTDS.dit file and the SYSTEM hive, simply use the secretsdump.py script to extract all the NT hashes:

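If you want to script this, here is a minimal Python sketch that assembles the same offline secretsdump.py call. It assumes the Impacket script is installed on your PATH as secretsdump.py and uses its -ntds and -system options plus the LOCAL target. The optional -outputfile base name is included for convenience. The file names ntds.dit and systemhive are the ones from the list above.

```python
import shutil
import subprocess
from typing import List, Optional


def build_secretsdump_cmd(ntds: str, system_hive: str,
                          outfile: Optional[str] = None) -> List[str]:
    """Assemble an offline secretsdump.py command line (no DC connection needed)."""
    # Assumes the Impacket script is on PATH under the name "secretsdump.py".
    cmd = ["secretsdump.py", "-ntds", ntds, "-system", system_hive]
    if outfile:
        # secretsdump treats this as a base name and adds its own extensions.
        cmd += ["-outputfile", outfile]
    # "LOCAL" makes secretsdump parse the local files instead of a remote target.
    cmd.append("LOCAL")
    return cmd


def run_secretsdump(ntds: str, system_hive: str,
                    outfile: Optional[str] = None) -> None:
    """Run the offline dump, raising if the script is missing or exits non-zero."""
    if shutil.which("secretsdump.py") is None:
        raise FileNotFoundError("secretsdump.py not found on PATH")
    subprocess.run(build_secretsdump_cmd(ntds, system_hive, outfile), check=True)


if __name__ == "__main__":
    print(" ".join(build_secretsdump_cmd("ntds.dit", "systemhive")))
```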

It takes a little while, but it will spit out nicely formatted NTLM hashes for all the domain users.
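secretsdump.py prints each NTDS account in the pwdump-style layout domain\user:RID:LMhash:NThash:::, mixed in with status lines. The sketch below assumes that layout, and assumes the domain prefix may be missing. It keeps only the account lines and turns them into user:NThash pairs, which hashcat can read with its --username flag. Any sample lines you test it with are your own; the hashes in the test are the well-known empty LM value and the NT hash of an empty password.

```python
from typing import Dict, Iterable, List, Optional


def parse_ntds_line(line: str) -> Optional[Dict[str, str]]:
    """Split a 'domain\\user:rid:lmhash:nthash:::' line into its fields."""
    parts = line.strip().split(":")
    if len(parts) < 4 or not parts[1].isdigit():
        return None  # banner, status message, Kerberos key line, etc.
    domain, _, user = parts[0].rpartition("\\")
    return {
        "domain": domain,  # empty string when the line has no domain prefix
        "user": user,
        "rid": parts[1],
        "lm": parts[2],
        "nt": parts[3],
    }


def nt_hashes(lines: Iterable[str]) -> List[str]:
    """Return 'user:nthash' pairs for every account line in the output."""
    out = []
    for line in lines:
        rec = parse_ntds_line(line)
        if rec is not None:
            out.append(f"{rec['user']}:{rec['nt']}")
    return out
```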

This is definitely the easiest method. If you want to go through the exercise of exporting the tables and using ntdsxtract, the following steps can be taken too:

The first step is to extract the tables from the NTDS.dit file using esedbexport, which is part of libesedb.

To install, download the latest release of source code from the releases page:

https://github.com/libyal/libesedb/releases

I used the latest pre-release “libesedb-experimental-20170121”.

Download and extract the source code:

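The extract step can also be done with Python's tarfile module. This is a minimal sketch that assumes you have already downloaded the release tarball from the releases page. The archive name libesedb-experimental-20170121.tar.gz is only a guess based on the release name above, so check the real file name. The function returns the top-level directory it unpacked, so you don't have to guess that name either.

```python
import tarfile
from pathlib import Path


def extract_source(archive: str, dest: str = ".") -> Path:
    """Unpack a .tar.gz source release and return its top-level directory."""
    with tarfile.open(archive, "r:gz") as tar:
        members = tar.getmembers()
        # Refuse archives containing absolute paths or ".." components.
        for m in members:
            if m.name.startswith("/") or ".." in Path(m.name).parts:
                raise ValueError(f"unsafe path in archive: {m.name}")
        tar.extractall(dest)
        top = members[0].name.split("/")[0]
    return Path(dest) / top


if __name__ == "__main__":
    # Hypothetical archive name; adjust to whatever you downloaded.
    print(extract_source("libesedb-experimental-20170121.tar.gz"))
```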

Now install the requirements for building:


And configure, make and install libesedb:


If all went well, you should have the export tool available at /usr/local/bin/esedbexport.
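The build and the final check can be scripted too. This is a minimal sketch of the usual autotools sequence (./configure, make, make install). It assumes you run it against the extracted source directory and that make install needs sudo to write under /usr/local. The runner parameter is a hypothetical hook so the steps can be inspected without executing anything. The second function looks for esedbexport on PATH and then at the /usr/local/bin location mentioned above.

```python
import shutil
import subprocess
from pathlib import Path
from typing import Callable, List, Optional

BUILD_STEPS: List[List[str]] = [
    ["./configure"],
    ["make"],
    ["sudo", "make", "install"],  # default prefix is /usr/local
]


def build_libesedb(src_dir: str, runner: Callable = subprocess.run) -> None:
    """Run each build step in src_dir; check=True stops at the first failure."""
    for step in BUILD_STEPS:
        runner(step, cwd=src_dir, check=True)


def find_esedbexport() -> Optional[str]:
    """Return the esedbexport path from PATH, else /usr/local/bin if present."""
    found = shutil.which("esedbexport")
    if found:
        return found
    default = Path("/usr/local/bin/esedbexport")
    return str(default) if default.is_file() else None
```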

Dumping Tables

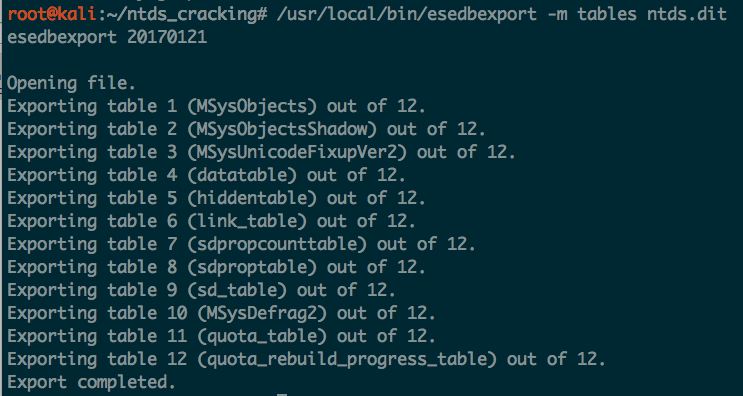

Now that the tool is installed, use it to dump the tables from the ntds.dit file. This will create a new directory called ntds.dit.export containing the dumped tables:

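If you call the export from Python, a sketch might look like this. It assumes esedbexport is on PATH and uses its -m tables export mode to dump only the tables. It also assumes that, with no explicit target, esedbexport names its output directory after the source file plus .export, which matches the ntds.dit.export directory described above. The call simply blocks until the long-running export finishes.

```python
import subprocess
from pathlib import Path
from typing import List


def build_export_cmd(ntds_path: str) -> List[str]:
    """Build an esedbexport command that exports only the database tables."""
    return ["esedbexport", "-m", "tables", ntds_path]


def export_tables(ntds_path: str) -> Path:
    """Run the export (this can take a long time) and return the expected output dir."""
    subprocess.run(build_export_cmd(ntds_path), check=True)
    # Assumed default naming: "<source file>.export"
    return Path(ntds_path + ".export")
```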

This step can take quite a while (20-30 minutes for me). At the end, though, you should see that it successfully extracted the tables.

The two important tables are the datatable and link_table, and both will be in the ./ntds.dit.export/ directory.
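The exported files carry a numeric suffix after the table name, for example datatable.3, and that number can differ between databases. This small sketch assumes the "<table>.<number>" naming scheme and finds both tables in the export directory regardless of the suffix. It raises an error if either table is missing.

```python
from pathlib import Path
from typing import Dict, Sequence


def find_tables(export_dir: str,
                names: Sequence[str] = ("datatable", "link_table")) -> Dict[str, Path]:
    """Map each wanted table name to its exported file in export_dir."""
    found: Dict[str, Path] = {}
    for entry in Path(export_dir).iterdir():
        stem, dot, suffix = entry.name.rpartition(".")
        # Only accept "<table>.<number>" files for the tables we care about.
        if dot and stem in names and suffix.isdigit():
            found[stem] = entry
    missing = [n for n in names if n not in found]
    if missing:
        raise FileNotFoundError(f"tables not found in {export_dir}: {missing}")
    return found
```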

Extracting Domain Info with ntdsxtract

Once the tables are extracted, there is a great set of Python tools, ntdsxtract, that can be used to interact with the data and dump valuable information.

Clone the repository, and the Python scripts should be usable as-is. Alternatively, they can be installed system-wide:


Dumping User Info and Password Hashes

The ntdsxtract tool dsusers.py can be used to dump user information and NT/LM password hashes from an extracted table. It requires three things:

- datatable

- link_table

- system hive

The syntax is:


The --pwdformat option outputs the hashes in John the Ripper format (john), oclHashcat format (ocl), or OphCrack format (ophc).

It will also print all the user information to stdout, so it’s helpful to tee the output to another file.
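If you drive ntdsxtract from Python instead of a shell pipeline, you can reproduce the same tee behavior. This minimal sketch streams a command's stdout line by line to the console and writes each line to a log file at the same time. The log file name is whatever you pass in.

```python
import subprocess
import sys
from typing import List


def run_and_tee(cmd: List[str], logfile: str) -> int:
    """Run cmd, echoing each stdout line to the console and writing it to logfile."""
    with open(logfile, "w", encoding="utf-8") as log, subprocess.Popen(
        cmd, stdout=subprocess.PIPE, text=True, bufsize=1
    ) as proc:
        for line in proc.stdout:
            sys.stdout.write(line)
            log.write(line)
    # Leaving the Popen context waits for the process, so returncode is set.
    return proc.returncode
```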

To extract all NT and LM hashes in oclHashcat format and save them in “ntout” and “lmout” in the “output” directory:

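As a rough guide, here is a sketch that assembles a dsusers.py call matching the oclHashcat / ntout / lmout / output example above. The positional order (datatable, link_table, working directory) follows the requirements listed earlier. The option names --syshive, --passwordhashes, --ntoutfile and --lmoutfile are assumptions based on ntdsxtract's documentation, so confirm them against dsusers.py's own usage output. The format choices come from the --pwdformat description above.

```python
from typing import List

PWD_FORMATS = ("john", "ocl", "ophc")  # John the Ripper, oclHashcat, OphCrack


def build_dsusers_cmd(datatable: str, link_table: str, workdir: str,
                      system_hive: str, pwdformat: str = "ocl",
                      ntout: str = "ntout", lmout: str = "lmout") -> List[str]:
    """Assemble a dsusers.py command that also extracts NT/LM hashes (assumed flags)."""
    if pwdformat not in PWD_FORMATS:
        raise ValueError(f"pwdformat must be one of {PWD_FORMATS}")
    return [
        "dsusers.py", datatable, link_table, workdir,
        "--syshive", system_hive,
        "--passwordhashes",
        "--pwdformat", pwdformat,
        "--ntoutfile", ntout,
        "--lmoutfile", lmout,
    ]
```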

After it runs, the NT hashes will be output in oclHashcat-ready format:


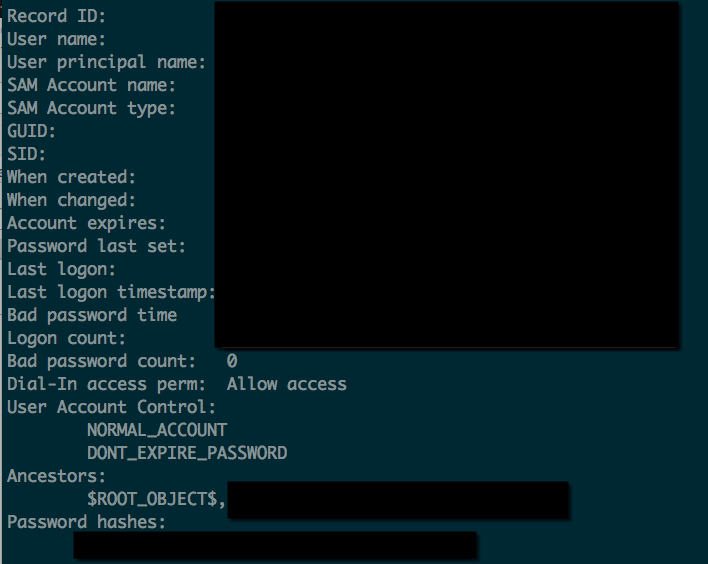

Looking at the file we tee’d into, we can see other information about the users, such as SID, when the password was created, last logons, etc.

To crack the NT hashes with hashcat, use mode 1000:

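Mode 1000 is hashcat's NTLM mode. An NT hash is the MD4 digest of the password encoded as UTF-16LE. The sketch below shows that relationship and runs a tiny dictionary check against a set of NT hashes. It implements MD4 directly from RFC 1320 because hashlib's md4 is unavailable on many OpenSSL 3 builds. This is for illustration only and is far slower than hashcat.

```python
import struct
from typing import Dict, Iterable, Set

_MASK = 0xFFFFFFFF
_R3_ORDER = (0, 8, 4, 12, 2, 10, 6, 14, 1, 9, 5, 13, 3, 11, 7, 15)


def _rotl(x: int, n: int) -> int:
    x &= _MASK
    return ((x << n) | (x >> (32 - n))) & _MASK


def md4(data: bytes) -> bytes:
    """MD4 digest as specified in RFC 1320."""
    bit_len = (len(data) * 8) & 0xFFFFFFFFFFFFFFFF
    msg = bytearray(data) + b"\x80"
    msg += b"\x00" * ((56 - len(msg) % 64) % 64)  # pad to 56 mod 64 bytes
    msg += struct.pack("<Q", bit_len)             # then append the bit length
    h = [0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476]
    for off in range(0, len(msg), 64):
        x = struct.unpack("<16I", bytes(msg[off:off + 64]))
        a, b, c, d = h
        # Each step updates the first register, then rotates the tuple so the
        # next register to update comes first (ABCD -> DABC -> CDAB -> BCDA).
        for i in range(16):  # round 1: F = (x & y) | (~x & z)
            a, b, c, d = d, _rotl(a + ((b & c) | (~b & d)) + x[i],
                                  (3, 7, 11, 19)[i % 4]), b, c
        for i in range(16):  # round 2: majority function G, constant 0x5A827999
            k = (i % 4) * 4 + i // 4
            a, b, c, d = d, _rotl(a + ((b & c) | (b & d) | (c & d)) + x[k] + 0x5A827999,
                                  (3, 5, 9, 13)[i % 4]), b, c
        for i in range(16):  # round 3: H = x ^ y ^ z, constant 0x6ED9EBA1
            a, b, c, d = d, _rotl(a + (b ^ c ^ d) + x[_R3_ORDER[i]] + 0x6ED9EBA1,
                                  (3, 9, 11, 15)[i % 4]), b, c
        h = [(v + w) & _MASK for v, w in zip(h, (a, b, c, d))]
    return struct.pack("<4I", *h)


def nt_hash(password: str) -> str:
    """NT hash (hashcat mode 1000): MD4 over the UTF-16LE password bytes."""
    return md4(password.encode("utf-16-le")).hex()


def dictionary_check(words: Iterable[str], targets: Set[str]) -> Dict[str, str]:
    """Return {nt_hash: password} for every word whose NT hash is in targets."""
    wanted = {t.lower() for t in targets}
    cracked: Dict[str, str] = {}
    for word in words:
        digest = nt_hash(word)
        if digest in wanted:
            cracked[digest] = word
    return cracked
```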

Bonus: Extracting Domain Computer Info

Ntdsxtract also has a tool to extract domain computer information from the dumped tables. This can be useful for generating target lists offline.

To use it, supply the datatable, an output directory, and a CSV file to write to:


It generates a nice CSV of all computers in the domain, with the following columns:

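Because the point of this tool is an offline target list, here is a sketch that assembles the dscomputers.py call and then pulls one column out of the resulting CSV. The --csvoutfile option name is an assumption, so check it against dscomputers.py's usage text. The column name you pass as host_column (for example a hypothetical "Name" column) and the comma delimiter are also assumptions, so match them to the header row of your CSV. Duplicates are dropped case-insensitively and file order is kept.

```python
import csv
from typing import List


def build_dscomputers_cmd(datatable: str, workdir: str, csvfile: str) -> List[str]:
    """Assemble a dscomputers.py call (option name assumed; verify with its usage text)."""
    return ["dscomputers.py", datatable, workdir, "--csvoutfile", csvfile]


def target_list(csv_path: str, host_column: str, delimiter: str = ",") -> List[str]:
    """Return unique, non-empty values from host_column, in file order."""
    seen, hosts = set(), []
    with open(csv_path, newline="", encoding="utf-8", errors="replace") as f:
        for row in csv.DictReader(f, delimiter=delimiter):
            host = (row.get(host_column) or "").strip()
            if host and host.lower() not in seen:
                seen.add(host.lower())
                hosts.append(host)
    return hosts
```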

It’s a lot easier and faster to just use secretsdump.py or other authenticated methods of domain reconnaissance to dump user info, password hashes, etc.

But if you end up with a copy of the NTDS.dit file and the SYSTEM hive and want to extract info offline, use this guide.

Hope this helps someone out there!

-ropnop