Bing Chat is rolling out a new feature: Visual Search in Chat.

The feature combines OpenAI’s GPT-4 model with image search capabilities to offer a more interactive way of browsing the web.

With Visual Search in Chat, users can upload pictures and start web searches based on what’s in those pictures. This could be a photo taken on holiday, or an image of what’s inside your fridge – Bing Chat can analyze these images and provide useful information based on them.

The tool understands the image context and can answer related questions, changing the way users find information and use the web.

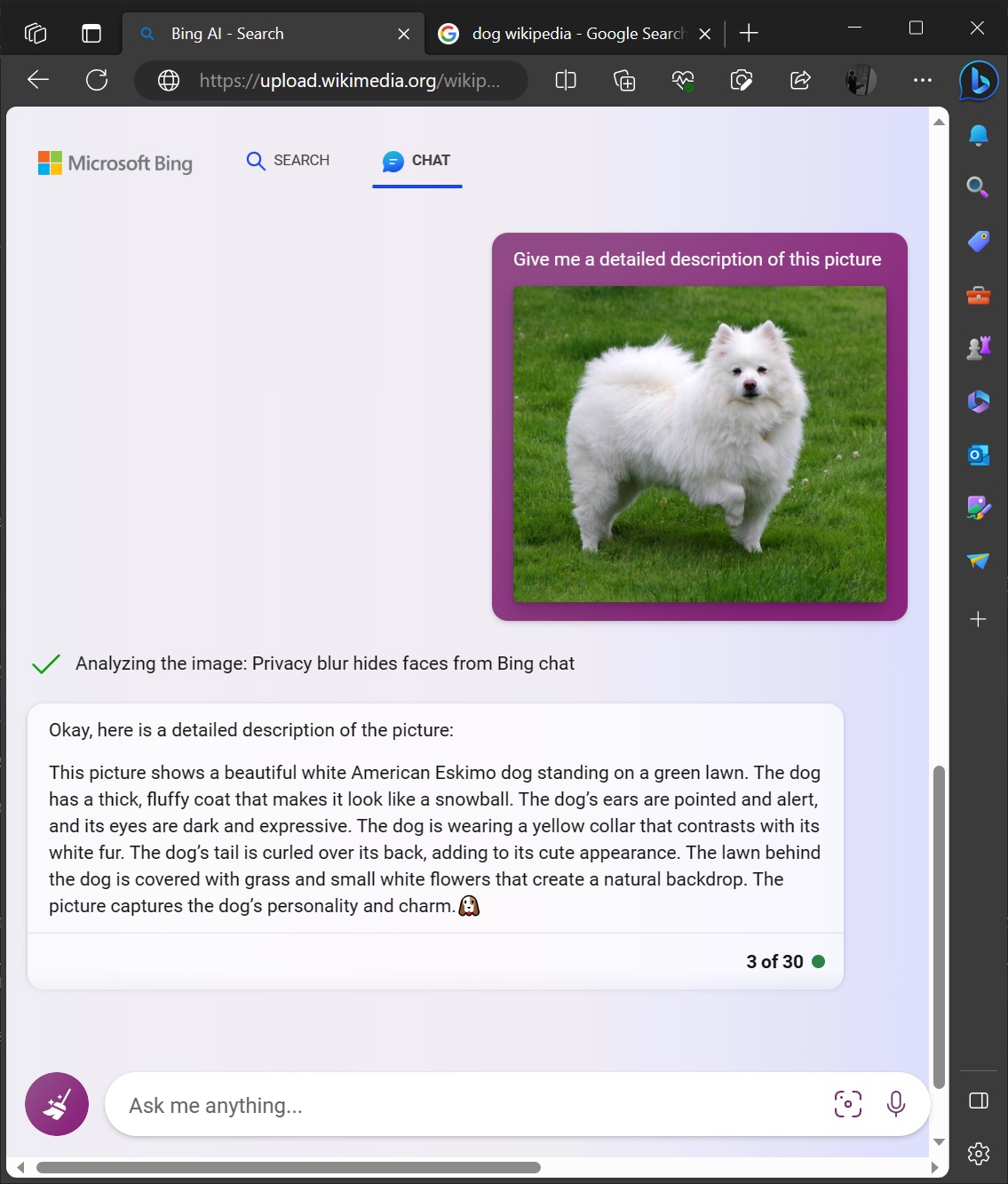

In our tests, Bing Chat showed promising results in accurately understanding images.

For example, when we uploaded a picture of a dog from Wikipedia, Bing Chat described it accurately: “This picture shows a beautiful white American Eskimo dog standing on a green lawn. The dog has a thick, fluffy coat that makes it look like a snowball. The dog’s ears are pointed and alert, and its eyes are dark and expressive…”

The AI recognized the dog’s breed and described the scene in detail, covering the setting, the coat, the ears, and the eyes.

The feature is now available on desktop and in Bing’s mobile app, and will soon be added to Bing Chat Enterprise. It is a notable step forward for smart search tools and shows how AI is changing everyday interactions with the web.