Artificial intelligence is transforming industries from finance and healthcare to entertainment and cybersecurity. As AI adoption grows, so do the risks to its integrity. Cybercriminals are targeting AI models to manipulate them and to steal or exploit sensitive data. From adversarial attacks that corrupt AI decision-making to large-scale data breaches, the security landscape is more complex than ever.

The very nature of AI, which relies on massive datasets, cloud computing power and decentralized processing, creates vulnerabilities. Securing AI systems is not just about protecting data; it is also about reliability, trust and ethical use.

This article looks at the measures AI platforms take to secure their offerings, with insights from OORT and Filecoin, which are creating new security models for AI infrastructure.

The Growing Threats to AI

AI is an attractive target for cybercriminals. AI models process sensitive data, from financial transactions to medical records, which makes them a treasure trove for attackers. Breaches not only expose confidential information but also raise ethical concerns about privacy violations and data misuse.

Among the biggest security risks are data breaches, model theft and infrastructure vulnerabilities. AI models rely on proprietary datasets, and unauthorized access to these datasets can cause financial and reputational damage. Attackers can steal AI models outright or feed them adversarial data that manipulates their outputs and leads to incorrect decisions. Centralized AI cloud services are single points of failure: if one is taken down, every service that depends on it is disrupted.
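
To make the adversarial-data threat concrete, here is a minimal sketch of a fast-gradient-sign (FGSM-style) perturbation against a toy linear classifier. The weights, bias, input and the budget `eps` are hypothetical values chosen for illustration; real attacks target far larger models, but the principle is the same: a small, targeted change to each feature can flip the model's decision.

```python
# Toy illustration of an FGSM-style adversarial perturbation.
# All weights, inputs and the eps budget below are hypothetical.


def score(w: list[float], b: float, x: list[float]) -> float:
    # Linear decision function: a positive score means class 1, otherwise class 0.
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_linear(w: list[float], x: list[float], eps: float, towards_class_1: bool) -> list[float]:
    # For a linear model the gradient of the score with respect to x is simply w,
    # so FGSM shifts every feature by eps along sign(w) (or against it).
    direction = 1.0 if towards_class_1 else -1.0
    return [xi + direction * eps * sign(wi) for wi, xi in zip(w, x)]


w = [0.8, -0.5, 0.3]  # hypothetical trained weights
b = -0.1
x = [0.5, 0.2, 0.1]   # clean input, scored as class 1
x_adv = fgsm_linear(w, x, eps=0.2, towards_class_1=False)

print(score(w, b, x) > 0)      # True: the clean input is class 1
print(score(w, b, x_adv) > 0)  # False: each feature moved by at most 0.2, yet the class flips
```

Each feature moves by only 0.2, but because every shift pushes the score in the same direction, the total drop is `eps * sum(|w|)` = 0.2 × 1.6 = 0.32, enough to carry the score from 0.23 to below zero.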

To address these risks, many AI platforms are moving away from traditional centralized models toward decentralized architectures. By distributing AI workloads and data across multiple nodes, companies can increase security, reduce the risk of failures and build more robust AI-driven ecosystems.

How These Two Decentralized AI Brands Tackle Cybersecurity

AI platforms use different strategies to protect their systems from threats such as hacking, data leaks and unauthorized access. Strong security measures help protect sensitive information and keep AI services running smoothly and securely. Let's take a look.

OORT: Protecting Data with Blockchain

OORT secures its holistic AI offering with blockchain and decentralization to build a more powerful cloud. One of its key patented innovations is the Proof of Honesty (PoH) algorithm, which is designed to make AI computations verifiable and tamper-proof.

Unlike traditional cloud systems, which rely on centralized servers that present an easy target, OORT distributes data across a decentralized network, greatly reducing the risk of breaches or unauthorized access. Even if one part of the network is compromised, the rest remains secure, so continuity and reliability are maintained.

To add more security, OORT uses the Olympus Protocol, a DAG-based (directed acyclic graph) system that also makes transactions faster, cheaper and more scalable. Instead of miners, it relies on trusted committees to validate transactions and secure the network. This allows for zero fees, high speed and easy interoperability with other chains, making it a good fit for Web3.
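
The committee model can be illustrated with a generic Byzantine-fault-tolerant quorum check. This is a minimal sketch, not the Olympus Protocol's actual rules: the committee size, the member names, the 2f + 1 threshold and the use of HMAC as a stand-in for real digital signatures are all assumptions made to keep the example short and dependency-free.

```python
import hashlib
import hmac

# Hypothetical four-member committee. Each member holds a secret key; HMAC-SHA256
# stands in for real public-key signatures purely to keep the sketch stdlib-only.
COMMITTEE_KEYS = {f"member-{i}": f"secret-{i}".encode() for i in range(4)}


def member_sign(member: str, tx: bytes) -> bytes:
    return hmac.new(COMMITTEE_KEYS[member], tx, hashlib.sha256).digest()


def quorum_reached(tx: bytes, approvals: dict[str, bytes]) -> bool:
    # Count only approvals from known members whose signature actually verifies.
    valid = sum(
        1
        for member, sig in approvals.items()
        if member in COMMITTEE_KEYS and hmac.compare_digest(sig, member_sign(member, tx))
    )
    # Classic BFT bound: with n = 3f + 1 members, up to f may be faulty,
    # so a transaction needs at least 2f + 1 valid approvals.
    n = len(COMMITTEE_KEYS)
    f = (n - 1) // 3
    return valid >= 2 * f + 1


tx = b"transfer:alice->bob:10"
three = {m: member_sign(m, tx) for m in ("member-0", "member-1", "member-2")}
two = {m: member_sign(m, tx) for m in ("member-0", "member-1")}
forged = {**two, "member-3": b"\x00" * 32}  # an invalid signature does not count

print(quorum_reached(tx, three))   # True
print(quorum_reached(tx, two))     # False
print(quorum_reached(tx, forged))  # False
```

With four members the sketch tolerates one faulty member; a forged approval adds nothing, so an attacker must actually control enough honest-looking keys to reach the threshold.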

Because the blockchain is immutable, any attempt to alter recorded data leaves a trail, so attackers can't cover their tracks.
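
Why tampering leaves a trail can be shown with a minimal hash chain: each record stores the hash of the one before it, so changing any earlier record breaks the links after it. This sketch assumes SHA-256 and a JSON encoding of each block and omits consensus and signatures entirely; it illustrates the tamper-evidence property, not OORT's ledger format.

```python
import hashlib
import json
from typing import Optional

GENESIS = "0" * 64  # placeholder predecessor for the first block


def block_hash(prev_hash: str, data: str) -> str:
    # Each block's hash commits to its predecessor's hash and its own data.
    return hashlib.sha256(json.dumps([prev_hash, data]).encode()).hexdigest()


def build_chain(records: list[str]) -> list[dict]:
    chain, prev = [], GENESIS
    for data in records:
        h = block_hash(prev, data)
        chain.append({"prev": prev, "data": data, "hash": h})
        prev = h
    return chain


def first_broken_block(chain: list[dict]) -> Optional[int]:
    """Return the index of the first inconsistent block, or None if the chain is intact."""
    prev = GENESIS
    for i, block in enumerate(chain):
        if block["prev"] != prev or block["hash"] != block_hash(prev, block["data"]):
            return i
        prev = block["hash"]
    return None


chain = build_chain(["model v1 registered", "dataset digest recorded", "model v2 registered"])
print(first_broken_block(chain))  # None

chain[1]["data"] = "dataset digest swapped"  # tamper with a middle record
print(first_broken_block(chain))  # 1

# Recomputing block 1's hash just moves the break to block 2, whose "prev" no longer matches.
chain[1]["hash"] = block_hash(chain[1]["prev"], chain[1]["data"])
print(first_broken_block(chain))  # 2
```

To hide the edit, an attacker would have to rewrite every later block as well, which on a shared ledger means convincing the rest of the network to accept the rewritten history.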

Additionally, OORT recently partnered with DeTaSECURE to strengthen AI security and data integrity with advanced threat intelligence. The goal is to fortify decentralized AI infrastructure against cyber threats and to support trustless, verifiable AI models in Web3.

By combining blockchain verification with distributed validation, OORT creates a trustless AI infrastructure in which users don't have to rely on a single entity to verify computations. Nodes across the network validate AI processes, which helps keep results fair, accurate and secure.
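
One simple way to let network nodes cross-check an AI computation is redundant execution with majority voting: several nodes run the same inference, and a result is accepted only if a strict majority produce the same output. This is a generic technique swapped in for illustration; it is not OORT's patented Proof of Honesty algorithm, whose internals are not described here. The node functions, the rounding precision and the honest-majority assumption are all hypothetical.

```python
import hashlib
from collections import Counter
from typing import Callable, Optional

Node = Callable[[list[float]], list[float]]


def output_digest(output: list[float]) -> str:
    # Round before hashing so tiny floating-point differences between honest
    # nodes are not mistaken for disagreement (6 decimals is an assumption).
    return hashlib.sha256(repr([round(v, 6) for v in output]).encode()).hexdigest()


def verify_by_majority(nodes: list[Node], x: list[float]) -> Optional[list[float]]:
    results = [node(x) for node in nodes]
    digests = [output_digest(r) for r in results]
    digest, votes = Counter(digests).most_common(1)[0]
    if votes * 2 <= len(nodes):  # no strict majority agrees -> reject
        return None
    return results[digests.index(digest)]


def honest_node(x: list[float]) -> list[float]:
    return [2.0 * v + 1.0 for v in x]  # stand-in for a model's forward pass


def faulty_node(x: list[float]) -> list[float]:
    return [0.0 for _ in x]  # a compromised node returning a fabricated result


print(verify_by_majority([honest_node, honest_node, faulty_node], [1.0, 2.0]))  # [3.0, 5.0]
print(verify_by_majority([honest_node, faulty_node], [1.0, 2.0]))  # None
```

This only works while honest nodes are in the majority; if most nodes collude, the fabricated result wins, which is why schemes like this are usually combined with other safeguards.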

This is particularly useful in high-trust industries such as finance, healthcare and autonomous systems, where AI-driven decisions are critical. Through these mechanisms, OORT secures AI applications, reduces risk and provides a safer environment for advanced AI solutions.

Filecoin: Eliminating Single Points of Failure

AI models need large datasets, which are often stored in the cloud. Traditional cloud storage is centralized, which creates a single point of failure. Decentralized storage is a more secure option.

Decentralized storage is key for AI because it eliminates single points of failure and limits the impact of any one cyberattack. It uses cryptographic proofs to ensure data integrity and authenticity, and it lets users control encryption and access permissions.
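
The integrity guarantee can be sketched with content addressing: data is stored under a hash of its own bytes, so a reader can check that what comes back is exactly what was stored. This minimal example uses a bare SHA-256 hex digest and an in-memory dict as hypothetical stand-ins for the content identifiers (CIDs) and storage providers of a real network.

```python
import hashlib


def content_id(data: bytes) -> str:
    # Simplified content identifier: the SHA-256 digest of the data itself.
    return hashlib.sha256(data).hexdigest()


def put(store: dict[str, bytes], data: bytes) -> str:
    cid = content_id(data)
    store[cid] = data
    return cid


def get_verified(store: dict[str, bytes], cid: str) -> bytes:
    # Re-hash on retrieval: any modification by the storage side changes the digest.
    data = store[cid]
    if content_id(data) != cid:
        raise ValueError(f"integrity check failed for {cid[:12]}")
    return data


store: dict[str, bytes] = {}  # hypothetical untrusted storage provider
dataset = b"label,feature\n1,0.5\n0,0.1\n"
cid = put(store, dataset)
assert get_verified(store, cid) == dataset

store[cid] = b"label,feature\n1,0.9\n0,0.1\n"  # provider silently alters the data
try:
    get_verified(store, cid)
except ValueError as err:
    print(err)
```

Because the identifier is derived from the content, the client does not need to trust the provider: a single changed byte produces a different digest and the retrieval is rejected.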

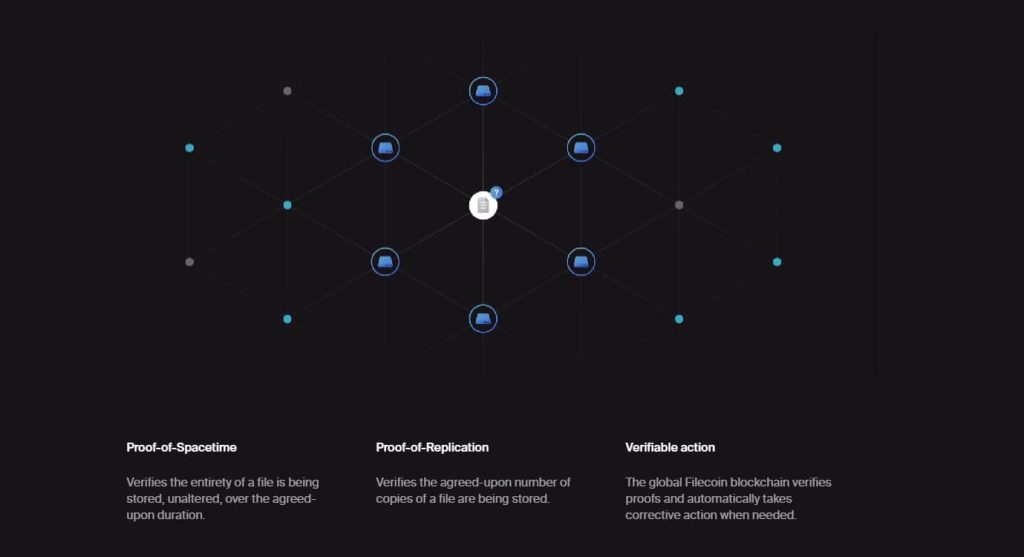

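Filecoin is a decentralized storage network that provides secure and scalable data storage. It secures AI-related data with two cryptographic proofs: Proof-of-Replication, which shows that a storage provider holds a unique copy of the data, and Proof-of-Spacetime, which shows that the provider keeps storing it over time. Together they help guarantee data integrity and guard against unauthorized modifications.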
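
Filecoin's actual proofs are far more involved (they involve sealing data into unique replicas and zk-SNARKs), but the core idea of proving storage can be sketched with a Merkle-tree challenge. The client keeps only a 32-byte root; later, a verifier asks for a randomly chosen chunk, and the provider must return that chunk together with the sibling hashes linking it to the root. This is a simplified proof-of-storage sketch under those assumptions, not Filecoin's Proof-of-Replication or Proof-of-Spacetime; the chunk contents are hypothetical.

```python
import hashlib
import secrets


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_levels(chunks: list[bytes]) -> list[list[bytes]]:
    # Build the tree bottom-up; a level with an odd count pairs its last node with itself.
    level = [h(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        level = [h(padded[i] + padded[i + 1]) for i in range(0, len(padded), 2)]
        levels.append(level)
    return levels


def prove(levels: list[list[bytes]], index: int) -> list[bytes]:
    # Provider side: collect the sibling hash at each level, from leaf to root.
    path = []
    for level in levels[:-1]:
        sibling = index ^ 1
        path.append(level[sibling] if sibling < len(level) else level[index])
        index //= 2
    return path


def verify(root: bytes, chunk: bytes, index: int, path: list[bytes]) -> bool:
    # Verifier side: rebuild the root from the chunk and its sibling path.
    node = h(chunk)
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


chunks = [f"training-shard-{i}".encode() for i in range(5)]  # hypothetical dataset
levels = merkle_levels(chunks)  # held by the storage provider
root = levels[-1][0]            # the only thing the client needs to keep

challenge = secrets.randbelow(len(chunks))  # verifier picks a random chunk
proof = prove(levels, challenge)
print(verify(root, chunks[challenge], challenge, proof))  # True
print(verify(root, b"forged chunk", challenge, proof))    # False
```

Because the challenge index is unpredictable, a provider that discarded part of the data will fail some fraction of challenges; repeating challenges over time is the intuition behind proving storage across time.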

By distributing AI datasets across multiple storage providers, Filecoin avoids concentrating them in a single centralized store that one breach or outage could compromise.
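
One way to spread a dataset so that losing any single provider does not take it offline is to split it into shards and place several replicas of each shard on different providers. The sketch below uses rendezvous (highest-random-weight) hashing to choose those providers deterministically. The provider names, shard IDs and replica count are hypothetical, and this placement strategy is an illustration, not how Filecoin clients actually select providers for storage deals.

```python
import hashlib


def pick_providers(shard_id: str, providers: list[str], replicas: int) -> list[str]:
    # Rendezvous (highest-random-weight) hashing: score every provider against the
    # shard and keep the top `replicas`, giving each shard several distinct homes.
    def weight(provider: str) -> int:
        digest = hashlib.sha256(f"{shard_id}:{provider}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    return sorted(providers, key=weight, reverse=True)[:replicas]


providers = ["provider-a", "provider-b", "provider-c", "provider-d", "provider-e"]
placement = {f"shard-{i}": pick_providers(f"shard-{i}", providers, replicas=3) for i in range(4)}
for shard, hosts in placement.items():
    print(shard, hosts)

# If a provider drops out, only shards it hosted gain a new replica; the others are unaffected.
survivors = [p for p in providers if p != "provider-a"]
rebalanced = {s: pick_providers(s, survivors, replicas=3) for s in placement}
```

With three replicas per shard, any one provider can fail and every shard still has two live copies, and rebalancing moves only the data that provider held.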

Client-controlled encryption allows users to encrypt their data before storing it, so only authorized parties can access it. By using decentralized storage, AI platforms can reduce their dependence on vulnerable centralized cloud providers and build more resilient infrastructure.

The Future of AI and Cybersecurity

As AI adoption grows, so will the sophistication of attacks on AI systems. Solving these security challenges requires continuous innovation and collaboration among AI developers, security experts and regulatory bodies.

Several trends are shaping AI security. Federated learning trains AI models across decentralized devices without sharing raw data, which improves privacy. Blockchain-powered AI security uses smart contracts and distributed ledgers to ensure transparency and prevent unauthorized modifications. AI itself is being used to detect security threats in real time, making systems more resilient against attacks.
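
Federated learning is easiest to see in its simplest form, Federated Averaging (FedAvg): each client trains on its own data and sends back only updated model weights, which the server averages in proportion to each client's sample count. This minimal sketch assumes a one-parameter linear model `y = w * x`, plain gradient descent and two hypothetical clients; real deployments typically add secure aggregation, client sampling and much larger models.

```python
def local_update(w: float, data: list[tuple[float, float]], lr: float = 0.05, epochs: int = 20) -> float:
    # Plain gradient descent on mean squared error for the model y = w * x.
    # The raw (x, y) pairs are only used here, i.e. they never leave the client.
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(w_global: float, clients: list[list[tuple[float, float]]], rounds: int = 10) -> float:
    for _ in range(rounds):
        updates = [(local_update(w_global, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        # The server only sees model weights, averaged by each client's sample count.
        w_global = sum(w * n for w, n in updates) / total
    return w_global


# Hypothetical clients whose private data all follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]
w_final = fed_avg(0.0, clients)
print(round(w_final, 4))  # 3.0
```

The server recovers the shared pattern (a slope of 3) without ever receiving a single data point, which is the privacy property the paragraph describes.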

As cyber threats grow more sophisticated, AI platforms need to take a proactive approach to security if they are to be trusted and reliable. Companies need to recognize that security is not an afterthought but a foundation of AI innovation.

Whether through decentralized infrastructure, encryption protocols or secure AI training environments, the future of AI security depends on resilience, transparency and cutting-edge technology. By building security in from the start, AI developers and companies can create systems that are both intelligent and hardened against threats.