Thorough, independent tests are a vital resource for analyzing a provider’s ability to guard an organization against increasingly sophisticated threats. And perhaps no assessment is more widely trusted than the annual MITRE Engenuity ATT&CK Evaluation.

This testing is critical because it’s virtually impossible to evaluate cybersecurity vendors based on their own performance claims. Along with vendor reference checks and proof of value (POV) evaluations, which are live trials, the MITRE results add objective input for holistically assessing cybersecurity vendors.

Let’s dive into the 2023 MITRE ATT&CK Evaluation results. In this blog, we’ll unpack MITRE’s methodology for testing security vendors against real-world threats, offer our interpretation of the results, and identify the top takeaways emerging from Cynet’s evaluation.

How does MITRE Engenuity test vendors during the evaluation?

The MITRE ATT&CK Evaluation is performed by MITRE Engenuity and tests endpoint protection solutions against a simulated attack sequence based on real-life approaches taken by well-known advanced persistent threat (APT) groups. The 2023 MITRE ATT&CK Evaluation tested 31 vendor solutions by emulating the attack sequences of Turla, a sophisticated Russia-based threat group known to have infected victims in over 45 countries.

An important caveat is that MITRE does not rank or score vendor results. Instead, the raw test data is published along with some basic online comparison tools. Buyers then use that data to evaluate the vendors based on their organization’s unique priorities and needs. The participating vendors’ interpretations of the results are just that — their interpretations.

So, how do you interpret the results?

That’s a great question — one that a lot of people are asking themselves right now. The MITRE ATT&CK Evaluation results aren’t presented in a format that many of us are used to digesting (looking at you, magical graph with quadrants).

Independent researchers often declare “winners” to lighten the cognitive load of figuring out which vendors are the top performers. In this case, identifying the “best” vendor is subjective. If you don’t know what to look for, that can feel like a hassle, especially if you’re already frustrated with trying to assess which security vendor is the right fit for your organization.

With these disclaimers issued, let’s now review the results themselves to compare and contrast how participating vendors performed against Turla.

MITRE ATT&CK Results Summary

The following tables present Cynet’s analysis and calculation of all vendor MITRE ATT&CK test results for the most important measurements: Overall Visibility, Detection Accuracy, and Overall Performance. There are a lot of other ways to look at the MITRE results, but we consider these to be most indicative of a solution’s ability to detect threats.

Overall Visibility is the total number of attack sub-steps detected, out of all 143 sub-steps. Cynet defines Detection Quality as the percentage of attack sub-steps that included “Analytic Detections,” meaning those that identify the tactic (why an activity may be happening) or the technique (both why and how the activity is happening).

Additionally, it’s important to look at how each solution performed before the vendor adjusted configuration settings after missing a threat. MITRE allows vendors to reconfigure their systems to attempt to detect threats that they missed or to improve the information they supply for a detection. In the real world, we don’t have the luxury of reconfiguring our systems after a missed or poor detection, so the more realistic measure is detections before configuration changes are implemented.
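For readers who want to run this kind of calculation themselves, here is a minimal sketch of how the two measurements above could be computed from per-sub-step detection records. The `Detection` record, the category strings, and the `summarize` function are hypothetical names chosen for illustration; they do not reflect MITRE’s published data format or Cynet’s actual tooling, and the sample data is made up.

```python
from dataclasses import dataclass

# Categories counted as "analytic" under the definition above:
# detections that identify the tactic or the technique.
ANALYTIC = {"tactic", "technique"}


@dataclass(frozen=True)
class Detection:
    """Hypothetical record for one detection on one sub-step."""
    category: str                # e.g. "telemetry", "tactic", "technique"
    config_change: bool = False  # True if it only appeared after reconfiguration


def summarize(substeps, include_config_changes=False):
    """Return visibility and analytic coverage as percentages of all sub-steps.

    `substeps` is a list with one entry per sub-step; each entry is the list
    of Detection records the vendor produced for that sub-step.
    """
    if not substeps:
        raise ValueError("at least one sub-step is required")
    visible = analytic = 0
    for detections in substeps:
        # By default, drop anything that needed a configuration change.
        kept = [d for d in detections
                if include_config_changes or not d.config_change]
        if kept:
            visible += 1
        if any(d.category in ANALYTIC for d in kept):
            analytic += 1
    total = len(substeps)
    return {
        "visibility_pct": 100 * visible / total,
        "analytic_pct": 100 * analytic / total,
    }


# Made-up sample: four sub-steps, one detected only after a config change.
sample = [
    [Detection("technique")],
    [Detection("telemetry")],
    [Detection("tactic", config_change=True)],
    [],
]

print(summarize(sample))
print(summarize(sample, include_config_changes=True))
```

Comparing the two calls shows how much of a result depends on reconfiguration: in this made-up sample, visibility drops from 75% to 50% once configuration-change detections are excluded.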

How’d Cynet do?

Based on Cynet’s analysis, our team is proud of our performance against Turla in this year’s MITRE ATT&CK Evaluation, in which we outperformed the majority of vendors in several key areas. Here are our top takeaways:

- Cynet delivered 100% Detection: (19 of 19 attack steps) with NO CONFIGURATION CHANGES

- Cynet delivered 100% Visibility: (143 of 143 attack sub-steps) with NO CONFIGURATION CHANGES

- Cynet delivered 100% Analytic Coverage: (143 of 143 detections) with NO CONFIGURATION CHANGES

- Cynet delivered 100% Real-time Detections: (0 Delays across all 143 detections)

See the full analysis of Cynet’s performance in the 2023 MITRE ATT&CK Evaluation.

Let’s dive a little deeper into Cynet’s analysis of some of the results.

Cynet was a top performer in both visibility and detection quality. This analysis illustrates how well a solution detects threats and provides the context necessary to make the detections actionable. Missed detections are an invitation for a breach, while poor-quality detections create unnecessary work for security analysts or potentially cause the alert to be ignored, which, again, is an invitation for a breach.

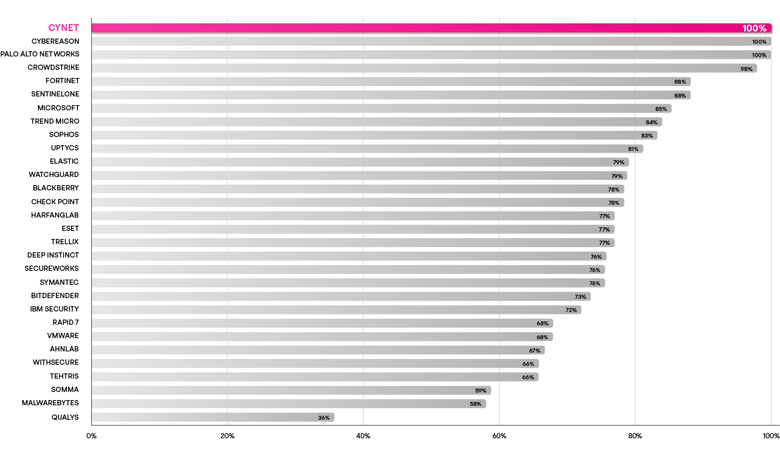

Cynet delivered 100% visibility, detecting every one of the 143 attack sub-steps with no configuration changes. The following chart shows the percentage of detections across all 143 attack sub-steps before the vendors implemented configuration changes. Cynet performed as well as two very large, well-known security companies despite being a fraction of their size, and far better than some of the biggest names in cybersecurity.

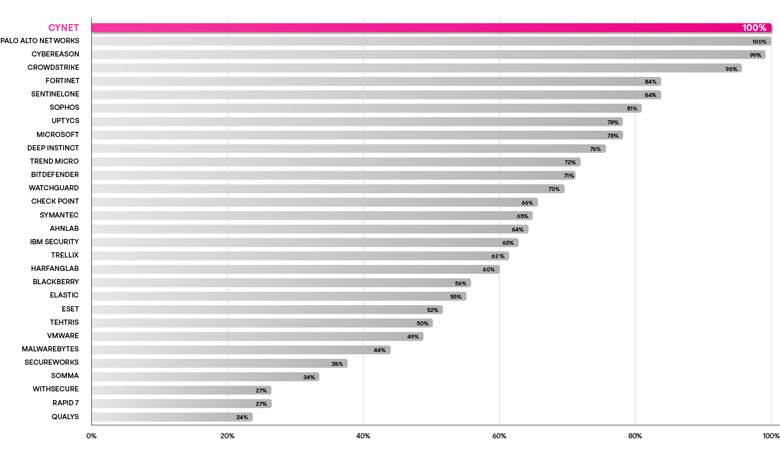

Cynet provided analytic coverage for 100% of the 143 attack sub-steps with no configuration changes. The following chart shows the percentage of detections that contained important tactic or technique information across the 143 attack sub-steps, again before configuration changes were implemented. Cynet performed as well as Palo Alto Networks, a $76 billion publicly traded company with 50 times the number of employees, and far better than many established, publicly traded brands.

Still have questions?

Understandable.

In this webinar, Cynet CTO Aviad Hasnis and ISMG SVP Editorial Tom Field review the recently released results and share expert advice to help cybersecurity leaders interpret the results and find the vendor that best fits the specific needs of their organization. They’ll also share more details on Cynet’s performance during the tests and how that could translate to your team’s unique goals.

Author: George Tubin, Director of Product Strategy, Cynet