ChatGPT and similar large language models (LLMs) have added further complexity to the ever-growing online threat landscape. Cybercriminals no longer need advanced coding skills to execute fraud and other damaging attacks against online businesses and customers, thanks to bots-as-a-service, residential proxies, CAPTCHA farms, and other easily accessible tools.

Now, the latest technology damaging businesses’ bottom line is ChatGPT.

OpenAI and other LLM developers have raised ethical issues by training their models on data scraped from across the internet. Beyond that, LLMs are negatively impacting enterprises’ web traffic, which can be extremely damaging to business.

3 Risks Presented by LLMs, ChatGPT, & ChatGPT Plugins

Among the threats ChatGPT and ChatGPT plugins can pose against online businesses, there are three key risks we will focus on:

- Content theft (or republishing data without permission from the original source) can hurt the authority, SEO rankings, and perceived value of your original content.

- Reduced traffic to your website or app becomes problematic, as users getting answers directly through ChatGPT and its plugins no longer need to find or visit your pages.

- Data breaches, or even the accidental broad distribution of sensitive data, are becoming more likely by the second. Not all “public-facing” data is intended to be redistributed or shared outside of the original context, but scrapers do not know the difference. The results can include anything from a loss in competitive advantage to severe damages to your brand reputation.

Depending on your business model, your company should consider ways to opt out of having your data used to train LLMs.

3 Most Impacted Industries

The most at-risk industries for ChatGPT-driven damage are those in which data privacy is a top concern, unique content and intellectual property are key differentiators, and ads, eyes, and unique visitors are an important source of revenue. These industries include:

- E-Commerce: Product descriptions and pricing models can be key differentiators.

- Streaming, Media, & Publishing: All about providing the audience with unique, creative, and entertaining content.

- Classified Ads: Pay per click (PPC) advertising revenue can be severely impacted by a decrease in website traffic (as well as other bot issues like click fraud or skewed site analytics due to scrapers).

How ChatGPT Gets Training Data

According to a research paper published by OpenAI, GPT-3 (the predecessor of the models behind ChatGPT) was trained on several datasets:

- Common Crawl

- WebText2

- Books1 and Books2

- Wikipedia

The largest share of the training data comes from Common Crawl, an open repository of web crawl data. Common Crawl’s crawler bot, CCBot, is built on Apache Nutch, an open-source framework for building large-scale web crawlers.

The current version of CCBot crawls from Amazon Web Services (AWS) and identifies itself with the user agent ‘CCBot/2.0’. However, businesses that want to allow CCBot should not rely solely on the user agent to identify it, because many bad bots spoof their user agents to disguise themselves as good bots and avoid being blocked.

To allow CCBot on your website, verify it with attributes such as IP ranges or reverse DNS rather than the user agent alone. To keep ChatGPT from training on your content, your website should, at minimum, block traffic from CCBot.
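As a minimal sketch of verifying a crawler by network attributes instead of by user agent, the Python below checks a client IP against an allowlist of ranges and performs forward-confirmed reverse DNS (reverse lookup, domain check, then a forward lookup that must point back to the same IP). The IP ranges (RFC 5737 documentation ranges) and the `.crawler.example` hostname suffix are hypothetical placeholders, not CCBot’s real values; substitute whatever the crawler operator actually publishes.

```python
import ipaddress
import socket
from typing import Callable, Iterable, Tuple, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# HYPOTHETICAL allowlist: documentation ranges stand in for the ranges a
# crawler operator publishes. Replace with the operator's real list.
EXAMPLE_CRAWLER_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]

# HYPOTHETICAL hostname suffix. The leading dot stops look-alike domains
# such as "evilcrawler.example" from matching.
EXAMPLE_CRAWLER_SUFFIXES = (".crawler.example",)


def ip_in_networks(ip: str, networks: Iterable[Network]) -> bool:
    """Return True if `ip` falls inside any of the given networks."""
    addr = ipaddress.ip_address(ip)
    # An IPv6 address is never "in" an IPv4 network (and vice versa).
    return any(addr in net for net in networks)


def forward_confirmed_rdns(
    ip: str,
    allowed_suffixes: Tuple[str, ...],
    reverse_lookup: Callable[[str], tuple] = socket.gethostbyaddr,
    forward_lookup: Callable[[str], tuple] = socket.gethostbyname_ex,
) -> bool:
    """Verify `ip` with forward-confirmed reverse DNS.

    1. Reverse-resolve the IP to a hostname (PTR record).
    2. Require the hostname to end with an allowed suffix.
    3. Resolve that hostname forward and require the original IP among the
       results, because whoever controls an IP's reverse zone can make its
       PTR record claim any name.
    Note: socket.gethostbyname_ex only returns IPv4 addresses.
    """
    try:
        hostname, _aliases, _ = reverse_lookup(ip)
        if not hostname.endswith(allowed_suffixes):
            return False
        _name, _aliases, addresses = forward_lookup(hostname)
    except OSError:  # socket.herror and socket.gaierror subclass OSError
        return False
    return ip in addresses
```

The resolver functions are parameters so the check can be exercised without network access, for example by passing stub functions that return fixed `(hostname, aliases, addresses)` tuples.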

3 Ways to Block CCBot

- Robots.txt: Since CCBot respects robots.txt files, you can block it by adding the following directives to your robots.txt file:

  ```
  User-agent: CCBot
  Disallow: /
  ```

- Blocking CCBot User Agent: You can safely block an unwanted bot by its user agent. (Note that, in contrast, allowing bot traffic based on user agent alone is unsafe, because attackers can easily spoof it.)

- Bot Management Software: Whether it’s for ChatGPT or a dark web database, the best way to prevent bots from scraping your websites, apps, and APIs is with specialized bot protection that uses machine learning to keep up with evolving threat tactics in real time.
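To check that a robots.txt rule does what you expect, Python’s standard `urllib.robotparser` module can evaluate it locally. The sketch below parses the CCBot rule above and asks whether a CCBot user agent and an ordinary browser may fetch a page; the page URL and browser string are illustrative only. It also shows why the `User-agent` and `Disallow` lines should sit together: Python’s parser treats a blank line right after `User-agent` as the end of that group and silently drops the rule. Other parsers may be more lenient, but the gap-free form is the safe one.

```python
from urllib.robotparser import RobotFileParser

# The CCBot rule, with no blank line between the two directives.
ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /
"""

# The same rule with a stray blank line between the directives.
ROBOTS_TXT_WITH_GAP = """\
User-agent: CCBot

Disallow: /
"""

# Illustrative values only: any page URL and ordinary browser UA will do.
PAGE_URL = "https://www.example.com/blog/some-article"
CCBOT_UA = "CCBot/2.0"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def allowed(robots_txt: str, user_agent: str, url: str = PAGE_URL) -> bool:
    """Return True if `user_agent` may fetch `url` under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(allowed(ROBOTS_TXT, CCBOT_UA))           # False: CCBot is blocked
    print(allowed(ROBOTS_TXT, BROWSER_UA))         # True: other agents unaffected
    print(allowed(ROBOTS_TXT_WITH_GAP, CCBOT_UA))  # True: the rule was dropped
```

Keep in mind that robots.txt is advisory: it only stops crawlers that choose to honor it.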

Scrapers Can Always Find Workarounds

LLMs use scraper bots to gather training data. While blocking CCBot might be effective for blocking ChatGPT scrapers today, there is no telling what the future holds for LLM scrapers. Moving forward, if too many websites block OpenAI (for example) from accessing their content, the developers could decide to stop respecting robots.txt and could stop declaring their crawler identity in the user agent.

Another possibility is that OpenAI could use its partnership with Microsoft to access data collected by Bing’s crawlers, making the situation more challenging for website owners. Bing’s bots identify as Bingbot, but blocking them would keep your site out of the Bing search index, resulting in fewer human visitors.

You could face a similar dilemma with Bard, Google’s LLM and a competitor to ChatGPT. Google is vague about the origin and collection of the public data used to train Bard, but it is possible that Bard is, or will be, trained with data collected by Googlebot. As with Bingbot, blocking Googlebot would likely be unwise, because it affects how your website gets indexed and how much traffic the Google search engine drives to your site. The result could be a serious drop in visitors.

Using Plugins to Access Live Data

One of the main limitations of models like ChatGPT is their lack of access to live data. Because ChatGPT was trained on a dataset that ends in 2021, it cannot provide the most relevant, up-to-date information on its own. That’s where plugins come in.

Plugins are used to connect LLMs like ChatGPT to external tools and allow the LLMs to access external data available online, which can include private data and real-time news. Plugins also let users complete actions online (e.g. booking a flight or ordering groceries) through API calls.

Some businesses are developing their own plugins to provide a new way for users to interact with their content/services via ChatGPT. But, depending on your industry, letting users interact with your website through third-party ChatGPT plugins can mean fewer ads seen by your users, as well as lower traffic to your website.

You may also notice that users are less willing to pay for your premium features once those features can be replicated through third-party ChatGPT plugins. For example, an unofficial client that interacts with your site could offer your premium features through its own UI.

How to Identify ChatGPT Plugin Requests

OpenAI documentation states that requests whose User-Agent HTTP header contains the token “ChatGPT-User” come from ChatGPT plugins. But the documentation does not state that this disclosed user agent is the only one plugins can use when making HTTP requests.

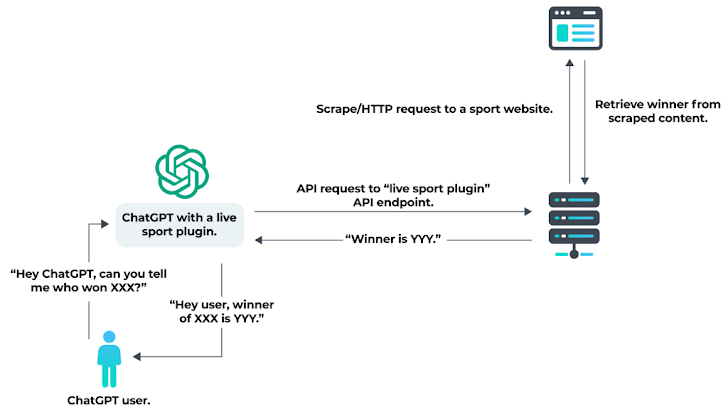

In practice, because ChatGPT plugins interact with third-party APIs, those APIs can make any kind of HTTP request from their own infrastructure. The diagram below shows what happens when a fictitious “Live Sport Plugin” is used with ChatGPT to get an update about a sporting event.

- ChatGPT triggers the Live Sport Plugin, making a request to the API endpoints based on parameters from the user prompt.

- The plugin makes an HTTP request to scrape a sports website to get the latest information about the event.

- The information is then passed back to the end user through ChatGPT.

A plugin could just as easily call a sports data API instead of scraping the sports website. Either way, these requests are made directly from the server hosting the plugin API, so nothing constrains the user agent they send.
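To make the point about unconstrained user agents concrete, here is a minimal Python sketch of the outbound request a plugin backend might build with the standard `urllib.request` module. The URL, the `build_upstream_request` helper, and the `LiveSportPlugin/1.0` string are hypothetical; the sketch only constructs the requests and never sends them.

```python
from urllib.request import Request

# HYPOTHETICAL page a "Live Sport Plugin" backend might fetch.
SCORES_URL = "https://sports.example.com/live/match-123"


def build_upstream_request(url: str, user_agent: str) -> Request:
    """Build (but do not send) the HTTP request a plugin backend would make.

    The backend chooses the User-Agent string freely: it can identify
    itself honestly or copy a mainstream browser's header.
    """
    return Request(url, headers={"User-Agent": user_agent})


# HYPOTHETICAL self-identifying user agent.
honest = build_upstream_request(SCORES_URL, "LiveSportPlugin/1.0")
# The same request, disguised as a desktop browser.
disguised = build_upstream_request(
    SCORES_URL, "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)"
)

# urllib stores header names via str.capitalize(), hence "User-agent".
print(honest.get_header("User-agent"))
print(disguised.get_header("User-agent"))
# Sending either request would be a call to urllib.request.urlopen(request).
```

From the target website’s point of view, the disguised request carries no marker that links it to ChatGPT.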

How to Block ChatGPT Plugin Requests

In a process similar to blocking ChatGPT’s web scrapers, you can block requests from plugins that declare themselves with the “ChatGPT-User” substring in their user agent. But blocking that user agent could also block ChatGPT users who have “browsing” mode activated. And, contrary to what OpenAI documentation might suggest, blocking requests from “ChatGPT-User” does not guarantee that ChatGPT and its plugins can’t reach your data under different user agent tokens.
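A user-agent block of this kind can be sketched as a small WSGI middleware in Python. The token list (“ChatGPT-User” and “CCBot”) comes from this article; the class and function names are hypothetical, and the sketch has the same limits described here: it only stops clients that declare themselves, and it may also block legitimate ChatGPT browsing sessions.

```python
from typing import Callable, Iterable

# Case-insensitive substrings to block: the plugin token OpenAI documents
# and Common Crawl's crawler.
BLOCKED_UA_TOKENS = ("ChatGPT-User", "CCBot")


def is_blocked_user_agent(
    user_agent: str, tokens: Iterable[str] = BLOCKED_UA_TOKENS
) -> bool:
    """Return True if any blocked token appears in the User-Agent header."""
    ua = user_agent.lower()
    return any(token.lower() in ua for token in tokens)


class BlockUserAgentsMiddleware:
    """Minimal WSGI middleware that answers 403 to blocklisted user agents."""

    def __init__(self, app: Callable, tokens: Iterable[str] = BLOCKED_UA_TOKENS):
        self.app = app
        self.tokens = tuple(tokens)

    def __call__(self, environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "")
        if is_blocked_user_agent(user_agent, self.tokens):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden\n"]
        # Everything else, including requests with no User-Agent, passes on.
        return self.app(environ, start_response)


def hello_app(environ, start_response):
    """Stand-in for your real WSGI application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello\n"]


# Wrap the application; any WSGI server can then serve `app`.
app = BlockUserAgentsMiddleware(hello_app)
```

Blocking (rather than allowing) by user agent is the safe direction: a bot gains nothing by pretending to be a client you refuse.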

In fact, ChatGPT plugins can make requests directly from the servers hosting their APIs using any user agent, and even using automated (headless) browsers. Detecting plugins that do not declare their identity in the user agent requires advanced bot detection techniques.

Determining Your Next Steps

Obtaining high-quality datasets of human-generated content will remain of critical importance to LLMs. In the long term, companies like OpenAI (funded partially by Microsoft) and Google may be tempted to use data collected by Bingbot and Googlebot to build datasets to train their LLMs. That would make it more difficult for websites to simply opt out of having their data collected, since most online businesses rely heavily on Bing and Google to index their content and drive traffic to their sites.

Websites with valuable data will either want to look for ways to monetize the use of their data or opt out of AI model training to avoid losing web traffic and ad revenue to ChatGPT and its plugins. If you wish to opt out, you’ll need advanced bot detection techniques, such as fingerprinting, proxy detection, and behavioral analysis, to stop bots before they can access your data.
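Behavioral analysis draws on many signals; one of the simplest is request rate per client. The Python class below is a deliberately minimal sketch of that single signal, not a substitute for the fingerprinting, proxy detection, and machine learning described here. It flags a client key that sends more than a chosen number of requests within a trailing time window; the key (for example an IP address or session ID) and the limits are assumptions you would tune. Scrapers that rotate residential proxies spread requests across many keys, which is why one signal like this is not enough on its own.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class SlidingWindowRateFlagger:
    """Flag a client key that sends more than `max_requests` requests
    within a trailing window of `window_seconds` seconds."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Per-key timestamps of recent requests, oldest first.
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, key: str, now: Optional[float] = None) -> bool:
        """Record one request for `key`; return True if it is over the limit."""
        if now is None:
            now = time.monotonic()
        timestamps = self._history[key]
        timestamps.append(now)
        # Drop timestamps that have fallen out of the trailing window.
        while timestamps and now - timestamps[0] > self.window_seconds:
            timestamps.popleft()
        return len(timestamps) > self.max_requests


# HYPOTHETICAL limits: more than 3 requests in 10 seconds gets flagged.
flagger = SlidingWindowRateFlagger(max_requests=3, window_seconds=10)
```

Passing `now` explicitly makes the behavior easy to test; in production the default monotonic clock is used.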

Advanced solutions for bot and fraud protection leverage AI and machine learning (ML) to detect and stop unfamiliar bots from the first request, keeping your content safe from LLM scrapers, unknown plugins, and other rapidly evolving AI technologies.

Note: This article is expertly written and contributed by Antoine Vastel, PhD, Head of Research at DataDome.