I want to respond to my buddy Joseph Thacker’s blog post about Prompt Injection and whether or not it’s a vulnerability.

josephthacker.com

Prompt Injection Isn’t a Vulnerability (Most of the Time)

Prompt injection is almost never the root cause of AI vulnerabilities—the actual issue is what the model is permitted to do

So I was one of the friends debating with Joseph about whether prompt injection is a vulnerability or not.

I’m in the “Yes” camp, and I want to give my reasoning.

But first, I want to steel man the opposite position, which is that Prompt Injection is not a vulnerability.

On this view, prompt injection is actually just a delivery mechanism. Not for a vulnerability, but for an attack that targets a vulnerability.

So, this is like a hose sending poison water into a garden.

- The hose is not the vulnerability (it’s the delivery mechanism)

- The poison is not the vulnerability (it’s the attack)

- The suseptability to poison is the vulnerability

- And the plants in the garden are the target

This is pretty compelling, so now let me tell you why I don’t agree.

My favorite analogy for this is the Pope.

The Pope has to walk into crowds and be extremely close to thousands of people. That physical proximity is a vulnerability. The actual attack that might be leveraged against him—poison, a blowgun, shooting, punching—that’s the attack. His frailty might be another vulnerability.

But if we think about this from a risk management perspective, it’s absolutely a vulnerability that he’s going to be anywhere near thousands of people on this given day in the first place.

It doesn’t make it any less of a vulnerability if we have to silently accept it as a course of business.

Same with prompt injection. If we’re going to use AI agents in our application, we have to use human language via voice or text—which ultimately becomes text that can be injected with commands that might cause harm.

That absolutely is a vulnerability that needs to be considered when we’re thinking about the overall risk to the application.

Here’s another way to look at it.

SQL injection is universally classified as a vulnerability (CWE-89). Nobody argues that SQLi is:

Just an attack vector.

Or:

Merely a transmission line.

We call it a vulnerability because the database can’t distinguish between query structure and user-supplied data.

The pattern is identical:

- SQLi: User input gets interpreted as SQL commands

- Prompt injection: User input gets interpreted as system instructions

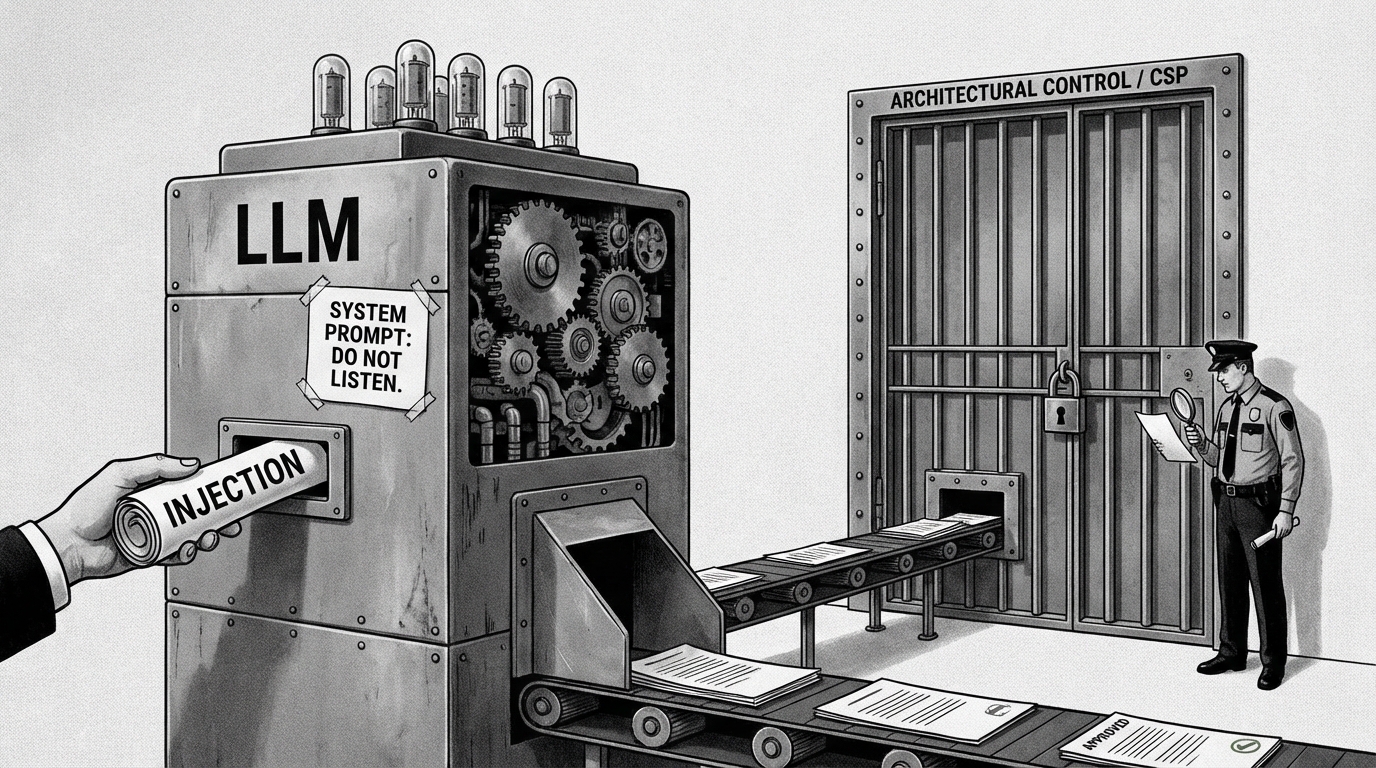

Both stem from the same architectural flaw—mixing the control plane with the data plane. The security industry spent decades recognizing that the channel of input wasn’t the vulnerability; the vulnerability was the underlying confusion of code and data at the parsing layer.

If we accept CWE-89 as a vulnerability, intellectual consistency demands we apply the same classification logic to prompt injection.

Some people push back on the Pope analogy:

Proximity isn’t a vulnerability—it’s just operational exposure. The vulnerability would be inadequate screening or lack of bulletproof glass.

But here’s what that misses.

If we think of proximity a vulnerability, we keep our critical thinking open. I have the option to re-evaluate the goals and maybe:

- Project the Pope holographically

- Use a body double

- Etc.

In other words, we might find new ways of achieving the goal that reduce risk.

If we dismiss proximity as:

Just how things work.

Or:

Inherent to the role.

We close off those lines of creativity.

The same applies to LLMs. If we say:

LLMs have to accept text input, that’s just inherent.

We stop looking for alternatives. But if we say:

The inability to distinguish instructions from data is a vulnerability.

We might discover new architectures—instruction signing, hardware-level separation, entirely new approaches.

My AI friend Kai red-teamed this argument. Yes, really—I actually have a red team skill built for this. He initially made a mistake worth highlighting that actually taught me a lot:

The Pope analogy fails because the Pope could theoretically be isolated from crowds while LLMs can’t be isolated from language input.

But that’s a category error.

The LLM doesn’t have goals—the application has goals. The LLM is just a component, like the Pope’s physical body is a component. You don’t ask “what’s the goal of the Pope’s body”—you ask “what’s the goal of the Pope.”

The correct parallel:

| Entity with Goals | Component | Vulnerability in Component |

|---|---|---|

| The Pope | Physical body/presence | Must be near crowds |

| The Application | LLM | Can’t distinguish instructions from data |

Both the Pope and the application have goals (serve the faithful / serve the user). Both use components with inherent limitations that create risk. Both could potentially be re-architected—the Pope through holograms, the application through different architectures that achieve the same goal without the LLM’s vulnerability profile.

So, on my view, Prompt Injection is a vulnerability because:

- It’s a technical problem that can be attacked and that contributes to the overall risk level of the system

- It’s remarkably similar to SQL injection, i.e., the inability to distinguish instructions from data

- Considering such a system, to be just background risk that can’t be addressed, shuts down creative thinking around how to reduce that risk

The fact that we might have to accept this vulnerability as a cost of doing business (at least in 2025) doesn’t make it less of a vulnerability.

The Pope accepts the risk of proximity because his mission requires it. Organizations accept Prompt Injection risk because their applications require humans to interact with their AI-powered interfaces. Fair enough.

But accepting this current reality should not be the same as writing it off as background noise.

As defenders, and as researchers, we need to treat it like an active vulnerability so we can mitigate and potentially address the risk it poses over time.