Facebook… err… Meta has been fighting a tough battle to curb misinformation without infringing on freedom of expression, and has been failing miserably.

In a bid to enhance transparency, the company has modified its penalty system for posts that violate its rules, providing users with more information about why something is being removed.

“Under the new system, we will focus on helping people understand why we have removed their content, which is shown to be more effective at preventing re-offending, rather than so quickly restricting their ability to post,” wrote Monika Bickert, Vice President of Content Policy at Meta, the parent company of Facebook.

“We will still apply account restrictions to persistent violators, typically beginning at the seventh violation, after we’ve given sufficient warnings and explanations to help the person understand why we removed their content. We will also restrict people from posting in groups at lower thresholds where warranted,” she added.

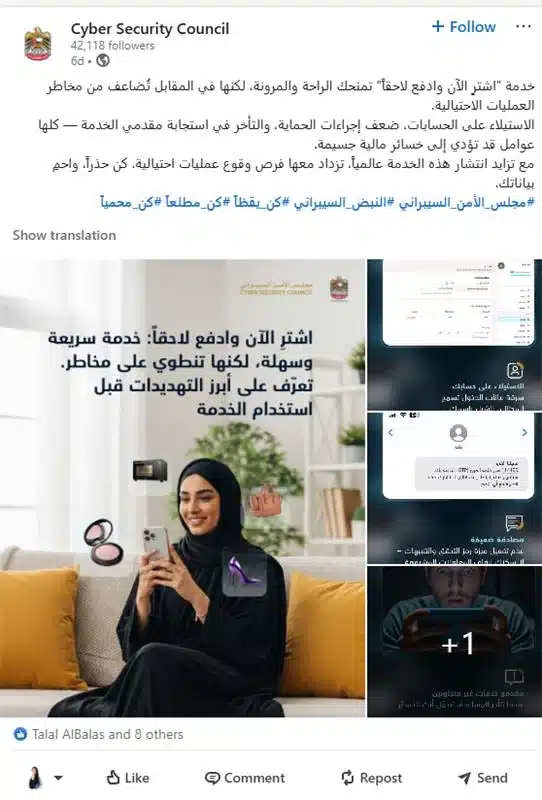

The decision to revise the norms comes, at least peripherally, close on the heels of controversial politician Donald Trump’s return to Meta’s platforms, Facebook and Instagram.

Facebook, free content, and conditions

Social media platforms, including Facebook, frequently encounter questionable content created by users, which is why they have content guidelines and policies for enforcement.

According to the latest announcement, the change in how rule-breakers are disciplined followed a self-examination informed by feedback from the Oversight Board, the independent body that reviews Meta’s content decisions.

According to Facebook’s assessment, the new policy will reduce the number of account bans that are deemed excessively harsh and might have been the result of a “mistake.” Rather than sending someone to “Facebook jail” for an inadvertent choice of words, the post or comment will be removed, and the user will receive a warning.

“The vast majority of people on our apps are well-intentioned. Historically, some of those people have ended up in ‘Facebook jail’ without understanding what they did wrong or whether they were impacted by a content enforcement mistake,” wrote Bickert.

The warning will provide specifics about what led to the removal in the first place, with the goal of guiding users and preventing future rule-breaking. Nevertheless, Facebook will continue to crack down on serious infractions such as child exploitation or illegal drug sales.

Although the punishment for minor violations will be lighter, repeat offenders will still face consequences. Facebook will increase the severity of penalties for those who have accumulated seven or more strikes, aiming for a more equitable approach to unintentional and deliberate rule-breaking.

The company also hopes that not blocking some posts will make it easier to identify problematic accounts and deal with them promptly. However, Facebook’s history with moderation points in an entirely different direction.

Misinformation and change of norms

The latest announcement comes weeks after Donald Trump’s return to Meta’s platforms.

After being suspended for more than two years, Donald Trump’s Facebook and Instagram pages became live again on February 9. The ban was enacted after the 2021 Capitol riots, during which the then-president praised those who were “engaged in violence.”

“Like any other Facebook or Instagram user, Mr. Trump is subject to our Community Standards. In light of his violations, he now also faces heightened penalties for repeat offenses — penalties which will apply to other public figures whose accounts are reinstated from suspensions related to civil unrest under our updated protocol,” wrote Nick Clegg, the company’s president of global affairs, in an announcement on 25 January.

“In the event that Mr. Trump posts further violating content, the content will be removed and he will be suspended for between one month and two years, depending on the severity of the violation.”

“As a general rule, we don’t want to get in the way of open debate on our platforms, esp in context of democratic elections. People should be able to hear what politicians are saying – good, bad & ugly – to make informed choices at the ballot box,” Clegg (@nickclegg) tweeted the same day, January 25, 2023.

Trump’s posts that challenge the outcome of the 2020 US presidential election without evidence remain on both platforms. Despite the suspension, Trump retains millions of followers on both platforms and has announced his candidacy for the 2024 presidential election.

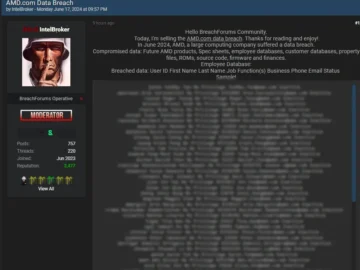

Bigger the platform, faster the spread of misinformation

From election manipulation to the spread of COVID scares, Facebook’s reach has been doing more harm than good, warn reports from across the world.

“As a researcher who studies social and civic media, I believe it’s critically important to understand how misinformation spreads online. But this is easier said than done,” wrote Ethan Zuckerman, Associate Professor of Public Policy, Communication, and Information at the University of Massachusetts Amherst.

“Simply counting instances of misinformation found on a social media platform leaves two key questions unanswered: How likely are users to encounter misinformation, and are certain users especially likely to be affected by misinformation? These questions are the denominator problem and the distribution problem.”

The root cause lies in the business interests of the major social media companies, according to an investigation by MIT Technology Review, a bimonthly magazine wholly owned by the Massachusetts Institute of Technology.

The investigation blames Facebook’s and Google’s algorithms for allowing disinformation and clickbait to spread across their platforms, with negative consequences for society. Even worse, “Facebook isn’t just amplifying misinformation. The company is also funding it,” said the report.

According to the investigation, Facebook and Google are paying millions of advertising dollars to bankroll clickbait actors, “fuelling the deterioration of information ecosystems around the world.”

“In 2015, six of the 10 websites in Myanmar getting the most engagement on Facebook were from legitimate media, according to data from CrowdTangle, a Facebook-run tool. A year later, Facebook (which recently rebranded to Meta) offered global access to Instant Articles, a program publishers could use to monetize their content,” the report said, citing one example.

“One year after that rollout, legitimate publishers accounted for only two of the top 10 publishers on Facebook in Myanmar. By 2018, they accounted for zero. All the engagement had instead gone to fake news and clickbait websites. In a country where Facebook is synonymous with the internet, the low-grade content overwhelmed other information sources.”