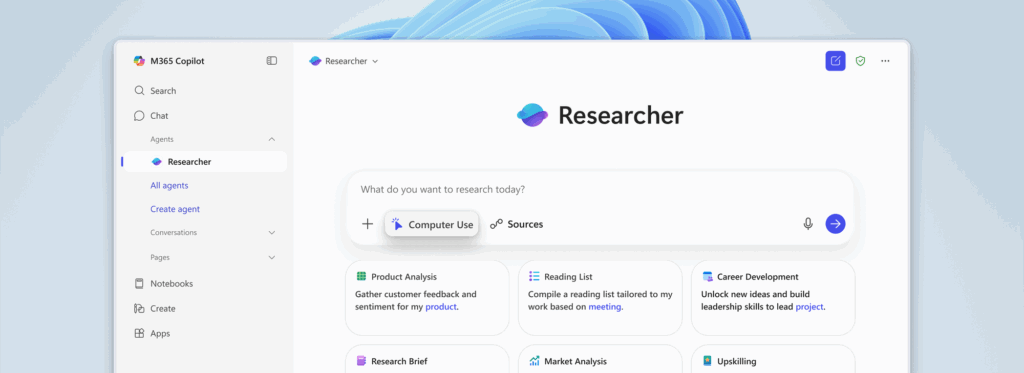

Microsoft has launched Researcher with Computer Use in Microsoft 365 Copilot. The new feature lets the AI assistant move beyond research tasks and perform actions on behalf of users through a secure virtual computer environment.

The innovation enables Copilot to navigate public websites, access authenticated content, and interact with web pages while maintaining enterprise-level security standards.

The Computer Use feature empowers Researcher to access premium subscription-based content that requires login credentials, execute tasks by clicking buttons and filling forms, and generate complex outputs such as presentations and spreadsheets.

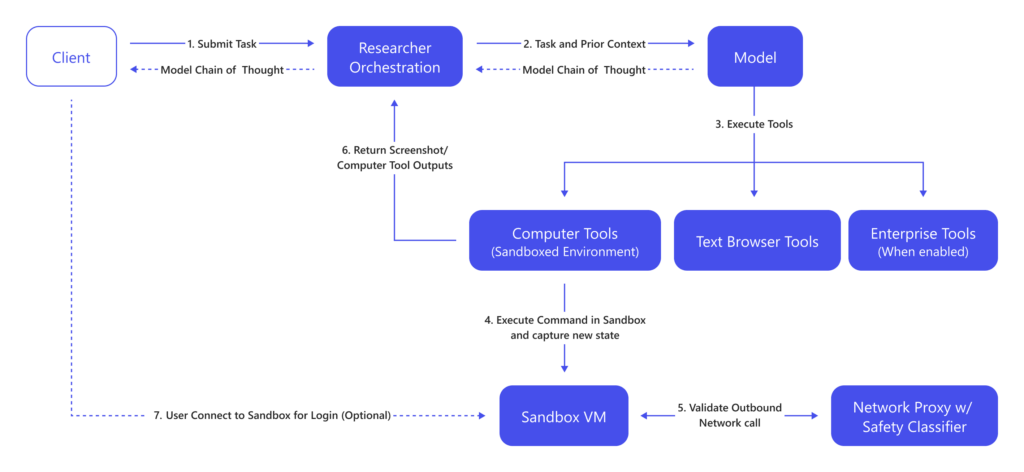

The system operates through a virtual machine running on Windows 365, which functions as a temporary cloud-based computer dedicated to each conversation session.

How Computer Use Transforms Research Capabilities

Users can request Researcher to prepare customer meeting briefs by gathering social media insights, create personalized reading lists based on ongoing projects, analyze industry trends from gated publications, or transform research findings into polished presentations.

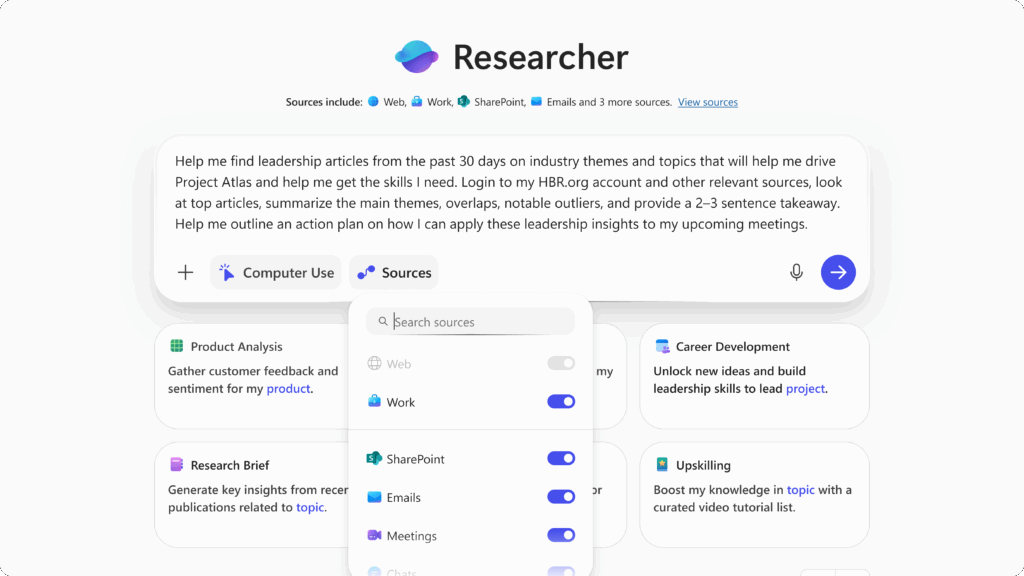

The technology connects to work data, including meetings, files, and chats, while providing users full visibility and control.

When activated, Researcher uses visual browsers, text browsers, terminal interfaces, and Microsoft Graph to carry out multi-step workflows.

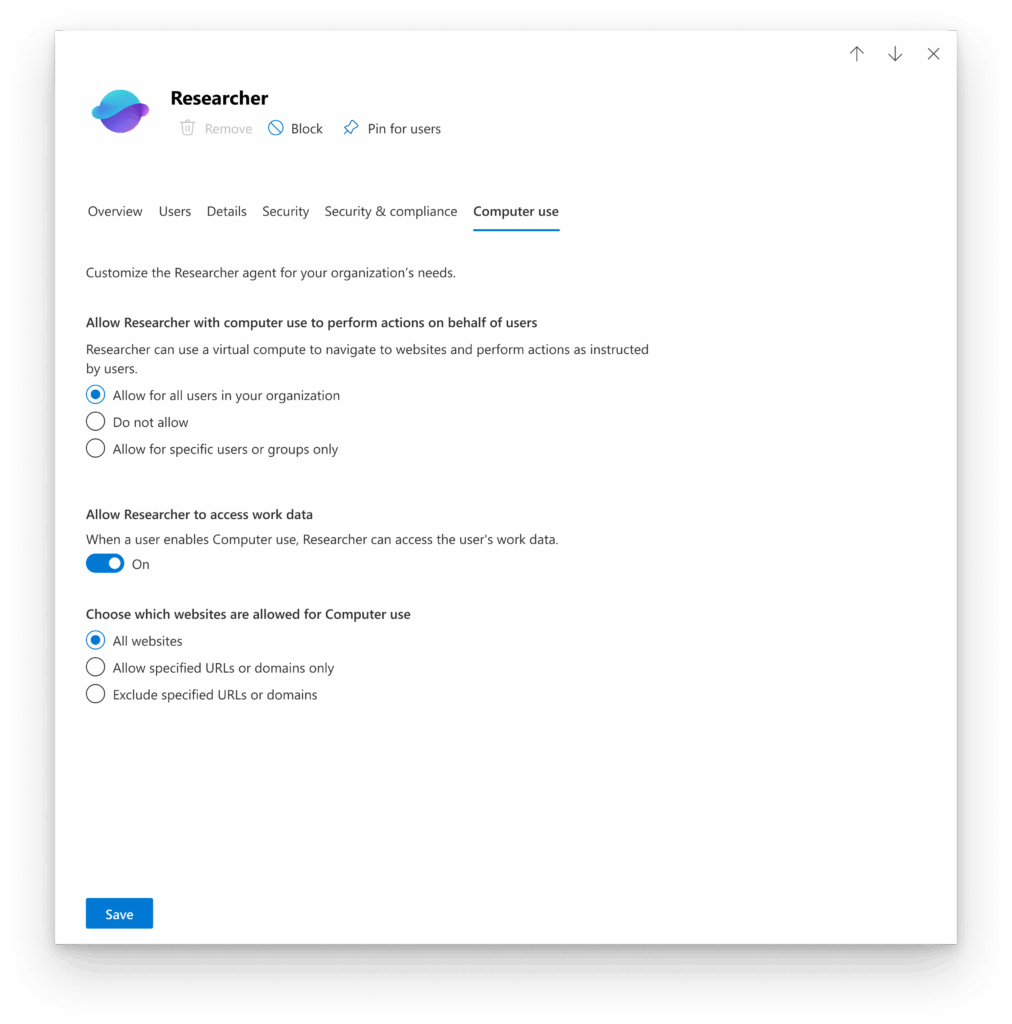

Users can customize which data sources the system accesses, and access to enterprise data is turned off by default when Computer Use is activated.

The system requests explicit user confirmation before taking actions and allows users to take control through secure screen-sharing when authentication is required.

Microsoft has implemented robust security measures to address potential risks associated with autonomous AI operations. The virtual machine operates in a fully sandboxed environment, isolated from corporate networks and user devices.

Safety classifiers inspect every network operation to validate domain safety, verify relevance to user queries, and analyze content types. This protection helps prevent cross-prompt injection attacks and jailbreak attempts that might occur during web navigation.
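The three checks described above (domain safety, relevance to the user's query, and content type) can be pictured as a gate that every outbound request must pass. The Python sketch below is purely illustrative and is not Microsoft's implementation: the `NetworkRequest` type, the `inspect_request` function, the blocked-host list, the allowed content types, and the keyword-overlap relevance test are all assumptions standing in for real classifiers.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple
from urllib.parse import urlsplit


@dataclass(frozen=True)
class NetworkRequest:
    """Hypothetical record of one network operation inside the sandbox."""
    url: str
    content_type: str
    user_query: str
    page_text: str = ""


# Illustrative values only; a real system would use trained classifiers.
BLOCKED_HOSTS = {"malware.example"}
ALLOWED_CONTENT_TYPES = {"text/html", "application/json", "application/pdf"}


def domain_is_safe(req: NetworkRequest) -> bool:
    host = (urlsplit(req.url).hostname or "").lower()
    return bool(host) and host not in BLOCKED_HOSTS


def is_relevant(req: NetworkRequest) -> bool:
    # Toy relevance test: some longer query word must appear in the page text.
    words = {w for w in req.user_query.lower().split() if len(w) > 3}
    text = req.page_text.lower()
    return any(w in text for w in words)


def content_type_ok(req: NetworkRequest) -> bool:
    # Ignore parameters such as "; charset=utf-8".
    base = req.content_type.split(";")[0].strip().lower()
    return base in ALLOWED_CONTENT_TYPES


CHECKS: List[Tuple[str, Callable[[NetworkRequest], bool]]] = [
    ("domain", domain_is_safe),
    ("relevance", is_relevant),
    ("content_type", content_type_ok),
]


def inspect_request(req: NetworkRequest) -> Tuple[bool, Optional[str]]:
    """Return (True, None) if all checks pass, else (False, first failed check)."""
    for name, check in CHECKS:
        if not check(req):
            return False, name
    return True, None
```

Running the checks in a fixed order and stopping at the first failure keeps the reason for a blocked request easy to log, which fits the article's point that sandbox actions are auditable.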

Browser actions performed in the sandbox are fully auditable through standard Microsoft 365 Copilot logging mechanisms.

User credentials never transfer to or from the sandbox environment, and all intermediate files are automatically deleted when sessions end.

Administrators control feature availability through the Microsoft 365 admin center, where they can specify which security groups can access Computer Use, manage domain allow and deny lists, and govern whether users can combine enterprise and web data.
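To make the admin controls concrete, here is a minimal Python sketch of how such a policy might be evaluated. It is an assumption-laden illustration, not the product's configuration format or API: the `ComputerUsePolicy` class, its field names, and the rule that the deny list takes precedence over the allow list are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterable, Set
from urllib.parse import urlsplit


@dataclass
class ComputerUsePolicy:
    """Hypothetical admin policy; field names are illustrative only."""
    allowed_groups: Set[str] = field(default_factory=set)
    allow_domains: Set[str] = field(default_factory=set)  # empty means no allow list
    deny_domains: Set[str] = field(default_factory=set)
    allow_enterprise_with_web: bool = False


def _matches(host: str, domains: Set[str]) -> bool:
    # A host matches a listed domain if it equals it or is a subdomain of it.
    return any(host == d or host.endswith("." + d) for d in domains)


def user_may_use(policy: ComputerUsePolicy, user_groups: Iterable[str]) -> bool:
    """The user needs membership in at least one permitted security group."""
    return bool(policy.allowed_groups & set(user_groups))


def url_permitted(policy: ComputerUsePolicy, url: str) -> bool:
    """Assumed precedence: deny list first, then allow list if one is set."""
    host = (urlsplit(url).hostname or "").lower()
    if not host:
        return False
    if _matches(host, policy.deny_domains):
        return False
    if policy.allow_domains:
        return _matches(host, policy.allow_domains)
    return True
```

For example, a policy with `allowed_groups={"research-pilot"}` and `deny_domains={"example.org"}` would admit a user in the `research-pilot` group, permit `https://news.example.com/a`, and reject `https://sub.example.org/x`.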

Performance testing shows Researcher with Computer Use scoring 44% better on the BrowseComp benchmark for complex browsing tasks and 6% better on the GAIA benchmark.

These benchmarks measure the system’s ability to reason across multiple information sources, synthesize scattered data, and solve real-world research challenges that require accessing diverse datasets and corporate records.
