Microsoft is warning of critical security risks as it introduces agentic AI capabilities to Windows through experimental features like Copilot Actions.

The company is rolling out a new agent workspace feature in private preview that establishes isolated environments for AI agents to operate, but the tech giant is being transparent about the novel cybersecurity threats these autonomous systems introduce.

Agent workspace represents a significant shift in how users interact with AI on Windows. Rather than traditional app execution, agents operate in a separate, contained session with their own dedicated account distinct from the user’s personal account.

This architectural approach enables runtime isolation and scoped authorization, allowing agents to complete tasks in the background while users continue working. However, this autonomy introduces unprecedented security challenges.

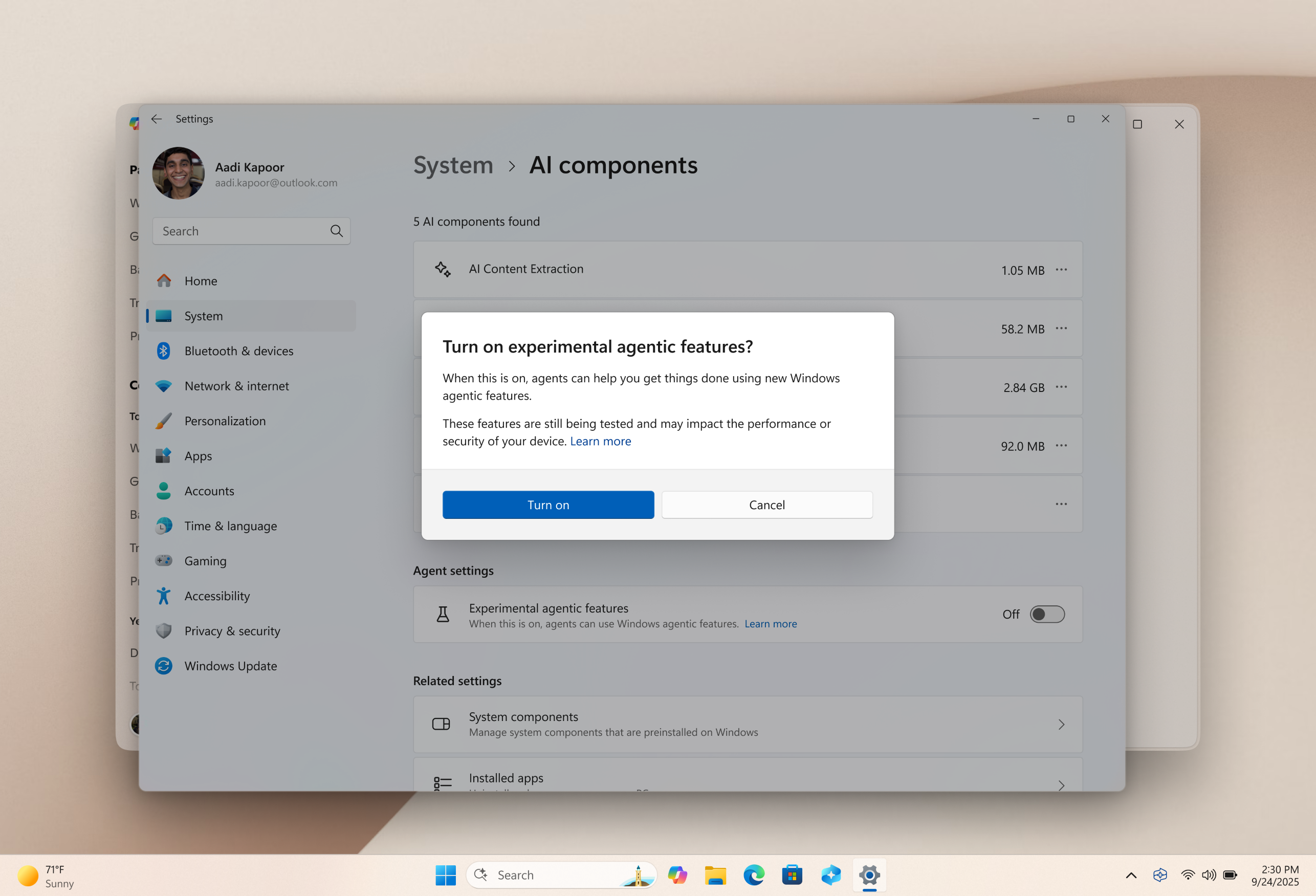

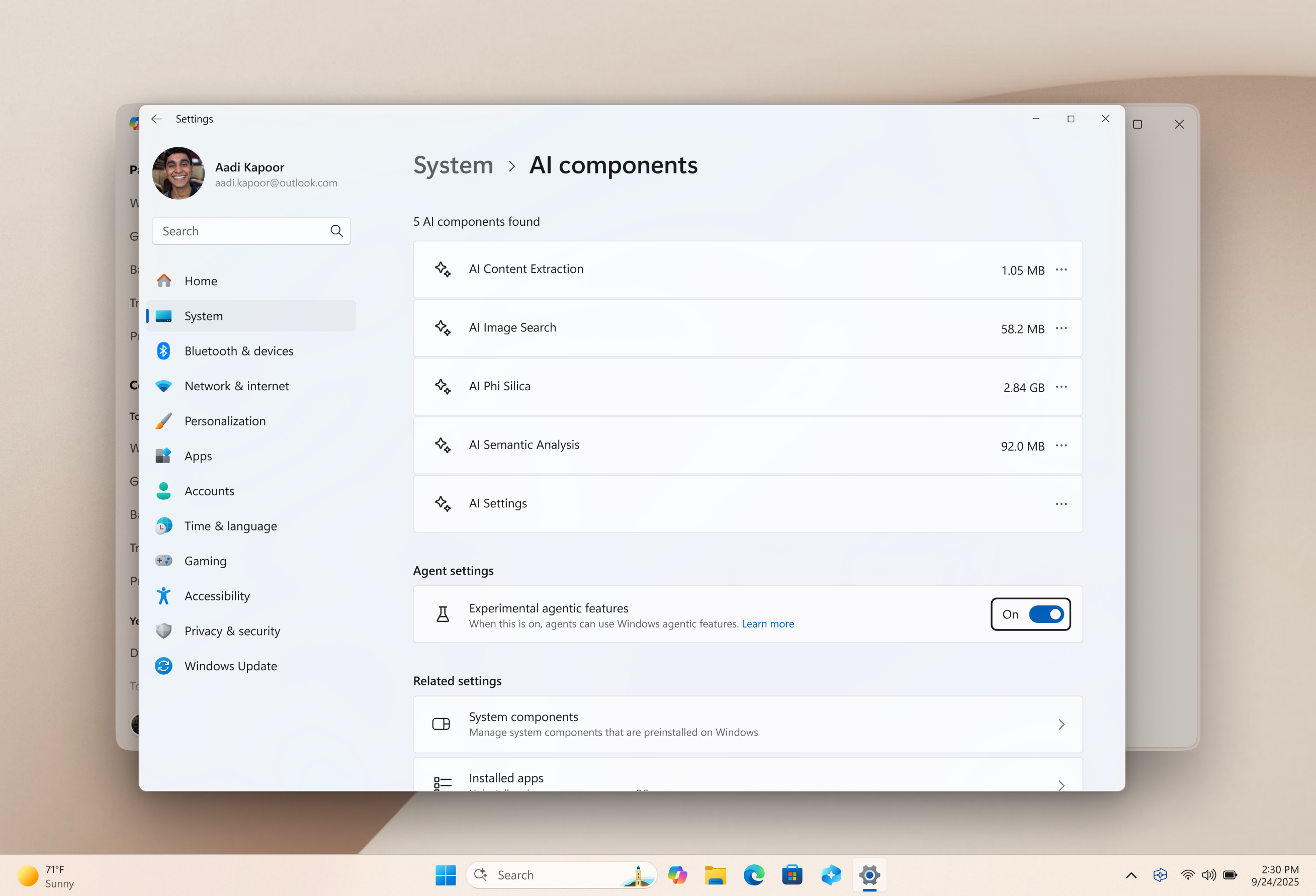

The experimental agentic features setting, which must be enabled by administrators, creates dedicated agent accounts and workspaces that provide access to commonly used folders including Documents, Downloads, Desktop, Music, Pictures, and Videos.

While this separation provides some containment, Microsoft acknowledges that agentic AI applications face novel security risks that traditional software doesn’t encounter.

Cross-Prompt Injection Threat

One of the most concerning vulnerabilities Microsoft highlights is the cross-prompt injection attack (XPIA).

In these attacks, malicious content embedded in user interface elements or documents can override agent instructions, potentially leading to unintended consequences such as data exfiltration or malware installation.

This represents a fundamentally different attack vector than traditional cybersecurity threats, as it exploits the reasoning capabilities that make these agents powerful.

Additionally, AI models still face functional limitations, occasionally hallucinating and producing unexpected outputs. These behavioral inconsistencies compound security risks when agents have autonomous access to files and applications on a user’s device.

To address these concerns, Microsoft is implementing security principles centered on three core commitments: non-repudiation, confidentiality, and authorization.

The company emphasizes that all agent actions must be observable and distinguishable from user actions, with tamper-evident audit logging capabilities.
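One common way to make a log tamper-evident is a hash chain, in which every entry's hash covers the hash of the entry before it. The sketch below is only an illustration of that general technique, not Microsoft's implementation; the function names, the `actor` field, and the record layout are all assumptions made for the example.

```python
import hashlib
import json

# Illustrative only: a hash-chained log, not how Windows records agent actions.
GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """Hash the entry body in a stable (sorted-key) JSON form."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_entry(log, actor, action):
    """Append an entry whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"actor": actor, "action": action, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        body = {"actor": entry["actor"], "action": entry["action"], "prev": entry["prev"]}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

Recording an `actor` on each entry is what keeps agent actions distinguishable from user actions, and changing any earlier entry breaks the hashes of every entry after it.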

Agents are restricted to operating under the principle of least privilege, prevented from exceeding the permissions of the user initiating them and explicitly barred from administrative rights.
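As a rough sketch of that rule, an agent's effective permissions can be modeled as the intersection of what it requests and what the initiating user holds, with administrative rights always removed. The permission names and the `effective_permissions` helper are hypothetical and do not correspond to any Windows API.

```python
# Illustrative only: permission names are made up for this example.
ADMIN_RIGHTS = frozenset({"admin"})


def effective_permissions(user_perms, requested):
    """Grant only what the user already holds, and never administrative rights."""
    return (set(requested) & set(user_perms)) - ADMIN_RIGHTS
```

Under this model, an agent that asks for more than the user has simply receives the smaller set, and an `admin` request is dropped even when the user is an administrator.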

The company is also implementing granular, time-bound access controls. Agents can only access sensitive information in specific, user-authorized contexts, such as when interacting with particular applications or websites.
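A time-bound, context-scoped grant could be modeled roughly as follows. This is a minimal sketch under stated assumptions: the `Grant` type, its fields, and `is_allowed` are hypothetical names chosen for illustration, and the check reflects only the general idea described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """Hypothetical record of one user-authorized access."""
    resource: str      # the sensitive item the user agreed to share
    context: str       # the application or website the grant applies to
    expires_at: float  # expiry time in seconds, on the same clock as `now`


def is_allowed(grants, resource, context, now):
    """Allow access only for a matching, unexpired grant in the same context."""
    return any(
        g.resource == resource and g.context == context and now < g.expires_at
        for g in grants
    )
```

Passing `now` in explicitly keeps the check easy to test; a real caller might supply `time.time()`.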

Critically, administrators and other privileged entities get no special access to an agent; only the account owner the agent represents does.

Phased Rollout and Continuous Evolution

Microsoft is adopting a deliberately cautious approach, starting with limited preview access to gather feedback before broader availability.

The company acknowledges that security in agentic AI contexts is not a one-time feature but rather a continuous commitment that evolves as capabilities mature.

The agent workspace is currently more efficient than full virtual machine solutions like Windows Sandbox while still providing security isolation and parallel execution capabilities.

The initial preview release reflects Microsoft’s recognition that agentic AI represents a fundamentally new security frontier.

As part of its Secure Future Initiative, the company is actively participating in security research partnerships to address these challenges.

The experimental agentic features setting is disabled by default, and enabling it requires administrative privileges.

Microsoft recommends that users carefully review security implications before activating agentic capabilities.

The feature remains in preview specifically to gather user feedback before adding more granular security and privacy controls for general availability.

As agentic AI capabilities become mainstream, Microsoft’s transparent approach to security risks demonstrates the industry’s need for careful, principled development of autonomous AI systems.
