Close to 12,000 valid secrets, including API keys and passwords, have been found in the Common Crawl dataset, which is used to train multiple artificial intelligence models.

The Common Crawl non-profit organization maintains a massive open-source repository containing petabytes of web data collected since 2008, which is free for anyone to use.

Because the dataset is so large, many artificial intelligence projects may rely on the archive, at least in part, to train large language models (LLMs), including those from OpenAI, DeepSeek, Google, Meta, Anthropic, and Stability.

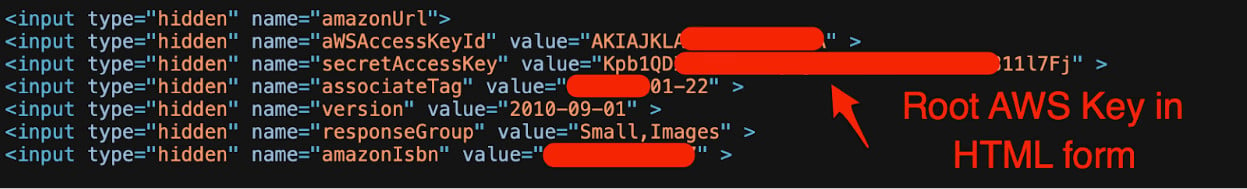

AWS root keys and Mailchimp API keys

Researchers at Truffle Security, the company behind the open-source TruffleHog scanner for sensitive data, found valid secrets after checking 400 terabytes of data from 2.67 billion web pages in the Common Crawl December 2024 archive.

They discovered 11,908 secrets that developers had hardcoded and that authenticate successfully, indicating that LLMs may be trained on insecure code.
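TruffleHog pairs pattern-based detection with verification against each provider. As a rough illustration of the detection step only, the Python sketch below flags strings that look like AWS access key IDs, Mailchimp API keys, and Slack webhook URLs. The patterns and function name are simplified, hypothetical stand-ins based on publicly known key formats, not TruffleHog's actual rules, and the sketch does not check whether a matched key is live.

```python
import re

# Hypothetical, simplified patterns based on publicly known key formats.
# Real scanners such as TruffleHog use more thorough detectors and then
# verify each match against the provider; this sketch only pattern-matches.
PATTERNS = {
    # AWS access key IDs: "AKIA" (long-term) or "ASIA" (temporary) + 16 chars
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Mailchimp API keys: 32 hex chars followed by a data-center suffix
    "mailchimp_api_key": re.compile(r"\b[0-9a-f]{32}-us[0-9]{1,2}\b"),
    # Slack incoming webhook URLs
    "slack_webhook": re.compile(
        r"https://hooks\.slack\.com/services/T[A-Z0-9]+/B[A-Z0-9]+/[A-Za-z0-9]+"
    ),
}


def find_candidate_secrets(page_text: str) -> list[tuple[str, str]]:
    """Return (secret_type, matched_text) pairs found in raw HTML/JS text."""
    hits = []
    for name, pattern in PATTERNS.items():
        for match in pattern.findall(page_text):
            hits.append((name, match))
    return hits
```

For example, running `find_candidate_secrets` over a page whose inline script contains `"AKIAIOSFODNN7EXAMPLE"` (AWS's documented example key ID) would report it as an `aws_access_key_id` candidate; a real scanner would then try to authenticate with it before calling it valid.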

It should be noted that LLM training data is not used in raw form; it goes through a pre-processing stage that cleans it and filters out unnecessary content such as irrelevant, duplicate, harmful, or sensitive information.

Despite such efforts, confidential data is difficult to remove, and the process offers no guarantee of stripping such a large dataset of all personally identifiable information (PII), financial data, medical records, and other sensitive content.

After analyzing the scanned data, Truffle Security found valid API keys for Amazon Web Services (AWS), Mailchimp, and WalkScore.

source: Truffle Security

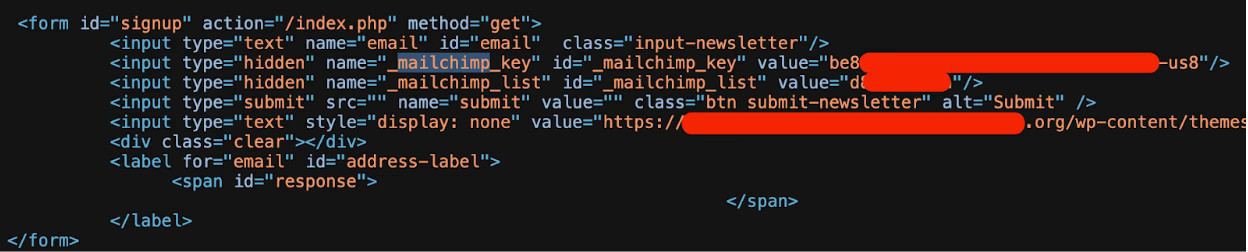

Overall, TruffleHog identified 219 distinct secret types in the Common Crawl dataset, the most common being Mailchimp API keys.

“Nearly 1,500 unique Mailchimp API keys were hard coded in front-end HTML and JavaScript” – Truffle Security

The researchers explain that the developers’ mistake was hardcoding the keys into HTML forms and JavaScript snippets instead of using server-side environment variables.
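A minimal Python sketch of the server-side alternative: the browser submits only an email address to the site's own backend, which reads the key from an environment variable and builds the Mailchimp request itself, so the key never ships in HTML or JavaScript. The variable name `MAILCHIMP_API_KEY`, the function name, and the list ID are hypothetical. The endpoint and Basic-auth scheme reflect Mailchimp's Marketing API v3 as we understand it and should be checked against the official documentation. The sketch builds the request without sending it.

```python
import base64
import json
import os
import urllib.request


def build_subscribe_request(email: str, list_id: str) -> urllib.request.Request:
    """Build (but do not send) a server-side Mailchimp subscribe request.

    The API key comes from a server-side environment variable
    (hypothetical name), so it is never delivered to the browser.
    """
    api_key = os.environ["MAILCHIMP_API_KEY"]
    # Mailchimp keys end with a data-center suffix, e.g. "...-us21".
    data_center = api_key.rsplit("-", 1)[1]
    url = f"https://{data_center}.api.mailchimp.com/3.0/lists/{list_id}/members"
    body = json.dumps({"email_address": email, "status": "subscribed"}).encode()
    # HTTP Basic auth: arbitrary username, API key as the password.
    token = base64.b64encode(f"anystring:{api_key}".encode()).decode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
    )
```

The front-end form then posts to the site's own endpoint (for instance a hypothetical `/subscribe` route) rather than to Mailchimp directly, and rotating the key only requires changing the server's environment.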


An attacker could use these keys for malicious activity such as phishing campaigns and brand impersonation. Furthermore, leaking such secrets could lead to data exfiltration.

Another highlight of the report is the high reuse rate of the discovered secrets: 63% were present on multiple pages. In one extreme case, a WalkScore API key “appeared 57,029 times across 1,871 subdomains.”
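Reuse figures like these could be derived from scan results roughly as follows. This is a hypothetical Python sketch, not Truffle Security's method: it assumes the scan yields (secret, page URL) pairs and, for each distinct secret, counts its total occurrences and the distinct hostnames (subdomains) serving it, along with the share of secrets found on more than one page.

```python
from collections import Counter, defaultdict
from urllib.parse import urlsplit


def reuse_stats(findings: list[tuple[str, str]]):
    """Summarize secret reuse from hypothetical (secret, page_url) pairs.

    Returns (share_on_multiple_pages, occurrences, hosts_per_secret).
    """
    occurrences: Counter = Counter()
    pages: defaultdict = defaultdict(set)
    hosts: defaultdict = defaultdict(set)
    for secret, url in findings:
        occurrences[secret] += 1          # every sighting counts
        pages[secret].add(url)            # distinct pages
        hosts[secret].add(urlsplit(url).hostname)  # distinct (sub)domains
    distinct = len(occurrences)
    multi_page = sum(1 for urls in pages.values() if len(urls) > 1)
    share = multi_page / distinct if distinct else 0.0
    return share, occurrences, {s: len(h) for s, h in hosts.items()}
```

Counting occurrences and hostnames separately mirrors the distinction in the WalkScore example, where one key appeared far more often than the number of subdomains it was found on.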

The researchers also found one webpage with 17 unique live Slack webhooks, which should be kept secret because they allow apps to post messages into Slack.

“Keep it secret, keep it safe. Your webhook URL contains a secret. Don’t share it online, including via public version control repositories,” Slack warns.

Following the research, Truffle Security contacted impacted vendors and worked with them to revoke their users’ keys. “We successfully helped those organizations collectively rotate/revoke several thousand keys,” the researchers say.

Even if an artificial intelligence model was trained on archives older than the one the researchers scanned, Truffle Security’s findings serve as a warning that insecure coding practices could influence the LLM's behavior.