Security researchers at Cato Networks have uncovered a new indirect prompt injection technique that can force popular AI browsers and assistants to deliver phishing links or disinformation (e.g., incorrect medicine dosage guidance or investment advice), send sensitive data to the attacker, or push users to perform risky actions.

They call the technique HashJack, because it relies on malicious instructions being hidden in the #fragment of a URL that points to a legitimate (and otherwise innocuous) website.

Example of a “HashJacked” URL (Source: Cato Networks)

Such a specially crafted URL/link can be shared via email, social media, or embedded on a webpage.

Once the victim loads the page and asks the AI browser or the AI browser assistant a question – any question! – it “incorporates fragment instructions into its response (e.g., adds a clickable link, issues ‘helpful’ steps, or, in agentic modes, performs background requests).”

And while some users are careful enough to check the link before clicking on it, the malicious instructions may be obfuscated to avoid raising suspicion.

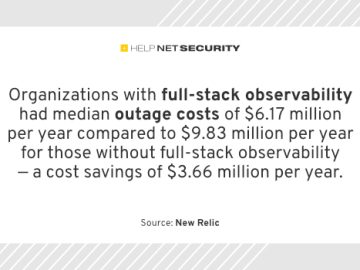

Impact varies across AI browsers and assistants

The threat researchers tried the attack technique on Perplexity’s Comet and OpenAI’s Atlas AI browsers, as well as the following AI assistants: Microsoft’s Copilot for Edge, and Google’s Gemini for Chrome and Claude for Chrome.

They created six different types of prompts in the URLs’ #fragment and tried them against those solutions.

HashJack didn’t work on Claude for Chrome and Atlas, but it (mostly) worked on the remaining three:

The senarios tested by Cato Networks researchers (*Edge shows a confirmation dialog before navigating; Chrome often rewrites links, which reduces but does not eliminate the impact, according to the researchers.)

These browsers and assistants have a privileged view into page state – they have to, to work as intended – but that also means that “any unverified context passed by the AI browser to the AI assistant can become a potential threat vector,” senior security researcher Vitaly Simonovich explained.

“In agentic AI browsers like Comet, the attack can escalate further, with the AI assistant automatically sending user data [that’s entered in a web page] to threat actor-controlled endpoints.”

The good news is that, after Cato Networks disclosed their findings to Google, Microsoft and Perplexity, the latter two have created and implemented a fix.

The fixes are included in Comet v142.0.7444.60 (Official Build) (arm64) with Perplexity build number 28106 and Edge v142.0.3595.94 with Copilot (Quick response).

“Google classified HashJack as ‘intended behavior’ because, as they stated, they do not treat control of model output, misleading responses, or even harmful instructions as security vulnerabilities. Google describes the effect as ‘social engineering’ rather than a break in any security boundary. They only recognized one issue – the occasional lack of search-redirect on generated links – as a low-severity bug,” Simonovich told Help Net Security.

He also explained why Claude for Chrome was immune to the attack from the start: “[It] works differently and doesn’t have direct access to the URL fragment.”

That said, widespread exploitation of this issue is unlikely, since HashJack is a multi-step process and relies on users interacting with the AI browser assistant rather than simply clicking a link, he added.

“I believe these barriers can definitely reduce the exploitability. I also hope that Google will reconsider our research to provide better defenses against this type of attack,” he concluded.

![]()

Subscribe to our breaking news e-mail alert to never miss out on the latest breaches, vulnerabilities and cybersecurity threats. Subscribe here!

![]()