Cybersecurity researchers have uncovered a critical vulnerability in the artificial intelligence supply chain that enables attackers to achieve remote code execution on major cloud platforms, including Microsoft Azure AI Foundry and Google Vertex AI, as well as in thousands of open-source projects.

The newly discovered attack method, termed “Model Namespace Reuse,” exploits a fundamental flaw in how AI platforms manage and trust model identifiers within the Hugging Face ecosystem.

The vulnerability stems from Hugging Face’s namespace management system, where models are identified using a two-part naming convention: Author/ModelName.

When organizations or authors delete their accounts from Hugging Face, their unique namespaces return to an available pool rather than becoming permanently reserved.

This creates an opportunity for malicious actors to register previously used namespaces and upload compromised models under trusted names, potentially affecting any system that references models by name alone.

Palo Alto Networks analysts identified this supply chain attack vector during an extensive investigation of AI platform security practices.

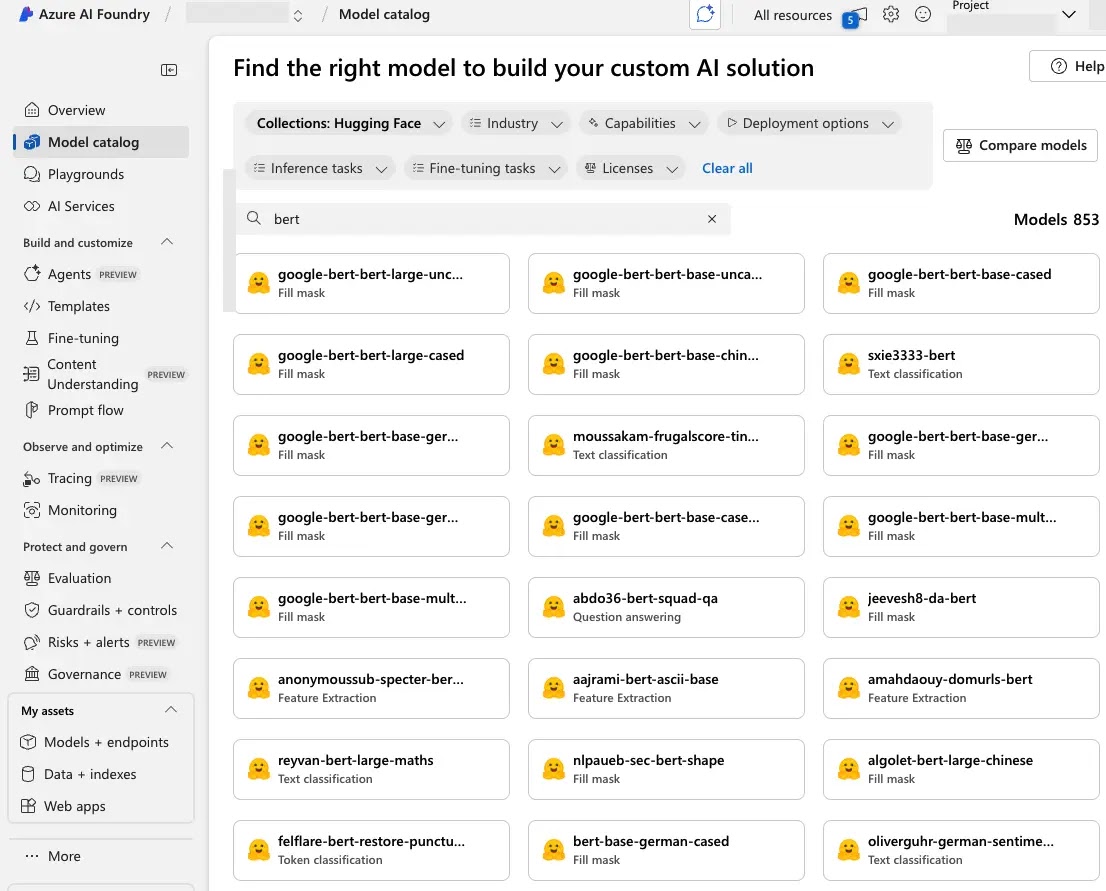

The research revealed that the vulnerability affects not only direct integrations with Hugging Face but also extends to major cloud AI services that incorporate Hugging Face models into their catalogs.

The attack’s scope is particularly concerning given the widespread adoption of AI models across enterprise environments and the implicit trust placed in model naming conventions.

The attack mechanism operates through two primary scenarios. In the first, when a model author’s account is deleted, the namespace becomes immediately available for re-registration.

In the second, a model's ownership is transferred to a new organization and the original author account is later deleted, which frees the old namespace even though existing code may still reference the model under it.

In both cases, malicious actors can exploit the namespace reuse to substitute legitimate models with compromised versions containing malicious payloads.
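The first scenario can be illustrated with a toy in-memory registry. This is only a sketch of the name-reuse idea: the `ToyHub` class, the account labels, and the content strings are all hypothetical and do not reflect how Hugging Face is actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class ToyHub:
    """Toy name-to-content registry (hypothetical, for illustration only)."""
    owners: dict = field(default_factory=dict)  # author namespace -> account id
    models: dict = field(default_factory=dict)  # "Author/ModelName" -> content label

    def register(self, author: str, account_id: str) -> None:
        if author in self.owners:
            raise ValueError(f"namespace {author!r} is taken")
        self.owners[author] = account_id

    def upload(self, author: str, account_id: str, name: str, content: str) -> None:
        if self.owners.get(author) != account_id:
            raise PermissionError("only the namespace owner can upload")
        self.models[f"{author}/{name}"] = content

    def delete_account(self, author: str) -> None:
        # The namespace goes back to the available pool, along with its models.
        del self.owners[author]
        self.models = {
            key: value
            for key, value in self.models.items()
            if not key.startswith(author + "/")
        }

    def resolve(self, model_id: str) -> str:
        # Consumers look models up by name alone; ownership is never checked.
        return self.models[model_id]


hub = ToyHub()
hub.register("AIOrg", "original-team")
hub.upload("AIOrg", "original-team", "Translator_v1", "legitimate weights")
before = hub.resolve("AIOrg/Translator_v1")

hub.delete_account("AIOrg")
hub.register("AIOrg", "someone-else")  # the freed namespace is claimed again
hub.upload("AIOrg", "someone-else", "Translator_v1", "different weights")
after = hub.resolve("AIOrg/Translator_v1")

print(before, "->", after)
```

The identifier `AIOrg/Translator_v1` never changes, yet what it resolves to does, which is why a consumer that trusts the name alone cannot tell the two uploads apart.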

Technical Implementation and Attack Vectors

The researchers demonstrated the vulnerability’s practical impact through controlled proof-of-concept attacks against Google Vertex AI and Microsoft Azure AI Foundry.

In their testing, they successfully registered abandoned namespaces and uploaded models embedded with reverse shell payloads.

The malicious code executed automatically when cloud platforms deployed these seemingly legitimate models, granting attackers access to underlying infrastructure.

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

# Vulnerable code pattern found in thousands of repositories
tokenizer = AutoTokenizer.from_pretrained("AIOrg/Translator_v1")
model = AutoModelForCausalLM.from_pretrained("AIOrg/Translator_v1")
```

The attack’s effectiveness lies in its exploitation of automated deployment processes. When platforms like Vertex AI’s Model Garden or Azure AI Foundry’s Model Catalog reference models by name, they inadvertently create persistent attack surfaces.
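One way a team might look for the name-only `from_pretrained` pattern in its own code is a small static check. The sketch below uses Python's standard `ast` module; the function name `find_unpinned_loads` and its heuristics are assumptions made for illustration, not a tool from the research. It flags only string-literal identifiers of the form `Author/ModelName` and can produce false positives for relative local paths such as `models/local`.

```python
import ast

LOADER_NAME = "from_pretrained"


def find_unpinned_loads(source: str) -> list[tuple[int, str]]:
    """Return (line, model_id) pairs for from_pretrained calls that pass an
    'Author/ModelName' string literal but no `revision` keyword."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if not (isinstance(func, ast.Attribute) and func.attr == LOADER_NAME):
            continue
        if not node.args:
            continue
        first = node.args[0]
        if not (isinstance(first, ast.Constant) and isinstance(first.value, str)):
            continue  # identifiers built at runtime are out of scope here
        model_id = first.value
        # Only two-part hub-style names; skip obvious local paths.
        if model_id.count("/") != 1 or model_id.startswith((".", "/")):
            continue
        if any(keyword.arg == "revision" for keyword in node.keywords):
            continue  # already pinned to some revision
        findings.append((node.lineno, model_id))
    return sorted(findings)


SAMPLE = '''
from transformers import AutoTokenizer
tokenizer = AutoTokenizer.from_pretrained("AIOrg/Translator_v1")
'''

for line, model_id in find_unpinned_loads(SAMPLE):
    print(f"line {line}: {model_id} is loaded without a pinned revision")
```

A check like this only finds literal identifiers in Python source; model names that come from configuration files or environment variables would need a separate review.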

The researchers documented gaining access to dedicated containers with elevated permissions within Google Cloud Platform and Azure environments, demonstrating the severity of potential breaches.

Organizations can mitigate this risk by pinning model versions, using the revision parameter to lock each model to a specific commit, and by keeping critical AI assets in storage they control rather than fetching them by name at deployment time.
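As a minimal sketch of the pinning mitigation, the loader below passes a `revision` to `from_pretrained` and refuses anything that is not a full commit hash. The helper names (`is_full_commit_hash`, `load_pinned`) are hypothetical, the model name is the article's example, and the hash is a placeholder rather than a real commit.

```python
import re

# Hypothetical values: the model name comes from the article's example and
# the hash below is a placeholder, not a real revision.
MODEL_ID = "AIOrg/Translator_v1"
PINNED_REVISION = "0123456789abcdef0123456789abcdef01234567"

_FULL_SHA = re.compile(r"[0-9a-f]{40}")


def is_full_commit_hash(revision: str) -> bool:
    """True only for a full 40-character hexadecimal commit hash.

    Branch names and tags are rejected because they can later point
    at different content.
    """
    return _FULL_SHA.fullmatch(revision) is not None


def load_pinned(model_id: str, revision: str):
    """Load the tokenizer and model at one exact commit."""
    if not is_full_commit_hash(revision):
        raise ValueError(f"revision must be a full commit hash, got {revision!r}")
    # Imported lazily so the validation helper works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, revision=revision)
    model = AutoModelForCausalLM.from_pretrained(model_id, revision=revision)
    return tokenizer, model
```

Calling `load_pinned(MODEL_ID, PINNED_REVISION)` would then request that exact commit. If the namespace has been re-registered, a repository created by a different owner should not contain the pinned commit, so the expectation is that the load fails instead of silently pulling different content.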

The discovery underscores the urgent need for comprehensive security frameworks addressing AI supply chain vulnerabilities as organizations increasingly integrate machine learning capabilities into production systems.
