A critical vulnerability in NVIDIA’s Merlin Transformers4Rec library (CVE-2025-23298) enables unauthenticated attackers to achieve remote code execution (RCE) with root privileges via unsafe deserialization in the model checkpoint loader.

The discovery underscores the persistent security risks inherent in ML/AI frameworks’ reliance on Python’s pickle serialization.

NVIDIA Merlin Vulnerability

Trend Micro’s Zero Day Initiative (ZDI) stated that the vulnerability resides in the load_model_trainer_states_from_checkpoint function, which uses PyTorch’s torch.load() without safety parameters. Under the hood, torch.load() leverages Python’s pickle module, allowing arbitrary object deserialization.

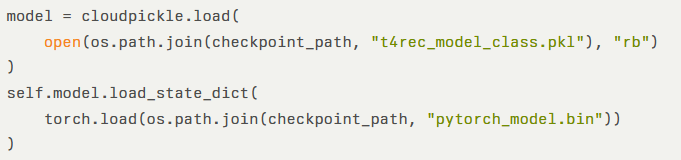

Attackers can embed malicious code in a crafted checkpoint file, which executes as soon as the untrusted pickle data is loaded. In the vulnerable implementation, cloudpickle loads the model class directly.

This approach grants attackers full control of the deserialization process. By defining a custom __reduce__ method, a malicious checkpoint can execute arbitrary system commands upon loading, e.g., calling os.system() to fetch and execute a remote script.
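The mechanism can be illustrated with a deliberately harmless sketch. This is not the researchers' proof of concept: the `Payload` class is hypothetical, and `os.getcwd` stands in for a dangerous callable such as `os.system`.

```python
import os
import pickle


class Payload:
    """Hypothetical class used only to illustrate __reduce__ (not from the advisory)."""

    def __reduce__(self):
        # pickle stores a reference to the callable plus its arguments.
        # On load, the unpickler calls os.getcwd() instead of rebuilding Payload.
        # An attacker would substitute a dangerous callable here.
        return (os.getcwd, ())


blob = pickle.dumps(Payload())

# Loading runs the callable chosen by whoever produced the bytes.
result = pickle.loads(blob)

# The loaded value is the callable's return value, not a Payload instance.
print(type(result).__name__, isinstance(result, Payload))
```

The point of the sketch is that the party who writes the pickle stream, not the party who loads it, decides which function runs during loading.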

The attack surface is vast: ML practitioners routinely share pre-trained checkpoints via public repositories or cloud storage. Production ML pipelines often run with elevated privileges, meaning a successful exploit not only compromises the model host but can also escalate to root-level access.

To demonstrate the flaw, researchers crafted a malicious checkpoint. Loading it via the vulnerable function triggers the embedded shell command before any model weights are restored, resulting in immediate RCE in the context of the ML service.

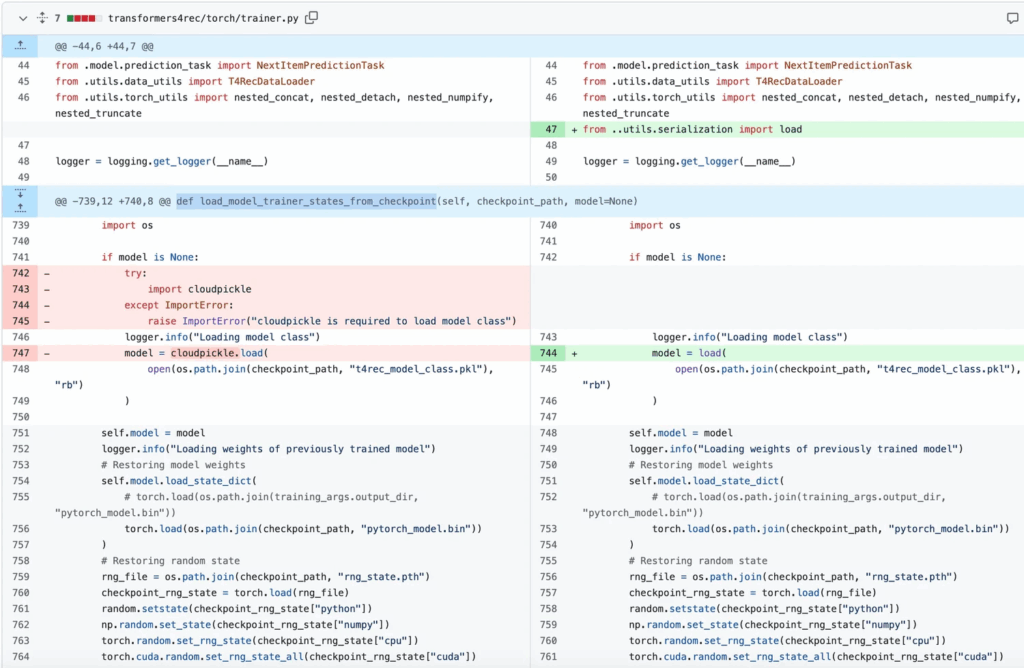

NVIDIA addressed the issue in PR #802 by replacing raw pickle calls with a custom load() function that whitelists approved classes.

The patched loader in serialization.py enforces input validation, and developers are encouraged to use weights_only=True in torch.load() to avoid untrusted object deserialization.

Developers must never use pickle on untrusted data and should restrict deserialization to known, safe classes.
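One way to restrict deserialization to known classes is to override `find_class` on `pickle.Unpickler`, a pattern described in the Python documentation. The sketch below is illustrative only and is not NVIDIA's patched loader; the names `ALLOWED`, `AllowlistUnpickler`, and `safe_loads` are assumptions made for this example.

```python
import io
import os
import pickle
from collections import OrderedDict

# Hypothetical allowlist: a real loader would list exactly the classes
# its checkpoints are expected to contain.
ALLOWED = {("collections", "OrderedDict")}


class AllowlistUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called for every global the pickle stream references.
        if (module, name) in ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def safe_loads(data: bytes):
    """Deserialize data, rejecting any global that is not on the allowlist."""
    return AllowlistUnpickler(io.BytesIO(data)).load()


class Payload:
    """Harmless stand-in for a malicious object (illustrative only)."""

    def __reduce__(self):
        return (os.getcwd, ())


# An allowlisted type loads normally.
state = safe_loads(pickle.dumps(OrderedDict(a=1)))

# A stream that references any other callable is rejected before it runs.
try:
    safe_loads(pickle.dumps(Payload()))
    blocked = False
except pickle.UnpicklingError:
    blocked = True
```

An allowlist is only as safe as the entries on it, so it should stay as small as the checkpoint format permits.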

Alternative formats—such as Safetensors or ONNX—offer safer model persistence. Organizations should enforce cryptographic signing of model files, sandbox deserialization processes, and include ML pipelines in regular security audits.
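As a rough sketch of the integrity check, a loader can refuse any model file whose authentication tag does not match. The example uses an HMAC with a shared secret as a simpler stand-in for public-key signatures; the function names and the key handling are assumptions, not part of any NVIDIA tooling.

```python
import hashlib
import hmac


def sign(data: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the model bytes."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify(data: bytes, key: bytes, expected_tag: str) -> bool:
    """Check the tag in constant time before the bytes reach any deserializer."""
    return hmac.compare_digest(sign(data, key), expected_tag)


# Hypothetical key and contents; a real key would come from a secrets store.
key = b"example-shared-secret"
model_bytes = b"model weights"
tag = sign(model_bytes, key)

ok = verify(model_bytes, key, tag)
tampered = verify(model_bytes + b"!", key, tag)
```

Verification has to happen before deserialization, since a malicious pickle runs its payload during loading.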

| Risk Factors | Details |
| --- | --- |
| Affected Products | NVIDIA Merlin Transformers4Rec ≤ v1.5.0 |
| Impact | Remote code execution as root |
| Exploit Prerequisites | Attacker-supplied model checkpoint loaded via torch.load() |
| CVSS 3.1 Score | 9.8 (Critical) |

The broader community must advocate for security-first design principles and deprecate pickle-based mechanisms altogether.

Until pickle reliance is eliminated, similar vulnerabilities will persist. Vigilance, robust input validation, and a zero-trust mindset remain crucial to safeguarding production ML systems against supply-chain and RCE threats.
