One thing I’ve never liked about the whole MCP thing is that you have to build a server and host it yourself.

So ever since I first heard about MCP, I’ve been looking for a self-contained solution where I could just describe the functionality I want and have the infrastructure handled for me.

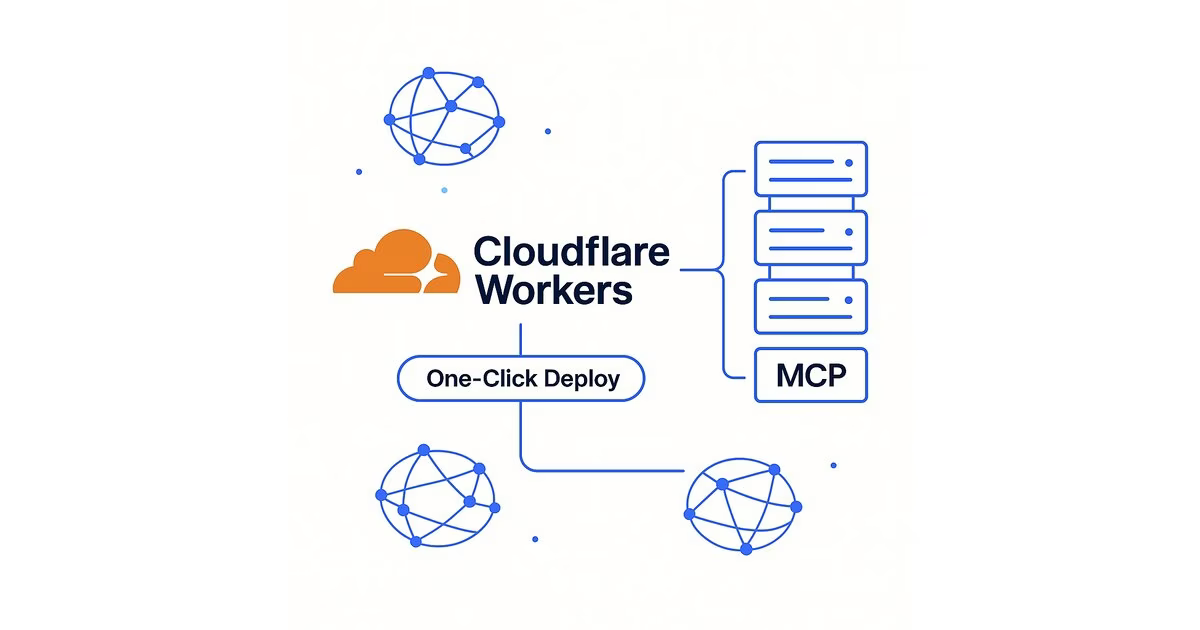

It turns out Cloudflare actually has a solution for this, and this is exactly what I love about Cloudflare. I’ve talked elsewhere about how they’re doing all sorts of one-off services really well and are basically eating the internet.

What are MCP servers?

Model Context Protocol (MCP) servers are a way to extend AI assistants with custom tools and data sources. They let you give your AI assistant access to specific capabilities—like querying databases, calling APIs, or performing specialized tasks. The problem is, traditionally you need to:

- Set up a server

- Handle authentication

- Manage scaling

- Deal with infrastructure

- Maintain uptime

This is a lot of overhead when you just want to add a simple capability to your AI workflow.
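For context, here is roughly what a server like this exchanges with an assistant. MCP messages are JSON-RPC 2.0; the method names `tools/list` and `tools/call` and the `content` array come from the MCP spec, while the helper functions below are hypothetical and only build plain objects:

```javascript
// Hypothetical helpers that build MCP-style JSON-RPC 2.0 messages.
// Method names ("tools/list", "tools/call") follow the MCP spec; helper names are made up.
let nextId = 1;

function toolsListRequest() {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/list", params: {} };
}

function toolsCallRequest(name, args) {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/call", params: { name, arguments: args } };
}

// A server answers with the same id and a result holding a list of typed content parts.
function textResult(id, text) {
  return { jsonrpc: "2.0", id, result: { content: [{ type: "text", text }] } };
}

const req = toolsCallRequest("analyze_website", { url: "https://example.com" });
const res = textResult(req.id, '{"title":"Example Domain"}');
console.log(JSON.stringify(req));
console.log(JSON.stringify(res));
```

The assistant never touches your infrastructure directly; it only sees the tool list and the text that comes back in `content`.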

Enter Cloudflare’s one-click solution

Cloudflare Workers provides the perfect platform for MCP servers because:

- No infrastructure management – Cloudflare handles all the scaling and distribution

- Global edge network – Your MCP server runs close to users everywhere

- Simple deployment – Push code and it’s live

- Pay-per-use pricing – No paying for idle servers

Building a working MCP server

Let’s build an actual MCP server that I can use. I’ll create a simple “website analyzer” that can fetch and analyze any website’s content.
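Before the Worker plumbing, here is a sketch of the analysis step on its own, as a pure function that takes already-fetched HTML. The name `analyzeHtml` is hypothetical, and regex counting is a rough heuristic rather than real HTML parsing:

```javascript
// Sketch of the analyzer's core logic (hypothetical name; heuristic regexes, not a parser).
function analyzeHtml(html) {
  // [\s\S]*? lets the title span line breaks; [^>]* tolerates attributes on <title>.
  const titleMatch = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
  const title = titleMatch ? titleMatch[1].trim() : "No title found";
  // Count opening <a ...> and <img ...> tags (case-insensitive).
  const links = (html.match(/<a\s/gi) || []).length;
  const images = (html.match(/<img\s/gi) || []).length;
  return { title, stats: { links, images, contentLength: html.length } };
}

const sample = `<html><head><title>
  Demo Page
</title></head><body>
<a href="/one">One</a> <A HREF="/two">Two</A>
<img src="x.png"> <img alt="y" src="y.png"/>
</body></html>`;

console.log(analyzeHtml(sample));
```

Keeping this logic separate from `fetch` makes it easy to test without network access.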

Step 1: Set up the project

```bash
mkdir cloudflare-mcp-analyzer
cd cloudflare-mcp-analyzer
bun init -y
bun add @modelcontextprotocol/sdk wrangler
```

Step 2: Create the MCP server

Create src/index.js:

```javascript
// src/index.js: a Cloudflare Worker exposing tool-listing and tool-calling endpoints
export default {
  async fetch(request, env, ctx) {
    const url = new URL(request.url);

    // CORS headers so browser-based clients can call the Worker
    const corsHeaders = {
      "Access-Control-Allow-Origin": "*",
      "Access-Control-Allow-Methods": "GET, POST, OPTIONS",
      "Access-Control-Allow-Headers": "Content-Type",
    };

    // Helper: JSON response with the shared headers
    const json = (data, status = 200) =>
      new Response(JSON.stringify(data, null, 2), {
        status,
        headers: { "Content-Type": "application/json", ...corsHeaders },
      });

    // CORS preflight
    if (request.method === "OPTIONS") {
      return new Response(null, { headers: corsHeaders });
    }

    // Root endpoint - server info
    if (url.pathname === "/") {
      return json({
        name: env.MCP_SERVER_NAME || "website-analyzer",
        version: env.MCP_SERVER_VERSION || "1.0.0",
        description: "Website analysis MCP server",
        endpoints: ["/tools", "/call"],
      });
    }

    // List available tools
    if (url.pathname === "/tools") {
      return json({
        tools: [
          {
            name: "analyze_website",
            description: "Analyze a website and extract key information",
            inputSchema: {
              type: "object",
              properties: {
                url: { type: "string", description: "The URL to analyze" },
              },
              required: ["url"],
            },
          },
        ],
      });
    }

    // Execute tool
    if (url.pathname === "/call" && request.method === "POST") {
      let body;
      try {
        body = await request.json();
      } catch {
        return json({ error: "Request body must be valid JSON" }, 400);
      }
      const { name, arguments: args = {} } = body;

      if (name !== "analyze_website") {
        return json({ content: [{ type: "text", text: `Unknown tool: ${name}` }], isError: true }, 400);
      }

      try {
        const response = await fetch(args.url);
        const html = await response.text();

        // Extract basic info with simple regexes (a heuristic, not a full HTML parser)
        const titleMatch = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
        const title = titleMatch ? titleMatch[1].trim() : "No title found";
        const linkCount = (html.match(/<a\s/gi) || []).length;
        const imageCount = (html.match(/<img\s/gi) || []).length;

        return json({
          content: [
            {
              type: "text",
              text: JSON.stringify(
                {
                  url: args.url,
                  title,
                  stats: { links: linkCount, images: imageCount, contentLength: html.length },
                },
                null,
                2,
              ),
            },
          ],
        });
      } catch (error) {
        // Report fetch failures as tool output instead of crashing the Worker
        return json({ content: [{ type: "text", text: `Error: ${error.message}` }], isError: true });
      }
    }

    return new Response("Not Found", { status: 404, headers: corsHeaders });
  },
};
```

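The handler above uses its own REST-style routes (`/tools`, `/call`). As far as I know, MCP clients instead send JSON-RPC 2.0 messages (`initialize`, `tools/list`, `tools/call`) over a transport such as Streamable HTTP, so some clients may not recognize these routes directly. Here is a minimal sketch of a dispatcher for those messages; the `dispatch` and `stubRunTool` names, the protocol version string, and the stub tool are assumptions, and it leaves out sessions, batching, and streaming:

```javascript
// Minimal sketch of an MCP-style JSON-RPC 2.0 dispatcher (hypothetical; not a full transport).
// Assumption: protocolVersion "2025-03-26"; check the spec for what your client negotiates.
const TOOLS = [
  {
    name: "analyze_website",
    description: "Analyze a website and extract key information",
    inputSchema: {
      type: "object",
      properties: { url: { type: "string", description: "The URL to analyze" } },
      required: ["url"],
    },
  },
];

// runTool is injected so the dispatcher can be exercised without network access.
async function dispatch(message, runTool) {
  const { id, method, params = {} } = message;
  // Notifications carry no id and get no reply.
  if (id === undefined) return null;
  const ok = (result) => ({ jsonrpc: "2.0", id, result });
  switch (method) {
    case "initialize":
      return ok({
        protocolVersion: "2025-03-26",
        capabilities: { tools: {} },
        serverInfo: { name: "website-analyzer", version: "1.0.0" },
      });
    case "tools/list":
      return ok({ tools: TOOLS });
    case "tools/call":
      try {
        const text = await runTool(params.name, params.arguments || {});
        return ok({ content: [{ type: "text", text }] });
      } catch (err) {
        // Tool failures go inside the result with isError, not as protocol errors.
        return ok({ content: [{ type: "text", text: `Error: ${err.message}` }], isError: true });
      }
    default:
      return { jsonrpc: "2.0", id, error: { code: -32601, message: `Method not found: ${method}` } };
  }
}

// Stub standing in for the real fetch-and-analyze logic.
const stubRunTool = async (name, args) => {
  if (name !== "analyze_website") throw new Error(`Unknown tool: ${name}`);
  return JSON.stringify({ url: args.url, title: "stub" });
};

dispatch(
  { jsonrpc: "2.0", id: 1, method: "tools/call", params: { name: "analyze_website", arguments: { url: "https://example.com" } } },
  stubRunTool,
).then((reply) => console.log(JSON.stringify(reply)));
```

To wire this into the Worker, you could route POST requests on a single path through `dispatch(await request.json(), runTool)` and return the reply as JSON, or an empty `202` response when the reply is `null` (a notification).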

Step 3: Configure for Cloudflare

Create wrangler.toml:

```toml
name = "mcp-website-analyzer"
main = "src/index.js"
compatibility_date = "2024-01-01"

[vars]
MCP_SERVER_NAME = "website-analyzer"
MCP_SERVER_VERSION = "1.0.0"
```
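Wrangler passes each `[vars]` entry to the Worker as a property on the `env` argument, which is why the Step 2 handler reads `env.MCP_SERVER_NAME` with a fallback. A small sketch of that pattern, with a plain object standing in for `env` (the `serverInfo` name is hypothetical):

```javascript
// Build the root-endpoint payload from Worker env vars, falling back to defaults
// when a var is missing (for example, if wrangler.toml has no [vars] section).
function serverInfo(env = {}) {
  return {
    name: env.MCP_SERVER_NAME || "website-analyzer",
    version: env.MCP_SERVER_VERSION || "1.0.0",
    description: "Website analysis MCP server",
    endpoints: ["/tools", "/call"],
  };
}

// A plain object mimicking what the [vars] block above provides at runtime.
const fakeEnv = { MCP_SERVER_NAME: "website-analyzer", MCP_SERVER_VERSION: "1.0.0" };
console.log(serverInfo(fakeEnv));
console.log(serverInfo({ MCP_SERVER_VERSION: "2.0.0" }).version);
```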

Step 4: Test locally (optional)

```bash
# Run the Worker locally (bunx uses the wrangler installed in Step 1)
bunx wrangler dev
```
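With the dev server running (it listens on `http://localhost:8787` by default), a quick smoke test can hit both endpoints. A minimal sketch, assuming Node 18+ or Bun for the global `fetch`; `smokeTest` is a hypothetical name, and `fetchFn` is injectable so the logic can be checked without a live server:

```javascript
// Smoke-test the REST-style endpoints from Step 2 against a running Worker.
async function smokeTest(baseUrl = "http://localhost:8787", fetchFn = fetch) {
  // 1. The tool list should include analyze_website.
  const toolsRes = await fetchFn(`${baseUrl}/tools`);
  const { tools } = await toolsRes.json();
  if (!Array.isArray(tools) || !tools.some((t) => t.name === "analyze_website")) {
    throw new Error("analyze_website not listed");
  }

  // 2. Calling the tool should return a text part containing JSON stats.
  const callRes = await fetchFn(`${baseUrl}/call`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ name: "analyze_website", arguments: { url: "https://example.com" } }),
  });
  const { content } = await callRes.json();
  const text = content[0].text;
  if (text.startsWith("Error:")) throw new Error(text);
  return JSON.parse(text);
}

// With `wrangler dev` running: smokeTest().then(console.log);
```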

Step 5: Deploy to Cloudflare

```bash
# Log in to Cloudflare
bunx wrangler login

# Deploy the Worker
bunx wrangler deploy
```

That’s it! Your MCP server is now live on Cloudflare’s global network.

Step 6: Connect to your AI assistant

Add it to your MCP client's configuration (the exact format varies by client):

```json
{
  "mcpServers": {
    "website-analyzer": {
      "url": "https://mcp-website-analyzer.YOUR-SUBDOMAIN.workers.dev",
      "description": "Analyzes websites and extracts key information"
    }
  }
}
```

Using with OpenCode or Claude Desktop

To use my public HTTPX MCP server (described below), or any other MCP server running on a Cloudflare Worker, with OpenCode or Claude Desktop:

For OpenCode

- Create or edit `.opencode/settings.json` in your project:

```json
{
  "mcpServers": {
    "httpx": {
      "url": "https://mcp-httpx-server.danielmiessler.workers.dev"
    }
  }
}
```

- Restart OpenCode. The tools will be available as:

- `mcp_httpx_httpx_scan` – Scan multiple URLs

- `mcp_httpx_httpx_tech_stack` – Get technology stack

For Claude Desktop

- Open Claude Desktop settings

- Go to the “Developer” tab

- Edit the MCP servers configuration:

```json
{
  "mcpServers": {
    "httpx": {
      "url": "https://mcp-httpx-server.danielmiessler.workers.dev"
    }
  }
}
```

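- Restart Claude Desktop

Depending on your Claude Desktop version, a `url` entry may not be picked up, since its config file has historically expected local servers launched with `command` and `args`. In that case, a local bridge such as the `mcp-remote` npm package can proxy a remote MCP server (for example, `"command": "npx", "args": ["mcp-remote", "<server URL>"]`).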

Testing the connection

Once connected, you can ask your AI assistant to:

- “Use httpx to check what technology stack danielmiessler.com uses”

- “Scan these domains for me: example.com, test.com”

- “What security headers does github.com have?”

The AI will automatically use the MCP server tools to fetch this information.

The beauty of this approach

What I love about this is:

- Zero infrastructure – No servers to manage, no scaling to worry about

- Global performance – Runs on Cloudflare’s edge network

- Simple pricing – Pay only for what you use

- Easy updates – Just push new code

This is exactly what I’ve been looking for—a way to extend AI capabilities without the infrastructure overhead.

Real Example: HTTPX MCP Server

I’ve created a working example that provides HTTP reconnaissance capabilities inspired by ProjectDiscovery’s httpx. It’s live at:

https://mcp-httpx-server.danielmiessler.workers.dev

This server provides two powerful tools:

httpx_scan

Quick HTTP scanning for multiple targets at once:

```json
{
  "targets": ["example.com", "test.com", "demo.com"]
}
```
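The source for this server isn't shown here, so the following is only a hypothetical sketch of how a multi-target scan like `httpx_scan` could work inside a Worker: normalize each target to a URL, request it, and record the status, final URL, and `server` header. The `normalizeTarget` and `scanTargets` names are made up, and `fetchFn` is injectable for testing:

```javascript
// Hypothetical sketch of a multi-target HTTP scan (not the actual httpx_scan source).
function normalizeTarget(target) {
  // Bare hostnames get an https:// scheme; full URLs pass through unchanged.
  return /^https?:\/\//i.test(target) ? target : `https://${target}`;
}

async function scanTargets(targets, fetchFn = fetch) {
  // Fetch all targets concurrently; one failing host shouldn't sink the whole scan.
  const outcomes = await Promise.allSettled(
    targets.map(async (target) => {
      const url = normalizeTarget(target);
      const res = await fetchFn(url, { redirect: "follow" });
      return {
        target,
        status: res.status,
        finalUrl: res.url || url,
        server: res.headers.get("server"),
      };
    }),
  );
  // Turn rejected fetches into per-target error entries.
  return outcomes.map((outcome, i) =>
    outcome.status === "fulfilled" ? outcome.value : { target: targets[i], error: String(outcome.reason) },
  );
}
```

Workers cap the number of outbound subrequests per invocation (the limit depends on your plan), so a long target list may need batching.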

httpx_tech_stack

Comprehensive technology stack detection that analyzes:

- Server software (Nginx, Apache, Cloudflare)

- Backend technologies (PHP, ASP.NET, Express.js)

- Frontend frameworks (React, Vue.js, Angular)

- CMS platforms and site generators (WordPress, Ghost, VitePress)

- Analytics tools (Google Analytics, Plausible)

- Security headers (HSTS, CSP, X-Frame-Options)

- CDN providers (Cloudflare, CloudFront, Fastly)

Example usage:

```json
{
  "target": "danielmiessler.com"
}
```
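Detection like this usually comes down to signature matching on response headers and HTML. Here is a rough sketch with a deliberately tiny, hypothetical signature table (real detectors use far larger rule sets); `SIGNATURES` and `detectTech` are made-up names, and the markers are heuristics rather than guarantees:

```javascript
// Hypothetical signature table: each entry checks headers and/or HTML for a telltale marker.
const SIGNATURES = [
  { name: "Cloudflare", category: "CDN", test: (h) => h["server"] === "cloudflare" || "cf-ray" in h },
  { name: "Nginx", category: "Server", test: (h) => /nginx/i.test(h["server"] || "") },
  { name: "Express.js", category: "Backend", test: (h) => /express/i.test(h["x-powered-by"] || "") },
  { name: "WordPress", category: "CMS", test: (h, html) => html.includes("/wp-content/") },
  // Rough hints only: data-reactroot (older React) or Next.js's __NEXT_DATA__ script.
  { name: "React", category: "Frontend", test: (h, html) => /data-reactroot|__NEXT_DATA__/.test(html) },
  { name: "Google Analytics", category: "Analytics", test: (h, html) => /googletagmanager\.com\/gtag|google-analytics\.com/.test(html) },
];

const SECURITY_HEADERS = ["strict-transport-security", "content-security-policy", "x-frame-options"];

// headers: plain object with lowercase keys; html: response body text.
function detectTech(headers, html) {
  const detected = SIGNATURES
    .filter((s) => s.test(headers, html))
    .map(({ name, category }) => ({ name, category }));
  // Record presence or absence of each security header.
  const security = Object.fromEntries(SECURITY_HEADERS.map((k) => [k, k in headers]));
  return { detected, security };
}
```

Inside a Worker, `Object.fromEntries(response.headers)` gives a plain object with lowercase header names, which matches what `detectTech` expects.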

The entire implementation is a single JavaScript file that runs on Cloudflare’s edge network, providing instant global availability without managing servers.

Summary

- MCP servers traditionally require managing your own infrastructure

- Cloudflare Workers eliminates this overhead with one-click deployment

- You can focus on functionality while Cloudflare handles the backend

- The example website analyzer shows how simple it can be

- This approach makes MCP servers accessible to everyone, not just infrastructure experts