New AI products are coming onto the market faster than we have seen in any previous technology revolution. Free access to open source, and the right to use it in AI software models, has allowed companies to prototype AI products and bring them to market more cheaply than ever and at hypersonic speed.

We started only a year ago with chatbots and uncanny low-quality images; now, AI is being rapidly integrated into new and more sensitive sectors like healthcare, insurance, transport, human resources, and IT operations.

What those AI companies often ignore, and even hide from buyers and users, is that the speed of new products coming to market is due to a lack of security diligence on their part. Many of the underlying open-source projects are unvetted for the purpose of AI. Given the massive financial benefits corporations receive by leveraging open source in AI, it is in their best interest to contribute up front to community efforts and to the foundational security of the open-source components they depend on.

Security threats to AI products

According to the 2024 AI Index report, the number of AI patent grants increased by 62.7% between 2021 and 2022, and that counts only the patents granted. Companies are engaged in a frantic race to the top as products are quickly launched to beat competitors to market and to win the large contracts waiting for those who can promise the best AI products now.

Using open source is the only way to prototype new AI products at this rate. By exploiting the free licensing of open-source software, companies can save money and time in developing their products while self-soothing by relying on the perceived safety of communally reviewed and used code.

However, public concern is growing, and rightfully so, as the AI industry ignores the growing risk of utilizing extensive open-source projects to handle sensitive data without the code benefiting from AI-specific security work.

In interviews conducted for the report Cybersecurity Risks to Artificial Intelligence for the UK Department for Science, Innovation and Technology, executives from various industries stated that no specialized AI data integrity tools are being used in their software development. Furthermore, they apply no internal security protocols to AI models beyond, in some exceptional cases, existing data leak prevention systems. The industry is failing to respond to the growing risks of utilizing open-source projects that have not yet been scrutinized for AI applications.

Most of these open-source libraries in AI development significantly predate the generative AI boom. Understandably, their developers at the time of inception didn’t consider how their projects may be used in AI products. This issue is now manifesting as components accepting untrusted inputs that are assumed to be safe, or error handling systems encountering error states that were never considered possible prior to the project being used for AI. Additionally, a NIST report mentions the looming threat of AI being misdirected, listing four different types of attacks that can misuse or misdirect the AI software itself.
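To make the untrusted-input problem concrete, here is a minimal sketch in Python of validating model-generated text before it reaches an older component that assumes its input is safe. Everything named here is hypothetical and chosen for illustration only: the function `parse_model_request`, the `action` and `text` fields, the allowed actions, and the size limit are assumptions, not part of any real library or of the reports cited above.

```python
import json

# Hypothetical limits, chosen only for this illustration.
MAX_PAYLOAD_BYTES = 4096
ALLOWED_ACTIONS = {"summarize", "translate"}
ALLOWED_FIELDS = {"action", "text"}


class UntrustedInputError(ValueError):
    """Raised when model-generated input fails validation."""


def parse_model_request(raw: str) -> dict:
    """Validate a model-generated JSON request before passing it downstream."""
    # Reject oversized payloads before doing any parsing work.
    if len(raw.encode("utf-8")) > MAX_PAYLOAD_BYTES:
        raise UntrustedInputError("payload too large")

    # Treat parser failures, including deep nesting, as ordinary rejections
    # rather than error states the caller never expected.
    try:
        data = json.loads(raw)
    except (json.JSONDecodeError, RecursionError) as exc:
        raise UntrustedInputError("not valid JSON") from exc

    if not isinstance(data, dict):
        raise UntrustedInputError("expected a JSON object")

    # Refuse fields the downstream component was never designed to see.
    unexpected = set(data) - ALLOWED_FIELDS
    if unexpected:
        raise UntrustedInputError(f"unexpected fields: {sorted(unexpected)}")

    action = data.get("action")
    text = data.get("text")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise UntrustedInputError("action not permitted")
    if not isinstance(text, str):
        raise UntrustedInputError("text must be a string")

    # Return only the validated fields.
    return {"action": action, "text": text}
```

The design choice in this sketch is to allow only what is explicitly expected and to convert every parsing failure into one well-defined error, so the older component behind it never encounters input shapes its authors did not plan for.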

Because these projects have not been vetted or tested specifically for use in and with AI, their attack surface has changed without review, which in turn changes the threats that you need to consider when using open-source projects for AI.

Further threatening the security of these projects are new classes of bugs that are AI-specific and need to be considered across all AI-relevant projects. Since AI has many applications and private corporations adhere to different security rules for their models, there is no universal or shared understanding between these corporations as to what these new bug classes are or where they could be found.

Securing open-source projects that are the cornerstones of AI

These products are proprietary software, so the only part of their code that outsiders can review completely is the open-source software they rely on. Anything less provides only a limited insight into a portion of the security health of the software. While extensive research is ongoing to understand new threats in AI applications, OWASP has extended its guidance with a Top 10 list that educates on large language model application vulnerabilities.
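A review of the open-source software a product relies on usually begins with an inventory. As a rough sketch, and assuming a Python-based product, the standard library's `importlib.metadata` module can list the packages installed in the current environment. The helper name `list_dependencies` is hypothetical, and a list like this is only a starting point for an audit, not a security assessment in itself.

```python
from importlib import metadata


def list_dependencies() -> list[tuple[str, str]]:
    """Return sorted (name, version) pairs for installed distributions."""
    found = set()
    for dist in metadata.distributions():
        # Some broken installs lack metadata fields, so read them defensively.
        name = dist.metadata.get("Name")
        version = dist.metadata.get("Version", "unknown")
        if name:
            found.add((name, version))
    # Sort case-insensitively so the inventory is stable across runs.
    return sorted(found, key=lambda item: (item[0].lower(), item[1]))


if __name__ == "__main__":
    for name, version in list_dependencies():
        print(f"{name}=={version}")
```

Running the script prints one `name==version` line per installed package, which can then be compared against the projects a team has actually vetted for AI use.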

To generate impactful change in open-source AI security, private firms utilizing open-source projects must invest time and resources into supporting the security of that software, especially in cases where the project’s risk profile has changed with the advent of AI.

By funding independent developers’ time to work on open source, sponsoring maintainers so they can dedicate more hours to a project, or sponsoring security audits, we can improve the security of the open-source projects that are the cornerstones of AI. By working with the existing open-source ecosystem to fund security work that directly and quickly impacts projects, AI companies can not only support their own interests but also extend the impact of their funding by earning goodwill in the space.

Making deep and lasting positive change for security universally will require collaboration across industry participants, for ease and financial gain and also to avoid further oversight by governmental organizations in both the open-source and private sectors.

For example, in a report from the U.S. Department of the Treasury, researchers suggest the creation of a comprehensive means of communication in the financial sector around AI software through a universal AI lexicon. Such an effort could result in deeper conversations around AI between invested parties able to articulate AI software behavior.

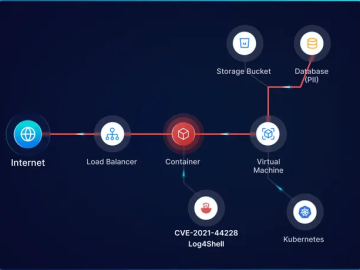

Companies can organize with foundations or other firms and cost-split security efforts to achieve this and to improve overall security health. This joint effort could also develop a unified front on AI security with global organizations to reduce systemic risks and improve the overall security landscape. Through concerted, proactive efforts, the industry could reduce the risk of the next “Log4Shell” in AI and avoid a billion-dollar security disaster that could release the sensitive data of users and AI models across many sectors. That lasting and pervasive change starts with investing in open-source security.

Conclusion

As companies avoid the time-consuming and costly hurdles of AI development by leveraging open-source technologies, they forgo important security checks while introducing countless vulnerabilities to the market.

This is an imminent threat that companies need to correct immediately to prevent monumental disasters. Making a sustainable and effective impact requires funding, something that the AI industry has shown it already has, with billions invested in the sector. Some foundations and other organizations already allow companies to cost-split security efforts to achieve higher security health for the projects they utilize.

This joint effort could also develop a unified front on AI security with global organizations to reduce systemic risks, saving companies millions and potentially billions of dollars otherwise spent fixing exploited AI vulnerabilities. Invest in the security of your open-source infrastructure and prevent the next billion-dollar security incident.