CyberArk’s cybersecurity researchers have shared details on how the ChatGPT AI chatbot can be used to create a new strain of polymorphic malware.

According to a technical blog post by Eran Shimony and Omer Tsarfati, malware created with ChatGPT can evade security products and complicate mitigation efforts with minimal effort or investment from the attacker.

In addition, the bot can help create highly advanced malware that contains no malicious code of its own, which makes it hard to detect and mitigate. This is troubling, as hackers are already eager to use ChatGPT for malicious purposes.

What is Polymorphic Malware?

Polymorphic malware is malicious software that can change its code to evade detection by antivirus programs. It is a particularly dangerous threat because it can adapt quickly and spread before security measures detect it.

Polymorphic malware typically works by altering its appearance with each iteration, making it difficult for antivirus software to recognize.

Polymorphic malware typically relies on two techniques. First, the code mutates slightly each time it replicates, so each copy looks different from the last. Second, parts of the malicious code may be encrypted, which makes the malware harder for antivirus programs to analyze and detect.

This makes it difficult for traditional signature-based detection engines, which scan for known patterns associated with malicious software, to identify polymorphic threats and stop them from spreading.
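To illustrate why exact-match signatures struggle with code that changes form, here is a minimal Python sketch of a hypothetical hash-based signature check. The `KNOWN_BAD_HASHES` set and the harmless `print` snippets are stand-ins chosen for illustration, not real malware signatures; real antivirus engines use far richer signatures and behavioral heuristics.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of previously seen samples.
# The "sample" here is a harmless one-line script used only as a stand-in.
KNOWN_BAD_HASHES = {
    hashlib.sha256(b"print('hello')").hexdigest(),
}


def is_flagged(sample: bytes) -> bool:
    """Return True if the sample's SHA-256 digest matches a stored signature."""
    return hashlib.sha256(sample).hexdigest() in KNOWN_BAD_HASHES


original = b"print('hello')"
# A functionally equivalent variant: it prints the same text,
# but its bytes differ, so its hash differs too.
variant = b'print("hello")'

print(is_flagged(original))  # True
print(is_flagged(variant))   # False
```

A one-character change that does not alter behavior is enough to defeat an exact-match signature, which is the weakness that mutating code exploits at scale.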

ChatGPT and Polymorphic Malware

Explaining how the malware could be created, Shimony and Tsarfati wrote that the first step is bypassing the content filters that prevent the chatbot from generating malicious software. They achieved this by phrasing their requests in an authoritative tone.

The researchers asked the bot to perform the task under multiple constraints and instructed it to obey, after which they received functional code.

They further noted that the system did not apply its content filter when they accessed ChatGPT through the API rather than the web interface. The researchers could not explain why this happened, but it made their task easier, since the web version could not handle complex requests.

Shimony and Tsarfati then used the bot to mutate the original code and successfully created multiple unique variations of it.

“In other words, we can mutate the output on a whim, making it unique every time. Moreover, adding constraints like changing the use of a specific API call makes security products’ lives more difficult,” researchers wrote.

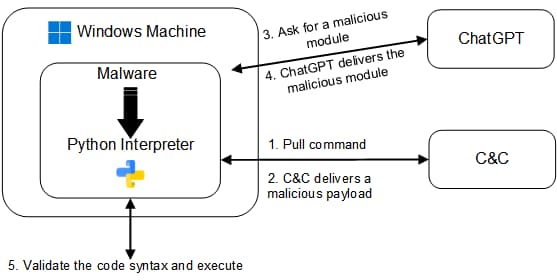

By continuously creating and mutating injectors, they were able to build a polymorphic program that was highly evasive and hard to detect. The researchers claim that by drawing on ChatGPT’s ability to generate different persistence techniques, malicious payloads, and anti-VM modules, attackers could develop a vast range of malware.

The researchers did not determine how the malware would communicate with its command-and-control (C2) server, but they were confident this could be done covertly. CyberArk plans to release some of the malware’s source code so that developers can learn from it.

“As we have seen, the use of ChatGPT’s API within malware can present significant challenges for security professionals. It’s important to remember, this is not just a hypothetical scenario but a very real concern,” the researchers concluded.
