My neighbor texted me the other day and said she’d pre-ordered two AI toys for her kids that supposedly used an LLM to dynamically generate content for talking to the child. This was super fascinating to me. I’ve always thought something like that seemed awesome as kids can ask questions about anything, and get contextual answers back.

She said it was from Bondu toys and asked if I could check if they were safe. She knows what I do, so she wanted my opinion before they arrived. I told her I’d take a look.

Later, I spent a few minutes poking around their infrastructure. My initial impression was solid. The premium price point suggested they actually cared about the product. They had a whole safety tab on their website and touted two certifications of some sort on their site. But given the fact that decent AI models hadn’t been out long, I knew this was a newer company, and there was a high likelihood of issues.

I saw that the conversations and toy management were handled through a mobile app, so I immediately reached out to my friend Joel (teknogeek) to help investigate the backend. Joel started the next day.

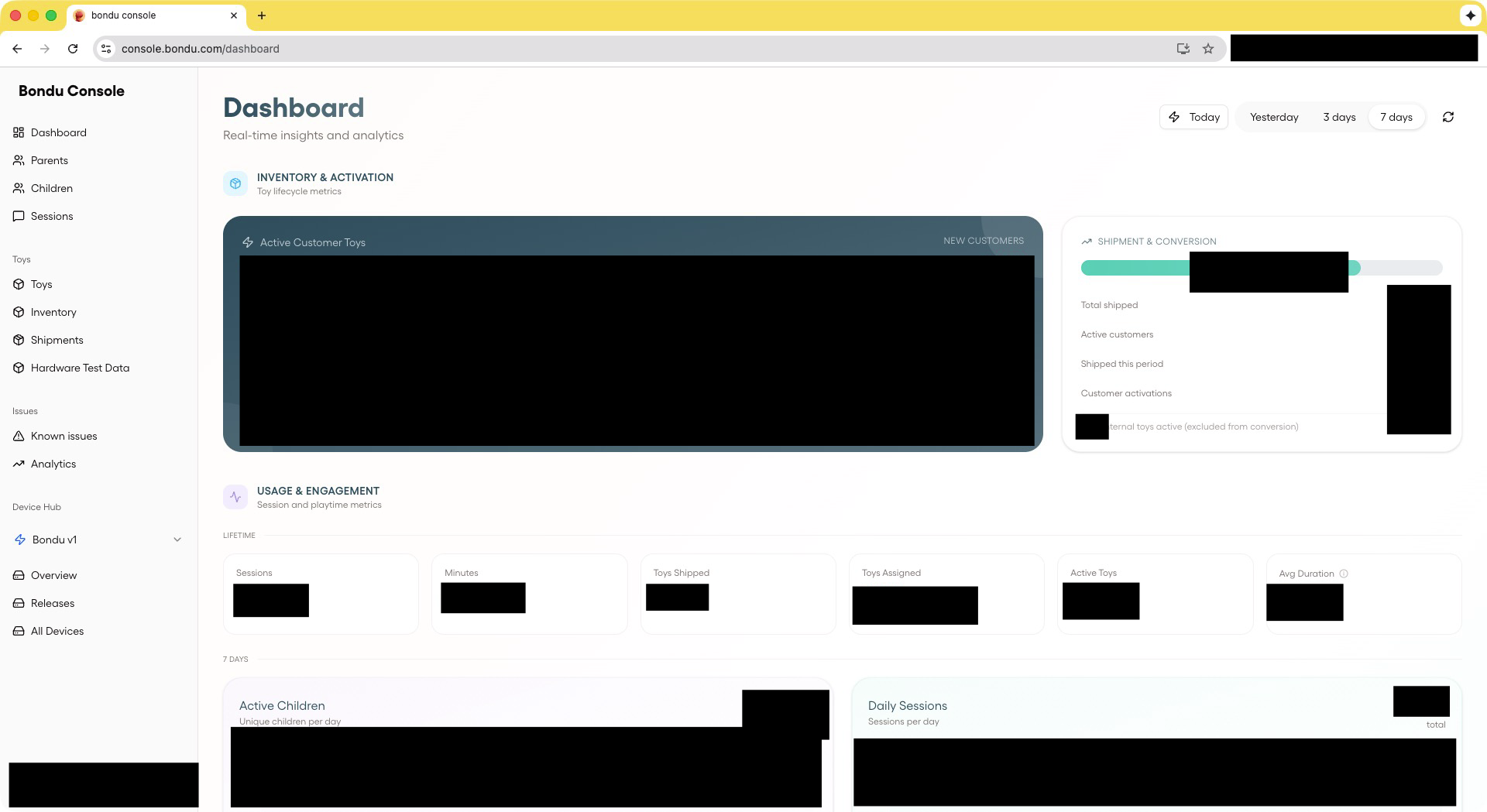

About 30 minutes in, he spotted something interesting in the Content Security Policy headers: a domain that piqued his interest (console.bondu.com). He navigated to it and was met with a button that simply said: “Login with Google”. By itself, there’s nothing weird about that, as it was probably just a parent portal. But upon logging in, he found this wasn’t a parent portal; it was the Bondu core admin panel. We had just logged into their admin dashboard despite not having any special accounts or affiliations with Bondu.
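For anyone curious what that recon step can look like, here is a minimal sketch of pulling hostnames out of a Content Security Policy header value. The function name and the sample header are hypothetical (the sample uses placeholder example.com domains, not Bondu's real header), and this is not necessarily how Joel did it; reading the header by eye works just as well.

```python
def hosts_from_csp(header):
    """Collect host-like sources from a Content-Security-Policy header value."""
    hosts = set()
    for directive in header.split(";"):
        parts = directive.split()
        # parts[0] is the directive name (e.g. "connect-src"); the rest are sources.
        for source in parts[1:]:
            if source.startswith("'"):
                continue  # keywords such as 'self' or 'none', nonces, hashes
            if source.endswith(":"):
                continue  # scheme-only sources such as https: or data:
            if "://" in source:
                source = source.split("://", 1)[1]  # drop the scheme
            host = source.split("/", 1)[0]  # drop any path
            host = host.split(":", 1)[0]    # drop any port
            if host and host != "*":
                hosts.add(host)
    return hosts


# Hypothetical header for illustration only.
sample_header = (
    "default-src 'self'; "
    "connect-src https://api.example.com https://console.example.com:443/path "
    "wss://*.example.net data:; "
    "img-src *"
)
found_hosts = hosts_from_csp(sample_header)
```

Any host that shows up in a policy but is not part of the public site is worth a closer look, since the policy has to list every origin the app talks to.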

As soon as Joel made this discovery, he messaged me on Discord, and I confirmed that I was able to log in with my own Google account as well.
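The underlying problem is that signing in with Google only proves who you are (authentication); it says nothing about whether you should be allowed in (authorization). We don't know how Bondu's console was built or exactly how they fixed it, so the following is only a sketch of the general idea. It assumes the ID token's signature has already been verified elsewhere, and the domain, email list, and function name are hypothetical. The `email`, `email_verified`, and `hd` (hosted domain) claims are standard fields in Google ID tokens.

```python
# Hypothetical values for illustration; a real deployment would load these from config.
ADMIN_DOMAIN = "example.com"
ADMIN_EMAILS = frozenset({"alice@example.com"})


def is_authorized_admin(claims):
    """Decide whether an already-verified Google ID token belongs to an admin.

    `claims` is the decoded token payload. Signature, audience, and expiry
    checks are assumed to have happened before this function is called.
    """
    if claims.get("email_verified") is not True:
        return False
    # Reject personal Google accounts and other Workspace domains.
    if claims.get("hd") != ADMIN_DOMAIN:
        return False
    # Even inside the company domain, only explicitly listed users are admins.
    email = str(claims.get("email", "")).lower()
    return email in ADMIN_EMAILS
```

With a check like this, a random Gmail account gets a valid Google login but is still turned away at the door, which is the step that appeared to be missing here.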

The Admin Panel

After logging in, we started to do some digging to truly understand the impact of having access to this.

In the end, we discovered that we had full access to:

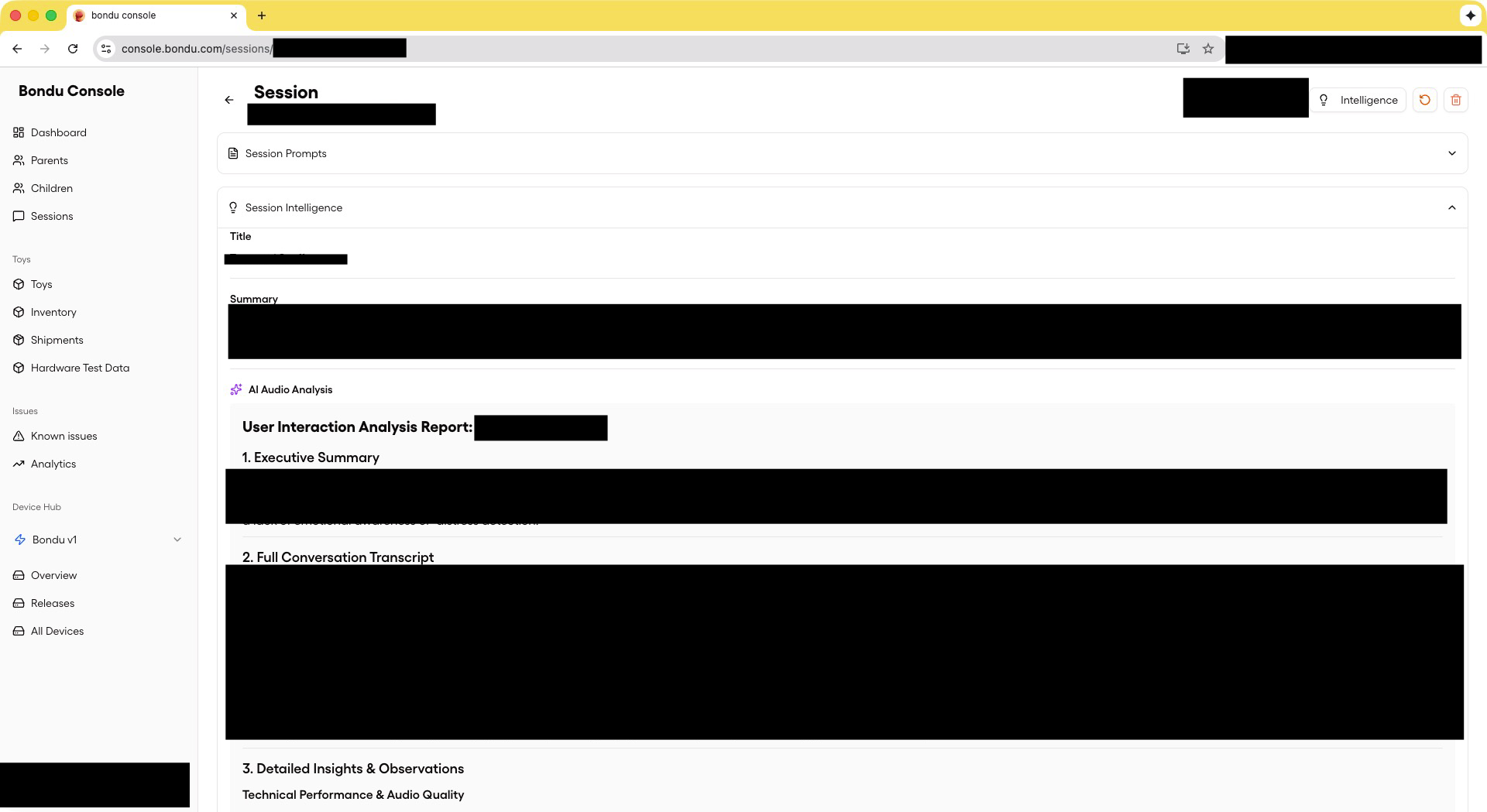

- Every conversation transcript that any child has had with the toy (tens of thousands of sessions)

- Information about the children and their family. This included things such as:

  - Child’s name and birth date

  - Family member names

  - Child’s likes and dislikes

  - Objectives for the child (as defined by the parent)

  - The name given to the toy by the child

  - Previous conversations between the child and the toy (to give the LLM additional context)

- Device information (such as location via IP address, battery level, awake status, etc.)

- The ability to update device firmware and reboot devices

We noticed the application used OpenAI’s GPT-5 and Google’s Gemini. One way or another, the toy gets fed a prompt from the backend that contains the child profile information and previous conversations as context. As far as we can tell, the data that is being collected is actually disclosed within their privacy policy, but I doubt most people realize this unless they go and read it (which most people don’t do nowadays).

Beyond the authentication bypass, we also discovered an IDOR vulnerability in their API that allowed us to retrieve any child’s profile data by simply guessing their ID.
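For readers unfamiliar with the term, IDOR (insecure direct object reference) means the API hands back whatever object ID you ask for without checking that it belongs to you. Here is a minimal sketch of the missing check, using an in-memory dictionary in place of a database. All names and records below are hypothetical and are not taken from Bondu's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChildProfile:
    profile_id: str
    parent_user_id: str
    name: str


class Forbidden(Exception):
    """Raised when the caller may not see the requested profile."""


# Hypothetical stand-in for a database table.
PROFILES = {
    "101": ChildProfile("101", parent_user_id="parent-a", name="Sam"),
    "102": ChildProfile("102", parent_user_id="parent-b", name="Alex"),
}


def get_child_profile(requesting_user_id, profile_id):
    """Return a profile only if it belongs to the authenticated caller."""
    profile = PROFILES.get(profile_id)
    # Same error for "missing" and "not yours", so IDs cannot be enumerated.
    if profile is None or profile.parent_user_id != requesting_user_id:
        raise Forbidden("profile not found or not accessible")
    return profile
```

Random, unguessable IDs make enumeration harder, but they are only defense in depth. The ownership check on every request is the actual fix.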

This was all available to anyone with a Google account. Naturally, we didn’t access or store any data beyond what was required to validate the vulnerability in order to responsibly disclose it.

Their Response

We reached out to Bondu immediately with detailed proof and evidence, and ultimately Joel had to contact their CEO via LinkedIn to get the issue raised over the weekend. They took the console down within 10 minutes of receiving the emailed report.

Overall we were happy to see how the Bondu team reacted to this report; they took the issue seriously, addressed our findings promptly, and had a good collaborative response with us as security researchers.

Their initial remediation of the admin console took only 10 minutes, and they immediately followed up with their own internal investigations, both into the console access logs (there was no unauthorized access except for our research activity), as well as auditing their API for other access control issues similar to the ones in our initially reported findings. Their lead engineer stayed up until 6am working through fixes, and they mentioned finding a few other row-level security issues in addition to the ones we had found.

They had also made some great architectural decisions: audio recordings are stored in a storage bucket and auto-deleted after a set period, and there’s no way to “tap” into a microphone or change output during a live session.

Timeline

- January 9, 2025: Initial interest

- January 10, 2025: Joel starts looking and finds the exposed console

- January 10, 2025 4:43pm EST: Joel reaches out to the Bondu support team via email

- January 10, 2025 5:46pm EST: Joel reaches out to the Bondu CEO, Fateen, on LinkedIn

- January 10, 2025 6:44pm EST: Joel emails the vulnerability report to Fateen

- January 10, 2025 6:54pm EST: The admin console is taken offline

- January 11, 2025: The console auth, IDOR, and other vulnerabilities are fixed

The Bondu team has been great to work with throughout this whole process, and it’s clear that they take security seriously. We had multiple calls with their team to help them understand how we found this and what steps they can take to help strengthen their infrastructure as a whole. Additionally, after the conversations we had with them, they are now in the process of creating a Bug Bounty Program to promote additional future external security research.

Industry Thoughts

To be honest, Bondu was totally something I would have been inclined to buy for my kids before this finding. However, this vulnerability shifted my stance on smart toys, and even smart devices in general, for two reasons.

AI models are effectively curated, bottled-up access to all the information on the internet. And the internet can be a scary place. I’m not sure handing that type of access to our kids is a good idea.

Also, having done bug bounty for 5-6 years, it’s clear to me there are vulnerabilities in nearly everything. Having an internet-connected device with a microphone in the house means that, at the very least, the administrators of the company that made the device have access to that data. At worst, it means anyone with a Gmail account has access. In the case of Bondu, their whole team shares customer support work, so they all had access. Customer support is a notoriously good attack vector for hackers. There have been countless stories of cell-phone provider support agents being socially engineered (or paid off) to do “SIM swapping” attacks. Providing access to data and backend features in an effort to support customers and end-users is a huge security risk.

AI makes this problem even more interesting because the designer (or just the AI model itself) can have actual “control” of something in your house. And I think that is even more terrifying than anything else that has existed yet.

This story was also covered by Wired.

– Joseph
