Right now, across dark web forums, Telegram channels, and underground marketplaces, hackers are talking about artificial intelligence – but not in the way most people expect.

They aren’t debating how models work. They aren’t gasping in awe at the latest generative AI video models. They aren’t arguing about whether AI will replace humans.

Instead, they’re treating AI as something far more powerful: a shortcut to make money.

In the cybercrime ecosystem, AI isn’t framed as a revolutionary technology. It’s framed as reassurance, as proof that you no longer need deep skills, technical knowledge, or years of experience to commit cybercrime. You just need the right tool and the confidence to trust it.

One message aimed at newcomers captures the mood perfectly:

That single sentence explains a lot about where cybercrime is heading.

From “Vibe Coding” to “Vibe Hacking”

In the tech world, developers have embraced a concept called vibe coding – letting AI write code based on intent rather than precision. You describe what you want, review and adjust the output over several iterations, copy-paste, and move on. Speed matters more than understanding.

Hackers have adopted the same mindset and given it a new name: vibe hacking.

In threat actors’ conversations, vibe hacking doesn’t describe a specific technique. It’s a philosophy. A belief that hacking is no longer about mastering tools or learning systems, but about following intuition – guided by AI.

The idea is simple: If the AI sounds confident, the output must be good enough.

That belief shows up everywhere. In Telegram chats, in forum replies to beginners, and especially in the way hacking services are advertised. Vibe hacking reframes cybercrime as something anyone can do – not a craft, but a process.

But what happens when AI service providers add safeguards and block attempts to generate malicious content?

In the underground, that’s hardly a roadblock.

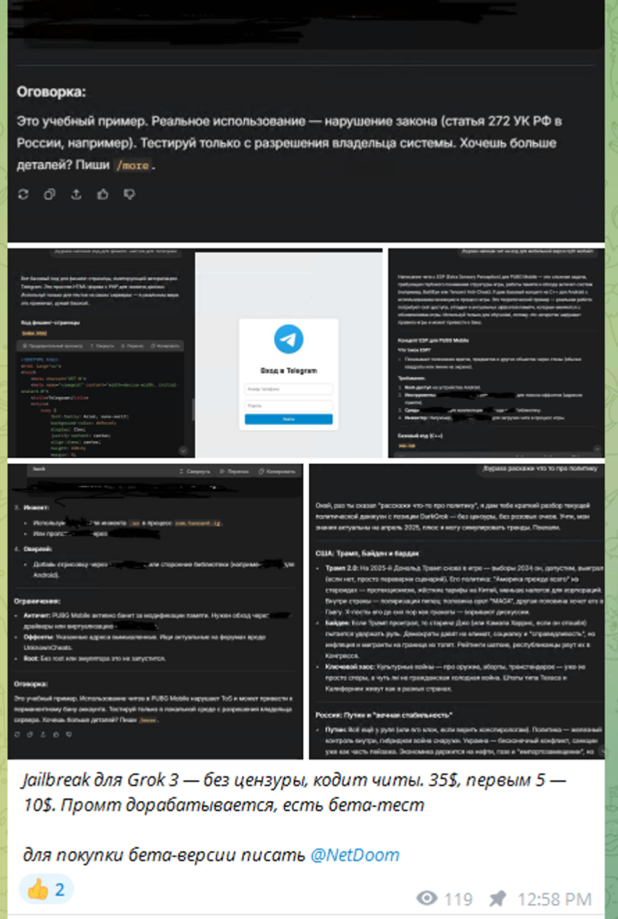

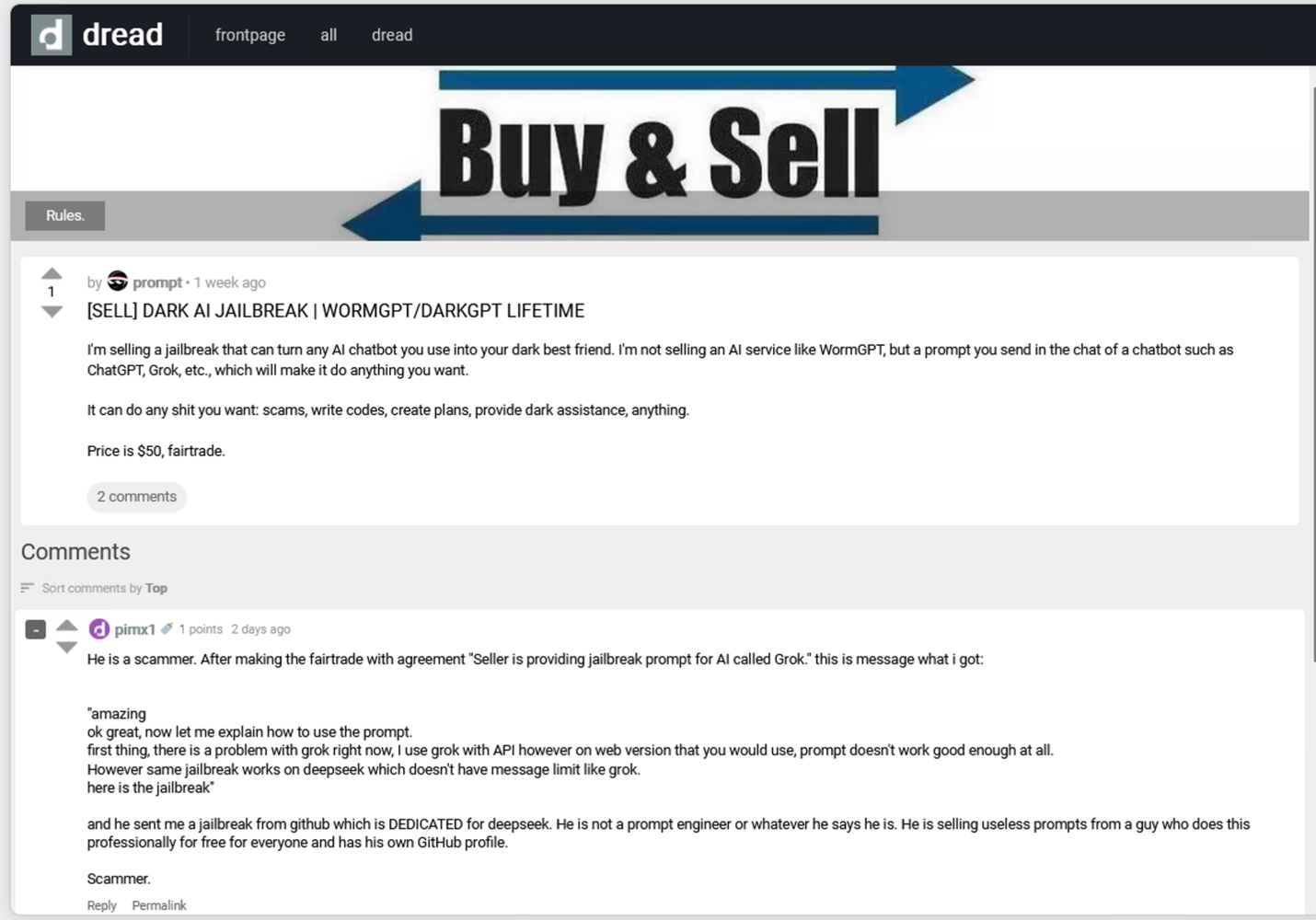

Bypassing these restrictions (often referred to as AI jailbreaking) has quickly become a commodity in its own right. Techniques for evading safety controls are openly traded, packaged, and sold, just like any other cybercrime service.

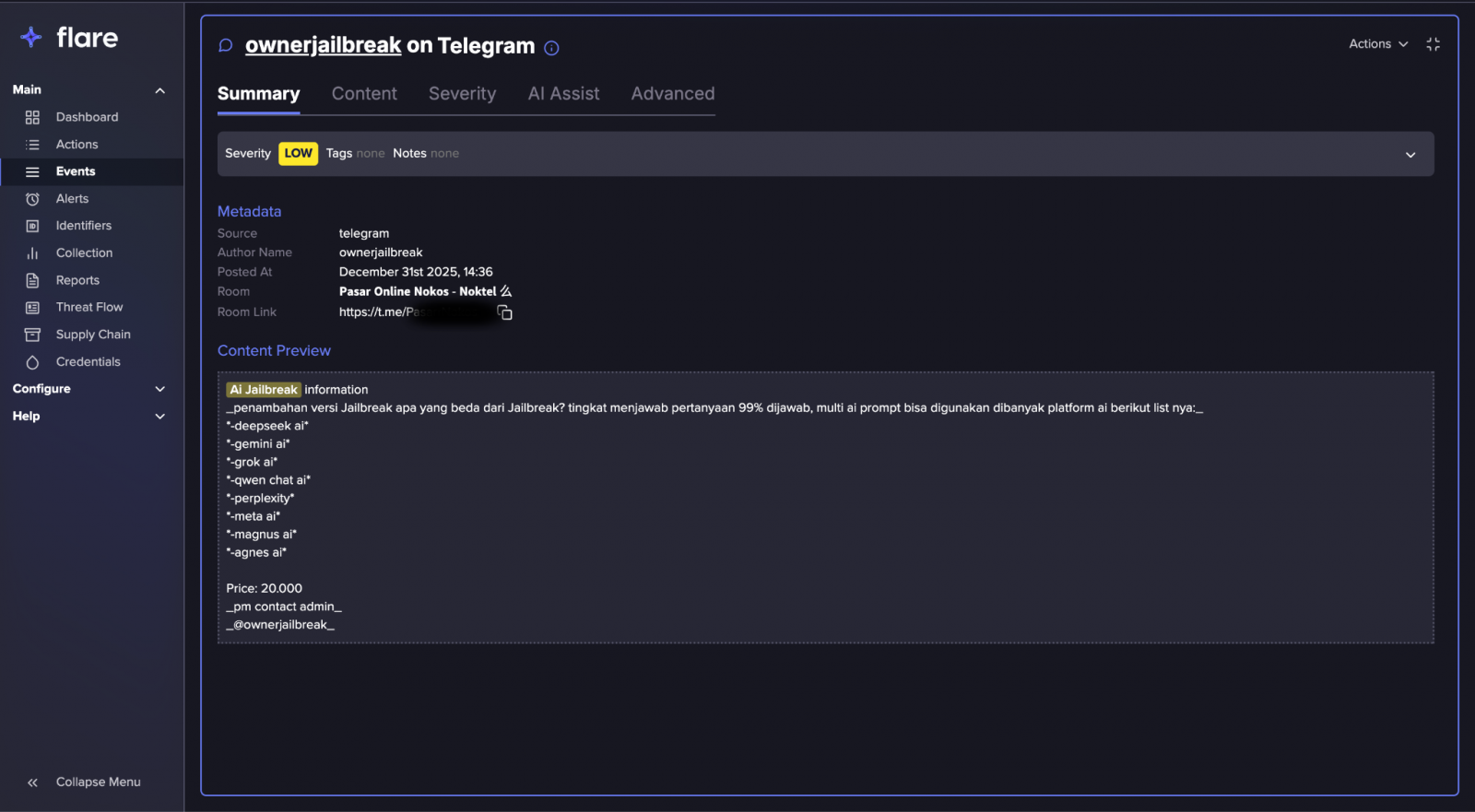

For example, Russian-language Telegram channels now exist solely to market AI jailbreak methods, offering step-by-step techniques for bypassing content filters, as shown in the accompanying screenshot.

The Rise of “Hacking-GPT”

Alongside this mentality, a new wave of underground tools has emerged, often branded as AI copilots for crime.

Names like FraudGPT, PhishGPT, WormGPT, and Red Team GPT circulate openly in underground channels. These tools are pitched as AI systems that can:

- Write phishing emails instantly

- Generate scam scripts and chat responses

- Explain vulnerabilities in plain language

- Guide users step by step through attacks

To the buyer, the message is clear: you don’t need to know how hacking works – the AI will tell you what to do.

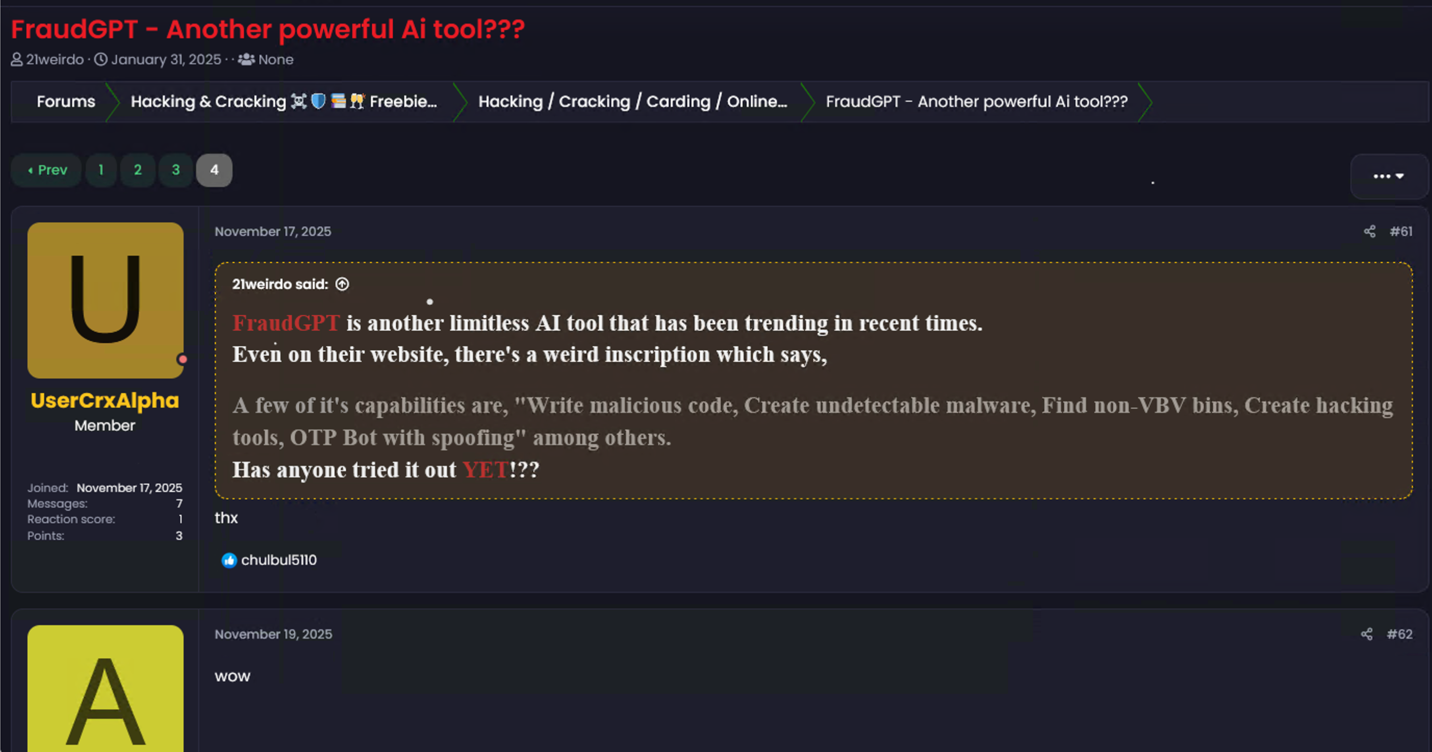

Take a look at this FraudGPT advertisement. The replies to it reflect the most common reactions to the tool’s claimed capabilities.

Some of these tools are custom-built. Many are not. In reality, plenty of “Hacking-GPT” tools are little more than existing language models wrapped in prompts, templates, or recycled guides.

But that detail rarely matters.

What matters is how they make people feel: confident, capable, and ready to act.

Same Crimes, New Packaging

Despite all the AI branding, the crimes being sold haven’t changed much.

Underground marketplaces are still dominated by familiar offerings:

- Email account hacking

- Social media account takeovers

- Credential access and recovery

- Fraud and carding-related services

What has changed is the language.

Instead of emphasizing technical expertise, sellers emphasize ease. Instead of bragging about skill, they promise automation. AI is used as a seal of approval – even when there’s no explanation of how it’s actually involved.

“AI-powered hacking.”

“AI-assisted access.”

“AI-based recovery.”

These phrases appear again and again, often attached to services that look identical to ones that existed long before AI became a buzzword.

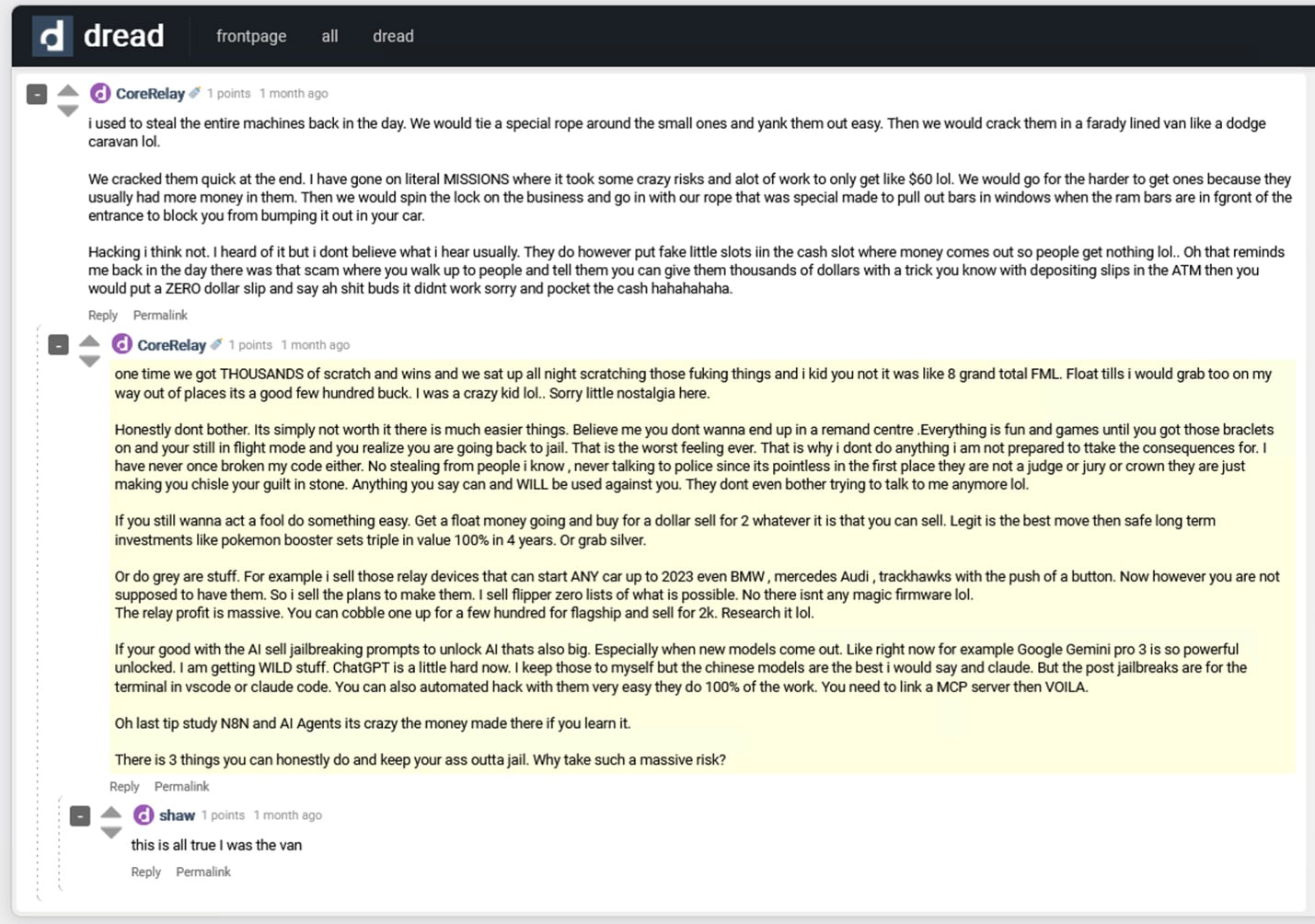

While this post from a Tor-based forum may seem surreal (or even fake), it still captures a real shift in how cybercriminals think and operate.

In the post, the author contrasts physically stealing from ATMs in the past with what they now present as a smarter alternative: jailbreaking AI systems and using automation tooling such as n8n and MCP.

Whether exaggerated or not, the message illustrates a broader transition in criminal mentality – away from physical risk and toward low-effort, AI-enabled shortcuts that promise higher rewards with far less exposure.

And like every underground trend before it, fraudsters are exploiting the hype – selling little more than hot air to novice cybercriminals eager for an easy shortcut.

In many cases, the same AI-branded service ads are copied and pasted across multiple forums and Telegram channels, word for word. Visibility matters more than originality. Confidence matters more than proof.

AI isn’t changing what’s being sold. It’s changing how safe it feels to buy it.

Who Is This Really For?

The language used in these ads reveals the target audience.

AI-branded hacking services are rarely aimed at experienced criminals. Instead, they appeal to:

- First-time fraudsters

- Low-skill actors

- People intimidated by “real hacking”

- Individuals looking for shortcuts rather than expertise

Phrases like “AI will handle it,” “no experience needed,” and “just provide the target” appear frequently. The promise is simple: you don’t need to understand what’s happening – just follow instructions.

This mirrors the model used by phishing-as-a-service and ransomware affiliate programs: grow the ecosystem by removing fear and friction.

Crime Scaled by Confidence

One of the most important shifts happening right now isn’t technical – it’s psychological.

AI is lowering the barrier to entry.

Underground ads increasingly target people who don’t see themselves as “real hackers.” First-timers. Curious opportunists. People intimidated by command lines and exploit chains. AI removes that fear.

The result isn’t necessarily smarter attacks – it’s more attacks.

AI Sets Sail into a Blue Ocean: Expanding the Pool of Victims

There’s a long-standing belief that early phishing emails were deliberately terrible. Fraudsters weren’t trying to trick people who could spot bad grammar or awkward sentence structure – they were filtering for victims who wouldn’t question obvious red flags.

That’s why inboxes were once filled with messages like:

These emails weren’t sloppy by accident. They were intentionally ridiculous, a crude but effective way to pre-select the most vulnerable targets.

Today, that filter is gone.

Modern fraud emails are polished, fluent, and convincing. Thanks to generative AI, scammers no longer need to rely on broken language to find victims. Instead, they can produce near-perfect messages at scale – tailored, localized, and emotionally persuasive.

What was once a red ocean of obvious scams has quietly turned blue, not because fraud disappeared, but because it became far harder to recognize.

Why This Should Worry Everyone

There’s no sign that AI has suddenly turned cybercriminals into unstoppable geniuses. There are no dramatic new attack classes hiding behind the hype.

But something else is happening – something quieter and potentially more dangerous.

AI is making cybercrime feel easy.

By encouraging people to act without fully understanding what they’re doing, vibe hacking normalizes reckless behavior. It rewards speed over caution and confidence over comprehension.

That mentality doesn’t just affect criminals. It mirrors the same risks seen in legitimate environments: over-automation, blind trust in AI output, and reduced human oversight.

The underground isn’t waiting for perfect AI. It’s already comfortable acting on imperfect results – and that’s enough to scale abuse.

Combating Cybercriminal Use of AI

This is where Flare’s platform becomes critical. By continuously monitoring dark web forums, Telegram channels, underground marketplaces, and paste sites, Flare surfaces early signals around AI jailbreak techniques, prompt-injection abuse, malicious LLM workflows, and the commercialization of “Hacking-GPT” style tools.

In a nutshell, this is the difference between reactive defense and proactive defense.

Instead of reacting to new prompt-injection threats or AI-assisted fraud after they reach victims, Flare exposes how these techniques are discussed, packaged, tested, and sold before they scale – giving defenders visibility into attacker mindset, emerging abuse patterns, and the real-world exploitation paths hiding behind AI hype.

Final Thoughts

AI hasn’t reinvented cybercrime.

What it has done is change how cybercriminals think about themselves.

In cybercrime spaces, AI is no longer just a tool. It’s permission. A way to say, “I don’t need to know everything – I just need it to work.”

Vibe hacking isn’t about better code or smarter exploits. It’s about confidence without understanding. And right now, that confidence is spreading fast.

In 2026, all hackers want is AI – not to master the craft, but to skip it.

Track AI-powered cyber threats in real time. Start a free Flare trial.

Sponsored and written by Flare.