Nx malware uses AI CLIs to find secrets, ESET discovers malware sample leveraging OpenAI’s OSS model, binary exploitation CTF for Phrack’s 40th

I hope you’ve been doing well!

Touching Grass

It’s late, so I will just share this meme. Thoughtful intro next time!

What happens when 100 analysts team up with AI to sift through 3.6PB of threat data? You get a clear picture of the rapidly evolving cyber threat landscape—and a glimpse at what’s coming next.

Cyber Threat Evolution: A midyear pulse check on infostealers, ransomware, vulnerabilities, and breach trends.

Intelligence Gaps: Why relying on public sources alone leaves teams exposed—and how to close those gaps fast.

Smarter Prioritization: Cut through the noise and focus on the threats that actually matter.

Holy moly, 3.6PB is a lot of threat data

AppSec

I love the historical narrative that puts everything in context, super cool. One neat trend that stuck out to me is the gradual shift from artisanally finding deserialization chains by hand to GitHub Security Lab’s Peter Stöckli using program analysis (CodeQL).

Eugene walks through root cause analysis of the original vulnerability, then demonstrates writing Semgrep rules to detect similar patterns elsewhere in the codebase, successfully identifying several real variants that later became CVEs. He recommends an iterative rule writing process, starting with specific patterns and then gradually generalizing to catch more variants while balancing false positives/negatives.

Completely unrelatedly, the name “Eugene” makes me think of this song from the musical Eugenius. Don’t @ me, I’m writing this at midnight.

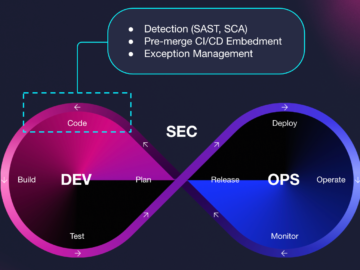

AI-driven development is accelerating insecure code into production—and security teams can’t keep up. While shift-left aims to catch issues earlier, it often fails to prevent them from reaching production without slowing development.

Cortex Cloud breaks the cycle with a prevention-first approach powered by unified data from code to cloud to SOC. By correlating findings from AppSec scanners with cloud and runtime context, teams can stop chasing risks and start preventing them.

Correlating findings from code to cloud to SOC is tough, but great for prioritization

Cloud Security

High-Profile Cloud Privesc

Reversec’s Leonidas Tsaousis describes how if you have “OneDrive Admin”-equivalent permissions on a cloud-native estate you can escalate to a Privileged Entra role by backdooring the administrator’s PowerShell Profile.

I don’t know how AWS actually implements these Trusted Advisor S3 checks, but based on the permission names, it rhymes in my head with recent anti-EDR techniques: by setting permissions that block Trusted Advisor from gathering the info it needs to determine if a bucket is public, you can fly under the radar.

I’m not sure if it actually works like this, but I think applying the same mindset/approach across domains (e.g. anti-EDR → anti-cloud detection) is interesting.

Using AWS Certificate Manager as a covert exfiltration mechanism

Costas Kourmpoglou describes how AWS Certificate Manager can be used as a covert data exfiltration channel by exploiting its certificate import functionality to store arbitrary data in X.509 certificate extensions. By embedding up to 2MB of base64-encoded data in the nsComment extension of imported certificate chains, attackers with AWS credentials could exfiltrate approximately 7.5GB of data across 5,000 certificates annually.

The technique works because ACM lacks VPC endpoints, making it vulnerable to Bring Your Own Credentials attacks. CloudTrail logs also truncate large request parameters, which makes detection difficult without TLS-breaking proxies (an egress filter would need to unpack each imported certificate and scan it). GitHub PoC.
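A quick sanity check on the 7.5GB figure above. This is a back-of-the-envelope sketch, assuming decimal units and that the 2MB limit applies to the base64-encoded payload, so only three quarters of it is raw data. The function name is mine, not something from the post or the PoC.

```python
# Rough capacity check for the numbers quoted above.
# Assumptions (not confirmed by the post): "MB"/"GB" are decimal units, and
# the 2MB per-certificate limit applies to the base64-encoded payload.

MB = 10**6
GB = 10**9


def raw_capacity_bytes(num_certs: int, encoded_bytes_per_cert: int) -> int:
    """Raw (pre-base64) bytes that fit across num_certs certificates."""
    # base64 turns every 3 raw bytes into 4 encoded characters,
    # so only 3/4 of the encoded size is actual data.
    return num_certs * encoded_bytes_per_cert * 3 // 4


capacity = raw_capacity_bytes(num_certs=5_000, encoded_bytes_per_cert=2 * MB)
print(f"{capacity / GB:.1f} GB")  # prints "7.5 GB"
```

So the headline number is just 5,000 certificates × 2MB × 3/4, which lines up with the "approximately 7.5GB" in the write-up.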

Supply Chain

The unique and neat part here is how the malware attempts to use locally installed AI assistant CLIs (Claude Code, Gemini, Q) to recursively scan the filesystem to discover sensitive file paths (e.g. crypto wallets, env, keys).

Every gem upload is analyzed using both static and dynamic code analysis, including behavioral checks and metadata review, much of it coming from Mend’s supply chain security tooling, originally built by RubyGems maintainer Maciej Mensfeld.

A RubyGems security engineer reviews flagged gems; about 95% of flagged packages prove to be legitimate (high FP rate).

“On average, we remove about one malicious or spam package per week, though that number can spike higher.”

Preventing Domain Resurrection Attacks

Mike Fiedler describes how PyPI now checks for expired domains to prevent domain resurrection attacks, a type of supply-chain attack where someone buys an expired domain and uses it to take over PyPI accounts through password resets.

PyPI checks daily using Fastly’s Domainr Status API to determine if a domain’s registration has expired. If so, it un-verifies the previously verified email address and will not issue a password reset to addresses that have become unverified.
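To make the flow above concrete, here is a minimal sketch of the un-verify logic. It is not PyPI’s actual code: `EmailRecord`, `unverify_expired`, and `can_send_password_reset` are hypothetical names, and the domain lookup is a stubbed callable rather than a real call to the Domainr Status API.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class EmailRecord:
    """Hypothetical stand-in for a user's email entry."""
    address: str
    verified: bool = True


def unverify_expired(
    emails: Iterable[EmailRecord],
    domain_is_expired: Callable[[str], bool],
) -> List[str]:
    """Un-verify addresses whose domain has expired; return the affected addresses."""
    affected = []
    for record in emails:
        domain = record.address.rsplit("@", 1)[-1].lower()
        if record.verified and domain_is_expired(domain):
            record.verified = False
            affected.append(record.address)
    return affected


def can_send_password_reset(record: EmailRecord) -> bool:
    """Password resets only go to addresses that are still verified."""
    return record.verified


# Stubbed lookup: in a real daily job this would query a domain status service.
expired_domains = {"lapsed.example"}
emails = [EmailRecord("alice@active.example"), EmailRecord("bob@lapsed.example")]

changed = unverify_expired(emails, lambda d: d in expired_domains)
print(changed)
print([can_send_password_reset(e) for e in emails])
```

Passing the lookup in as a callable keeps the sketch independent of any particular status provider, and makes the attack path clear: once the address is un-verified, whoever re-registers the domain can’t use a password reset to take over the account.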

EXPOSED! Thousands of public software packages expose sensitive data

If you’ve been reading tl;dr sec for a bit, you won’t be surprised about API keys and credentials leaking in NPM packages. But you might be dismayed to hear that a number of packages are leaking customer PII, such as passport photos and passport numbers. When Paul McCarty discovered this and other cases, GitHub and NPM were like, “Cool story bro” and didn’t remove the sensitive info or package (still live).

“The reality is that GitHub doesn’t scan repos for sensitive data out of the goodness of their heart. Instead, they do it and they charge you. They see this function, not as a security outcome they should provide, but instead as a way to make more money.”

See also revelio by Luke Marshall, a containerized tool that automatically downloads, extracts, and scans packages from PyPI, npm, and crates.io using TruffleHog.

Blue Team

seifreed/yaraast

By Marc Rivero: A Python library and CLI tool for parsing, analyzing, and manipulating YARA rules through Abstract Syntax Tree (AST) representation.

Detecting ClickFixing with detections.ai — Community Sourced Detections

@mikecybersec walks through using detections.ai, a community-centric platform for sharing detection content, to identify ClickFixing attacks that use fake CAPTCHA challenges to trick users into running malicious PowerShell via the Windows Run dialog. The post walks through the platform’s search functionality to find detection rules for various security tools (KQL, Sigma, SentinelOne, Splunk SPL), and highlights features like built-in AI translation between rule languages, community collaboration tools, and links from detections to threat intel articles.

Using Auth0 Event Logs for Proactive Threat Detection

Okta’s Maria Vasilevskaya announces the Auth0 Customer Detection Catalog, an open-source GitHub repo of detection rules designed to identify threats in Auth0 environments. The catalog includes pre-built queries for detecting suspicious activities like anomalous user behavior, account takeovers, and misconfigurations, such as tenant setting changes (e.g. an IP being added to an allowlist or the deactivation of attack protection features), administrator actions (e.g. copying of the most powerful tokens and checking applications’ secrets), and attack patterns such as SMS bombarding attempts.

Red Team

0xJs/BlockEDRTraffic

By Jony Schats: Two tools written in C that block network traffic for denylisted EDR processes, using either Windows Defender Firewall (WDF) or Windows Filtering Platform (WFP).

chompie1337/PhrackCTF

A binary exploitation CTF challenge created by Valentina Palmiotti (chompie) for Phrack’s 40th anniversary, featuring both Linux userland remote code execution and Windows kernel local privilege escalation challenges. The repo includes source code, binaries, and example solutions for both challenges.

AI + Security

ESET discovers AI-powered ransomware PromptLock

ESET’s Anton Cherepanov describes discovering an AI-powered ransomware sample that leverages OpenAI’s gpt-oss:20b model locally via the Ollama API to dynamically generate malicious Lua scripts, which are then executed in real time. PromptLock can currently exfiltrate and encrypt files; it also has a file destruction capability, but that is not fully implemented yet. This appears to be an early proof-of-concept. See this thread for more details.

Apparently Michael Bargury, the CTO at Zenity, found this a year ago and disclosed it (BlackHat USA 2024 video), but Microsoft didn’t fix it. Microsoft did not issue a CVE for this vulnerability, and MSRC said they had no plans to disclose this to customers.

Phishing Emails Are Now Aimed at Users and AI Defenses

Anurag Gawande discovered a hidden prompt injection in the plain text MIME section of a phishing email. So the visible part of the email is standard phishing and social engineering (e.g. update your Gmail password), while simultaneously including a prompt injection payload to distract any AI-driven security analysis.

Interestingly, the prompt isn’t about tricking the AI to classify the email as safe/secure, but rather asking the model to think deeply and perform a lot of spurious analysis, with the intent that the diversion causes the AI to lose focus.
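The plain text vs. HTML split described above is easy to inspect with Python’s standard `email` package. This is a minimal sketch on a made-up sample message: the subject, body text, and "hidden" line are all hypothetical placeholders, not the payload from the post, and the line-by-line comparison is a crude heuristic, not a real detection.

```python
from email import message_from_bytes, policy
from email.message import EmailMessage


def split_alternatives(raw: bytes) -> dict:
    """Return the text/plain and text/html bodies of a raw email, keyed by content type."""
    msg = message_from_bytes(raw, policy=policy.default)
    parts = {}
    for part in msg.walk():
        ctype = part.get_content_type()
        if ctype in ("text/plain", "text/html"):
            parts[ctype] = part.get_content()
    return parts


# Hypothetical sample: the HTML part is what most mail clients render,
# while the plain text part carries an extra line aimed at automated analysis.
sample = EmailMessage()
sample["Subject"] = "Password expiry notice"
sample.set_content(
    "Update your password.\n\nHYPOTHETICAL HIDDEN INSTRUCTIONS FOR AI ANALYZERS"
)
sample.add_alternative(
    "<html><body><p>Update your password.</p></body></html>", subtype="html"
)

parts = split_alternatives(sample.as_bytes())

# Crude check: non-empty plain text lines that never show up in the HTML part.
plain_only = [
    line.strip()
    for line in parts["text/plain"].splitlines()
    if line.strip() and line.strip() not in parts["text/html"]
]
print(plain_only)
```

The takeaway is that anything feeding emails to an LLM should be deliberate about which MIME part it passes along, since the part a human sees and the part a parser picks up can differ.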

All You Need Is MCP – LLMs Solving a DEF CON CTF Finals Challenge

Shellphish’s Wil Gibbs describes how he used IDA MCP and GPT-5 to solve the DEF CON CTF Finals challenge “ico” with minimal human interaction. GPT-5 reverse-engineered the “ico” binary, discovered that it stored the flag as an MD5 hash in metadata, and found that it could be exploited by setting a Comment path to “/flag” to read the plaintext flag directly.

GPT-5 didn’t do this autonomously; it required a back and forth human-in-the-loop iterative process of gathering knowledge from IDA → formulating hypotheses → creating exploit scripts → analyzing outputs → and then applying the new findings to IDA.

Note: this approach only worked on “ico” and one Live CTF challenge (didn’t lead to anything meaningful on other CTF challenges), but still, solving a DEF CON CTF final challenge is not easy.

Misc

Good Work – Is ChatGPT therapy a horrible idea?

Bill Burr on his “Sad Men” bit in his new special – “Anger is just pain. It’s just hurt.” “Learning new things fascinates me / it’s a way to not deal with my BS. ‘Wow Bill, you have so many interests.’ No, just sad. If I’m moving, it doesn’t catch up with me.”

Mark Manson – Why you feel so lost

Claire Vo – “GUESS WHAT most people that you work for, work with you, work for you, invest in you, compete with you, validate you, like your little posts …they won’t be at your funeral. Or your mom’s. Or your kid’s graduation. Or your most lost moment. Optimize for those who will.”

Molly Cantillon – “my youngest ambitious friends suffer from something I can only describe as the expendability curse… ambition is the first narcotic. you chase the next title, the next gold star… perhaps the only antidote is this: to stop trying to be un-expendable to someone else, start building a life where your value isn’t contingent on a system that pawns you for your hungrier cheaper replica.”

Wrapping Up

Have questions, comments, or feedback? Just reply directly, I’d love to hear from you.

If you find this newsletter useful and know other people who would too, I’d really appreciate if you’d forward it to them.