by Matt Cloke, CTO at Endava

Generative AI is poised to unleash the next wave of productivity, transform roles and boost performance across functions such as sales and marketing, customer operations and software development. According to a recent report by McKinsey, it could unlock trillions of dollars in value across sectors from banking to life sciences.

By adopting generative AI platforms or large language models (LLMs, the technology underpinning many of these advanced tools), businesses can build a new and improved range of capabilities that boosts performance and frees up employees’ time for more meaningful work. These tools provide air cover for cognitive tasks such as organizing and editing, summarizing and answering questions, drafting content and more.

However, despite the hype around generative AI, there is still much to be learned and refined about the technology, from its inherent shortcomings and legal vulnerabilities to regulatory challenges. While these issues warrant thoughtful consideration, they should by no means push business leaders into a “wait-and-see” approach. Businesses considering generative AI should proceed with caution, but the key to success lies in due diligence and in weighing the risks against the rewards that generative AI has to offer.

Understanding how to kick off with generative AI

Generative AI and large language models are powerful engines for productivity, but they are far from infallible. What they are trained to do is simple: produce a plausible-sounding answer to a user prompt. However, it is not always clear whether the answers are true, because the models are trained on diverse data sets that may themselves be incorrect or incomplete. This phenomenon, known as AI “hallucination,” is problematic because inaccurate information is presented with the same confidence as verified facts.

While AI hallucinations are something of a mystery, they are often a consequence of building general-purpose AI models on the open internet. For example, most generative AI models (like ChatGPT) are trained on massive stores of information scraped from sources like Facebook, Reddit and Twitter. The unreliable nature of this data exposes users to risks such as misinformation, intellectual property and copyright violations, privacy breaches and bias. Another contributor is that most generative AI models are chained together. Although chaining delivers certain benefits, like improved performance and flexibility in AI prompting, it can also propagate biases and incorrect information from one step to the next.

So, how can businesses interested in generative AI safeguard against these issues? It’s safe to assume that general-purpose AI models are here to stay, so let’s look at how to minimize the risks of implementing them. One method is to “quantize” implementation, a term used here to mean restricting the model’s training to a prescribed set of values drawn from customized or internal data sets. Doing so creates a targeted large language model trained on a company’s own data, which makes its responses more precise and narrows the margin of error and inaccuracy. This mode of training is becoming more prevalent across industries, and early adopters like Walmart are seeing success with this approach via their “My Assistant” feature.

Other tools in development report the attribution of a fact and the probability that it is true, letting users request only the answers with the highest degree of confidence. This is similar to asking a person a question: they may narrow the answer they give based on the level of certainty they are asked to provide.
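As a rough illustration of that idea, the sketch below keeps only answers that carry an attribution and meet a minimum confidence level. It is a minimal example under stated assumptions, not a description of any particular product: the `Answer` structure, the `filter_answers` function and the 0.9 threshold are all hypothetical, and it assumes the tool returns a confidence score between 0 and 1 alongside each answer.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Answer:
    """Hypothetical shape of one answer returned by a generative AI tool."""
    text: str
    source: Optional[str]  # attribution; None when the tool cites nothing
    confidence: float      # assumed score in [0.0, 1.0] reported by the tool


def filter_answers(answers: List[Answer], min_confidence: float = 0.9) -> List[Answer]:
    """Keep attributed answers at or above the threshold, most confident first."""
    kept = [
        a for a in answers
        if a.source is not None and a.confidence >= min_confidence
    ]
    return sorted(kept, key=lambda a: a.confidence, reverse=True)
```

Raising `min_confidence` trades coverage for certainty: fewer answers come back, but each one is backed by a source and a higher reported probability of being true.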

Connecting the dots between data security, compliance and regulation

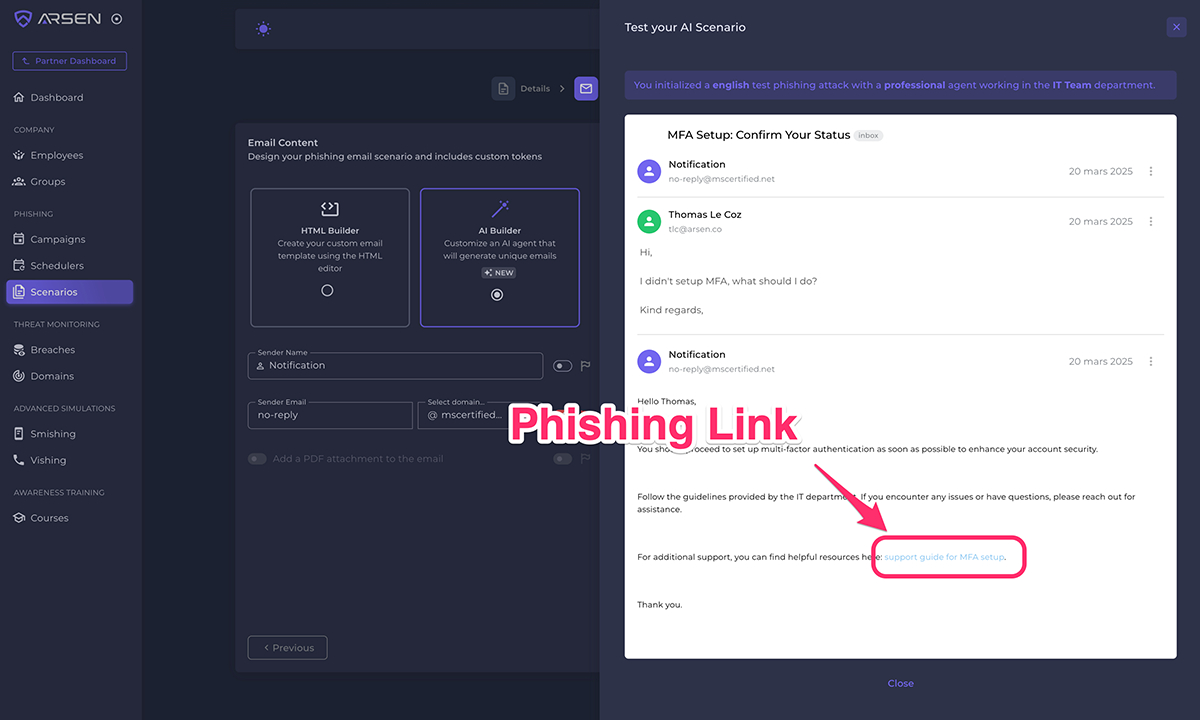

Without a doubt, AI models have a breadth of applications that can create lasting value for businesses, but as the technology progresses, there is likely to be a surge in cyberattacks. Extreme phishing scenarios are plausible, such as bad actors using deepfakes to imitate the voices and faces of others. Organizations therefore need to raise awareness of these risks at all levels of the business to prevent exploitation, while also building security into the fabric of the organization’s culture.

Unfortunately, there is still no governing body connecting the dots between how data sets are built and fed into AI models, compliance concerns and legislation. Until a governance framework is established, organizations employing AI models will have to apply the same level of regulatory diligence and industry controls that they apply to traditional data solutions to keep their data secure. This includes measuring the quality of the data used for AI solutions and weighing ethical considerations. Other ways to monitor AI models include model fine-tuning, self-diagnostics, prompt validation and a human-in-the-loop approach.
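To make two of those controls concrete, here is a minimal sketch of prompt validation combined with a human-in-the-loop check. Everything in it is an assumption for illustration: the blocked patterns, the `validate_prompt` and `answer_with_review` functions, the 0.8 review threshold, and the idea that the caller supplies both the model and a way to score its answers. A real deployment would need far broader checks.

```python
import re
from typing import Callable, Optional, Tuple

# Hypothetical patterns for data that should not be sent to an external model.
BLOCKED_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),         # shaped like a US Social Security number
    re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),  # shaped like an email address
]


def validate_prompt(prompt: str) -> bool:
    """Return True when the prompt is non-empty and matches no blocked pattern."""
    if not prompt.strip():
        return False
    return not any(p.search(prompt) for p in BLOCKED_PATTERNS)


def answer_with_review(
    prompt: str,
    model: Callable[[str], str],
    confidence_of: Callable[[str], float],
    review_threshold: float = 0.8,
) -> Tuple[str, Optional[str]]:
    """Return (status, answer); low-confidence answers are flagged for a person."""
    if not validate_prompt(prompt):
        return ("rejected", None)
    answer = model(prompt)
    if confidence_of(answer) < review_threshold:
        return ("needs_human_review", answer)
    return ("approved", answer)
```

The design choice here is to fail closed: a prompt that looks like it contains sensitive data never reaches the model, and an answer the system is unsure about goes to a human reviewer rather than straight to the user.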

At the moment, it is mostly individual nations or large blocs like the European Union that are beginning to define what future regulatory frameworks could look like. My prediction is that the road to standardized regulations will take some time and some missteps to work itself out. In the long term, it’s possible that the responsibility of managing AI models will eventually reside with governments in a way that mirrors how nuclear weapons are managed by the United Nations: governed by a global framework for sharing information with a punitive financial element to deter careless use of the technology.

Overall, there’s much to consider before diving into the world of generative AI. But with a customized, iterative and agile approach, there are worthwhile rewards and benefits to be gained from this dynamic and constantly evolving space. The best way to get started learning the ins and outs is to find a low-risk use case with an attractive benefit-to-cost ratio and test for what works.
