Over a thousand people, including professors and AI developers, have co-signed an open letter to all artificial intelligence labs, calling on them to pause the development and training of AI systems more powerful than GPT-4 for at least six months.

The signatories include prominent figures in AI development and technology: Elon Musk, co-founder of OpenAI; Yoshua Bengio, a prominent AI professor and founder of Mila; Steve Wozniak, co-founder of Apple; Emad Mostaque, CEO of Stability AI; Stuart Russell, a pioneer in AI research; and Gary Marcus, founder of Geometric Intelligence.

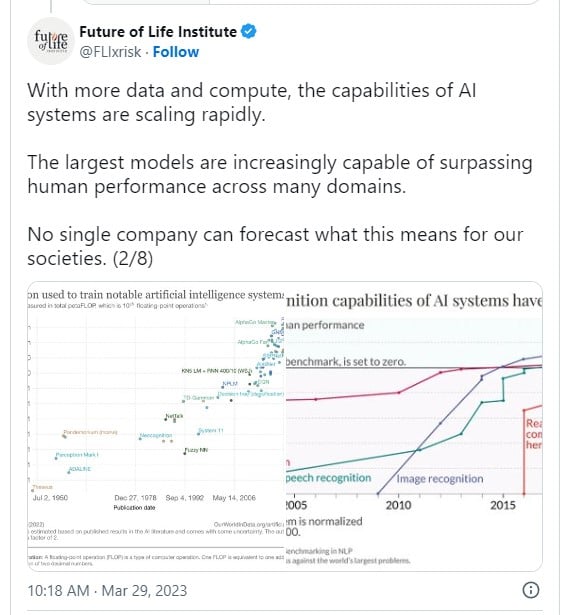

The open letter, published by the Future of Life Institute, cites potential risks to society and humanity that arise from the rapid development of advanced AI systems without shared safety protocols.

The letter’s central concern is that these risks have yet to be fully appreciated or accounted for by any comprehensive management framework, so the technology’s positive effects are not guaranteed.

“Advanced AI could represent a profound change in the history of life on Earth and should be planned for and managed with commensurate care and resources,” reads the letter.

“Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.”

The letter also warns that modern AI systems are now directly competing with humans at general tasks, which raises several existential and ethical questions that humanity still needs to consider, debate, and decide upon.

The questions it highlights concern the flow of AI-generated information, uncontrolled job automation, the development of systems that outsmart humans and threaten to make them obsolete, and the very control of civilization.

The co-signing experts believe we have reached a point where more advanced AI systems should be trained only under strict oversight, and only after building confidence that the risks arising from their deployment are manageable.

“Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4,” advises the open letter.

“This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.”

During this pause, AI development teams would have the chance to come together and agree on shared safety protocols, which would then serve as the basis for compliance audits performed by independent external experts.

Furthermore, policymakers should implement protective measures, such as a watermarking system that effectively differentiates between authentic and fabricated content, rules that assign liability for harm caused by AI-generated materials, and publicly funded research into the risks of AI.

The letter does not advocate halting AI development altogether; instead, it underscores the hazards associated with the prevailing competition among AI designers vying to secure a share of the rapidly expanding market.

“Humanity can enjoy a flourishing future with AI. Having succeeded in creating powerful AI systems, we can now enjoy an ‘AI summer’ in which we reap the rewards, engineer these systems for the clear benefit of all, and give society a chance to adapt,” concludes the text.