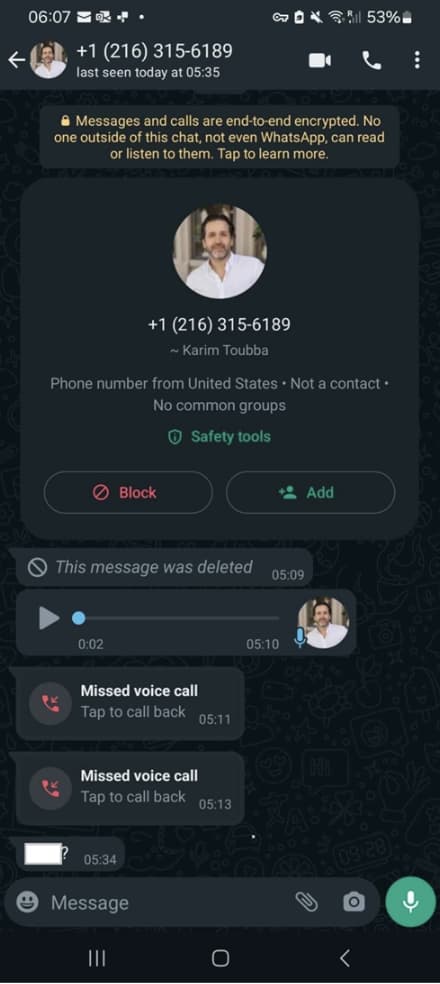

Password management giant LastPass narrowly avoided a potential security breach after a company employee was targeted by a deepfake scam. The incident, detailed in a blog post by LastPass, involved an attacker using an audio deepfake of CEO Karim Toubba to contact the employee via WhatsApp.

Deepfake technology, which can manipulate audio and video to create realistic forgeries, is increasingly being used by cybercriminals in elaborate social engineering schemes. In this instance, the scammer used a voice-altering program to mimic Toubba’s voice, likely aiming to create a sense of urgency or trust with the employee.

However, not everyone is as fortunate as LastPass. In February 2024, an employee at the Hong Kong branch of a multinational company was tricked into paying out HK$200 million (approximately US$25.6 million) after scammers used deepfake technology to impersonate the company’s CFO.

In another incident, reported in August 2022, scammers used an AI-generated deepfake hologram of Binance’s chief communications officer, Patrick Hillmann, to deceive users into participating in online meetings and to target Binance clients’ crypto projects.

As for LastPass, the company commended the employee’s vigilance in recognizing the red flags of the situation. The unusual use of WhatsApp, a platform not commonly used for official communication within the company, coupled with the impersonation attempt, prompted the employee to report the incident to LastPass security. The company confirmed that the attack did not impact its overall security posture.

Toby Lewis, Global Head of Threat Analysis at Darktrace, commented on the issue, highlighting the risks of generative AI: “The prevalence of AI today represents new and additional risks, but arguably, the more considerable risk is the use of generative AI to produce deepfake audio, imagery, and video, which can be released at scale to manipulate and influence the electorate’s thinking.”

“While the use of AI for deepfake generation is now very real, the risk of image and media manipulation is not new, with ‘photoshop’ existing as a verb since the 1990s,” Lewis explained. “The challenge now is that AI can be used to lower the skill barrier to entry and speed up production to a higher quality. Defence against AI deepfakes is largely about maintaining a cynical view of material you see, especially online, or spread via social media,” he advised.

Nevertheless, this attempted scam shows how sophisticated cybercriminals have become in their attacks. LastPass, for its part, emphasized the importance of employee awareness training in mitigating such attacks, since social engineering tactics often rely on creating a sense of urgency or panic that pressures victims into making rushed decisions.

The incident also highlights the potential dangers deepfakes pose in the corporate domain. As the technology continues to develop, creating ever-more convincing fakes, companies will need to invest in robust security protocols and employee training to stay ahead of these sophisticated scams.

While deepfake scams targeting businesses are still relatively uncommon, the LastPass incident underscores the growing threat. The company’s decision to publicize the attempt serves as a valuable cautionary tale for other organizations, urging them to heighten awareness and implement preventative measures.
