KEY FINDINGS

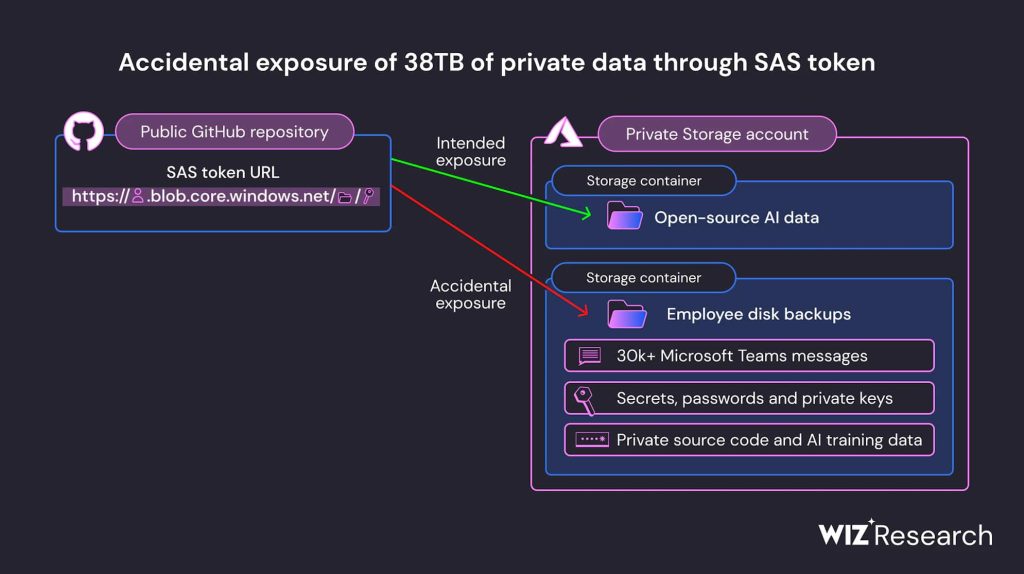

- Microsoft AI researchers accidentally exposed 38 terabytes of private data, including a disk backup of two employees’ workstations, while publishing a bucket of open-source training data on GitHub.

- The backup includes secrets, private keys, passwords, and over 30,000 internal Microsoft Teams messages.

- The data was exposed due to a misconfigured Shared Access Signature (SAS) token.

- SAS tokens can be a security risk if not used properly, as they can grant high levels of access to Azure Storage data.

- Organizations should carefully consider their security needs before using SAS tokens.

As part of its ongoing work on accidental exposure of cloud-hosted data, the Wiz Research Team scanned the internet for misconfigured storage containers. In the process, the team found a GitHub repository under the Microsoft organization named robust-models-transfer. The repository belongs to Microsoft’s AI research division and provides open-source code and AI models for image recognition.

On closer inspection, the Wiz researchers found that Microsoft AI researchers had accidentally exposed 38 terabytes of private data, including a disk backup of two employees’ workstations, while publishing a bucket of open-source training data on GitHub. The backup included secrets, private keys, passwords, and over 30,000 internal Microsoft Teams messages.

The repository directed users to download the models from an Azure Storage URL containing a SAS token. However, that URL allowed access to more than just open-source models: it was configured to grant permissions on the entire storage account, exposing additional private data by mistake.

The Wiz scan showed that this account contained 38 terabytes of additional data, including Microsoft employees’ personal computer backups. The backups contained sensitive personal data, including passwords to Microsoft services, secret keys, and over 30,000 internal Microsoft Teams messages from 359 Microsoft employees.

In the hands of threat actors, this data could have been devastating for the technology giant, particularly because Microsoft recently revealed that attackers are eager to exploit Microsoft Teams to facilitate ransomware attacks.

According to Wiz’s blog post, in addition to the overly permissive access scope, the token was also misconfigured to allow “full control” permissions instead of read-only. This means that not only could an attacker view all the files in the storage account, but they could delete and overwrite existing files as well.
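The two misconfigurations described above (account-wide scope and more than read-only permissions) are visible in the query string of a SAS URL itself. The following is a minimal sketch, not Wiz’s or Microsoft’s tooling: the function name `audit_sas_url`, the seven-day lifetime threshold, the set of permission letters treated as risky, and the example URL are all assumptions made for illustration. It relies only on the documented SAS query fields `ss` and `srt` (present on account-level tokens), `sp` (permissions), and `se` (expiry).

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlparse, parse_qs

# Illustrative, non-exhaustive set of SAS permission letters that allow
# modifying or deleting data (write, delete, create, add, update, and
# version/permanent delete). This selection is an assumption.
RISKY_PERMISSIONS = set("wdcauxy")


def audit_sas_url(url, now=None, max_lifetime=timedelta(days=7)):
    """Return a list of human-readable findings for one SAS URL."""
    now = now or datetime.now(timezone.utc)
    params = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    findings = []

    # Account SAS tokens carry 'ss' (services) and 'srt' (resource types).
    if "ss" in params or "srt" in params:
        findings.append("account-level SAS: scope is the whole storage account")

    risky = sorted(set(params.get("sp", "")) & RISKY_PERMISSIONS)
    if risky:
        findings.append("grants more than read/list: " + "".join(risky))

    expiry = params.get("se")
    if expiry is None:
        # The expiry may instead come from a stored access policy.
        findings.append("no expiry in the token itself")
    else:
        try:
            # Older Python versions do not accept a trailing 'Z'.
            parsed = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
            if parsed.tzinfo is None:
                parsed = parsed.replace(tzinfo=timezone.utc)
            if parsed - now > max_lifetime:
                findings.append("expiry is far in the future: " + expiry)
        except ValueError:
            findings.append("could not parse expiry: " + expiry)

    return findings


if __name__ == "__main__":
    # Hypothetical URL; the account name, expiry, and signature are made up.
    example = (
        "https://example.blob.core.windows.net/models"
        "?sv=2020-08-04&ss=b&srt=sco&sp=rwdlac"
        "&se=2099-01-01T00:00:00Z&sig=REDACTED"
    )
    for finding in audit_sas_url(example):
        print(finding)
```

A check like this only inspects tokens that have already been found in code or documentation; it cannot list the tokens that exist for an account, so it complements rather than replaces access policies and short expiry times.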

This is particularly interesting considering the repository’s original purpose: providing AI models for use in training code. The repository instructs users to download a model data file from the SAS link and feed it into a script.

The file’s format was ckpt, a format produced by the TensorFlow library. It is serialized with Python’s pickle module, which is prone to arbitrary code execution by design. This means that an attacker could have injected malicious code into all the AI models in this storage account, and every user who trusted Microsoft’s GitHub repository and loaded one of those models would have been infected by it.
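The pickle risk mentioned above can be shown with a harmless, generic example. This is a sketch of how pickle behaves in general, not a reconstruction of the files in the exposed storage account: the `Payload` class and the `restricted_loads` helper are hypothetical names, and the payload only evaluates `6 * 7`. The allow-list loader follows the `find_class` override pattern described in the Python `pickle` documentation.

```python
import builtins
import io
import pickle


class Payload:
    """Stand-in for an attacker-controlled object hidden in a model file."""

    def __reduce__(self):
        # On load, pickle calls the returned callable with these arguments.
        # eval("6 * 7") is harmless; an attacker could name any importable
        # callable instead.
        return (eval, ("6 * 7",))


class RestrictedUnpickler(pickle.Unpickler):
    """Loader that refuses every global not on a small allow-list."""

    SAFE_BUILTINS = {"list", "dict", "set", "frozenset", "tuple"}

    def find_class(self, module, name):
        if module == "builtins" and name in self.SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data):
    """Hypothetical helper: unpickle bytes through the allow-list loader."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


blob = pickle.dumps(Payload())

# Plain loading runs the embedded call as a side effect of deserializing.
result = pickle.loads(blob)
print("plain pickle.loads returned:", result)

# The restricted loader rejects the reference to builtins.eval.
try:
    restricted_loads(blob)
except pickle.UnpicklingError as err:
    print("restricted loader refused:", err)
```

An allow-list narrows what a pickle can invoke but is not a full defence; where possible, model files from storage that others can write to are better loaded from formats that hold only data.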

In response to the news, Andrew Whaley, Senior Technical Director at the Norwegian cybersecurity firm Promon, said: “Microsoft may be one of the frontrunners in the AI race, but it’s hard to believe this is the case when it comes to cybersecurity. The tech titan has taken great technological strides in recent years; however, this incident serves as a reminder that even the best-intentioned projects can inadvertently expose sensitive information.”

“Shared Access Signatures (SAS) are a significant cybersecurity risk if not managed with the utmost care. Although they’re undeniably a valuable tool for collaboration and sharing data, they can also become a double-edged sword when misconfigured or mishandled. When overly permissive SAS tokens are issued or when they are exposed unintentionally, it’s like willingly handing over the keys to your front door to a burglar,” Whaley warned.

He emphasised that “Microsoft may well have been able to prevent this breach if they implemented stricter access controls, regularly audited and revoked unused tokens, and thoroughly educated their employees on the importance of safeguarding these credentials. Additionally, continuous monitoring and automated tools to detect overly permissive SAS tokens could have also averted this blunder.”

This is not the first instance of Microsoft exposing such sensitive data. In July 2020, the Microsoft Bing server inadvertently exposed user search queries and location data, including distressing search terms related to murder and child abuse content.

Wiz researchers informed Microsoft about the data leak on June 22, 2023, and the tech giant secured it by August 16, 2023. The researchers published their report earlier today, once they were assured that all security aspects of the exposed servers had been addressed.
