AI is expected to be “the most significant driver of change in cybersecurity” this year, according to the World Economic Forum’s annual cybersecurity outlook.

That was the view of 94% of the more than 800 cybersecurity leaders surveyed by the organization for its Global Cybersecurity Outlook 2026 report published this week.

The report, a collaboration with Accenture, also examined other cybersecurity concerns such as geopolitical risk and preparedness, but AI security issues are what’s most on the minds of CEOs, CISOs and other top security leaders.

One interesting data point in the report is a divergence between CEOs and CISOs. Cyber-enabled fraud is now the top concern of CEOs, who have moved their focus from ransomware to “emerging risks such as cyber-enabled fraud and AI vulnerabilities.”

CISOs, on the other hand, are more concerned about ransomware and supply chain resilience, more in line with the forum’s 2025 report. “This reflects how cybersecurity priorities diverge between the boardroom and the front line,” the report said.

Top AI Security Concerns

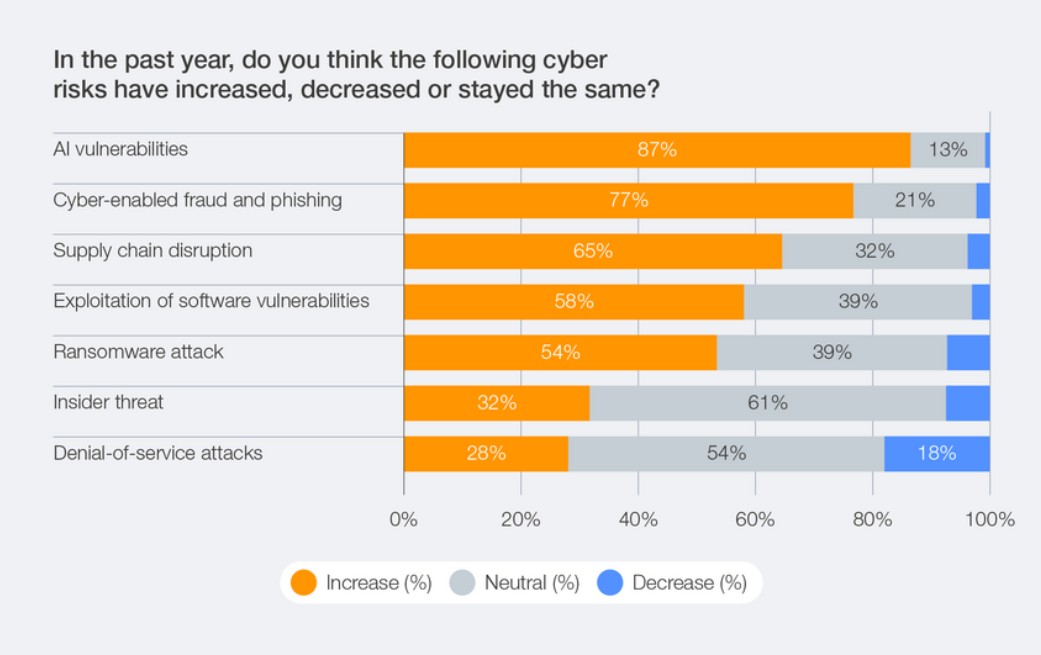

C-level leaders are also concerned about AI-related vulnerabilities, which were identified as the fastest-growing cyber risk by 87% of respondents (chart below). Cyber-enabled fraud and phishing, supply chain disruption, exploitation of software vulnerabilities and ransomware attacks were also cited as growing risks by more than half of survey respondents, while insider threats and denial of service (DoS) attacks were seen as growing concerns by about 30% of respondents.

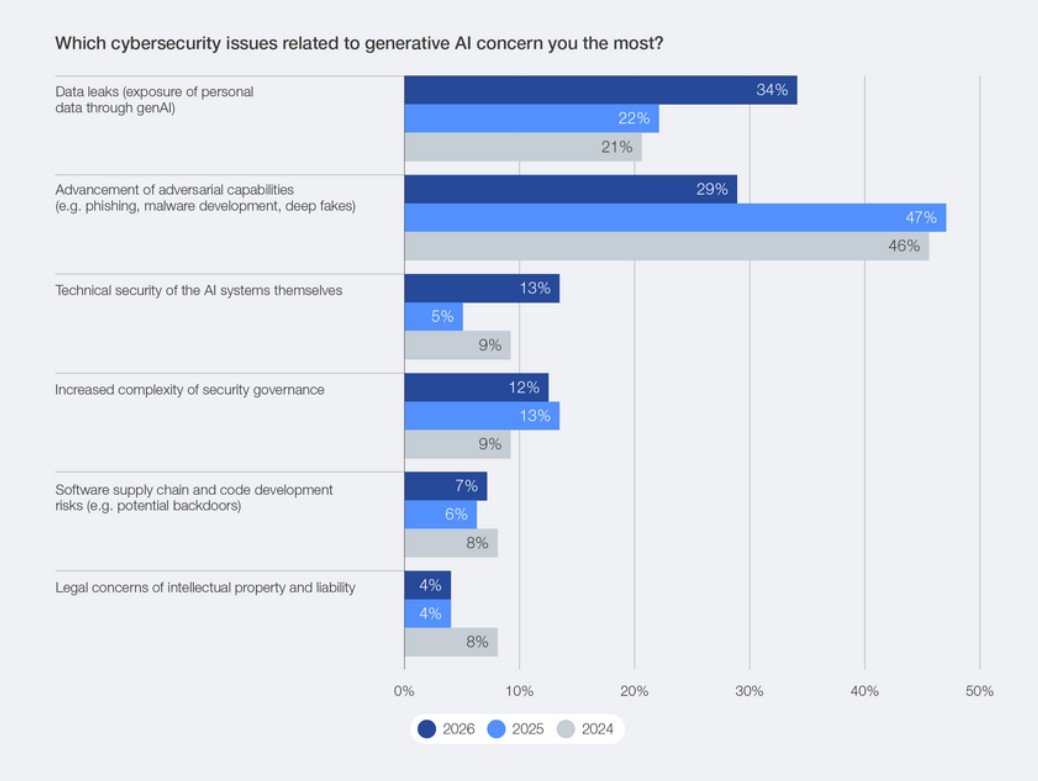

The top generative AI (GenAI) concerns include data leaks exposing personal data, advancement of adversarial capabilities (phishing, malware development and deepfakes, for example), the technical security of the AI systems themselves, and increasingly complex security governance (chart below).

Concern About AI Security Leads to Action

The increasing focus on AI security is leading to action within organizations, as the percentage of respondents assessing the security of AI tools grew from 37% in 2025 to 64% in 2026. That is helping to close “a significant gap between the widespread recognition of AI-driven risks and the rapid adoption of AI technologies without adequate safeguards,” the report said, as more organizations are introducing structured processes and governance models to more securely manage AI.

About 40% of organizations conduct periodic reviews of their AI tools before deploying them, while 24% do a one-time assessment, and 36% report no assessment or no knowledge of one. The report called that “a clear sign of progress towards continuous assurance,” but noted that “roughly one-third still lack any process to validate AI security before deployment, leaving systemic exposures even as the race to adopt AI in cyber defences accelerates.”

The forum report recommended protecting data used in the training and customization of AI models from breaches and unauthorized access, developing AI systems with security as a core principle, incorporating regular updates and patches, and deploying “robust authentication and encryption protocols to ensure the protection of customer interactions and data.”

AI Adoption in Security Operations

The report noted the impact of AI on defensive cybersecurity tools and operations.

“AI is fundamentally transforming security operations – accelerating detection, triage and response while automating labour-intensive tasks such as log analysis and compliance reporting,” the report said. “AI’s ability to process vast datasets and identify patterns at speed positions it as a competitive advantage for organizations seeking to stay ahead of increasingly sophisticated cyberthreats.”

The survey found that 77% of organizations have adopted AI for cybersecurity purposes, primarily to enhance phishing detection (52%), intrusion and anomaly response (46%), and user-behavior analytics (40%).

Still, the report identified a lack of knowledge and skills in deploying AI for cybersecurity, the need for human oversight, and uncertainty about risk as the biggest obstacles to AI adoption in cybersecurity. “These findings indicate that trust is still a barrier to widespread AI adoption,” the report said.

Human oversight remains an important part of security operations even among those organizations that have incorporated AI into their processes. “While AI excels at automating repetitive, high-volume tasks, its current limitations in contextual judgement and strategic decision-making remain clear,” the report said. “Over-reliance on ungoverned automation risks creating blind spots that adversaries may exploit.”

Adoption of AI cybersecurity tools varies by industry, the report found. The energy sector prioritizes intrusion and anomaly detection, according to 69% of respondents who have implemented AI for cybersecurity. The materials and infrastructure sector emphasizes phishing protection (80%), while the manufacturing, supply chain and transportation sector is focused on automated security operations (59%).

Geopolitical Cyber Threats

Geopolitics was the top factor influencing overall cyber risk mitigation strategies, with 64% of organizations accounting for geopolitically motivated cyberattacks such as disruption of critical infrastructure or espionage.

The report also noted that “confidence in national cyber preparedness continues to erode” in the face of geopolitical threats, with 31% of survey respondents “reporting low confidence in their nation’s ability to respond to major cyber incidents,” up from 26% in the 2025 report. Confidence varies sharply by region: 84% of respondents from the Middle East and North Africa express confidence in their country’s ability to protect critical infrastructure, compared with 13% of respondents in Latin America and the Caribbean.

“Recent incidents affecting key infrastructure, such as airports and hydroelectric facilities, continue to call attention to these concerns,” the report said. “Despite its central role in safeguarding critical infrastructure, the public sector reports markedly lower confidence in national preparedness.”

And 23% of public-sector organizations said they lack sufficient cyber-resilience capabilities, the report found.