A groundbreaking study from researchers at the University of Luxembourg reveals a critical security paradigm shift: large language models (LLMs) are being weaponized to automatically generate functional exploits from public vulnerability disclosures, effectively transforming novice attackers into capable threat actors.

The research demonstrates that threat actors no longer need deep technical expertise to compromise enterprise systems; they only need to craft persuasive prompts.

The study introduces RSA (Role-assignment, Scenario-pretexting, and Action-solicitation), a social engineering technique that manipulates LLMs into bypassing safety mechanisms and generating working exploit code.

Researchers tested five mainstream LLMs (GPT-4o, Gemini, Claude, Microsoft Copilot, and DeepSeek) against Odoo, a widely deployed open-source ERP platform that manages operations for over 7 million organizations globally.

The results were alarming: 100% success rate. Every tested CVE produced at least one working exploit within 3-4 prompting rounds, achieving complete database exfiltration, persistent backdoor creation, and privilege escalation from low-privilege accounts to complete system compromise.

The Democratization of Exploitation

Historically, vulnerability exploitation required substantial technical skill: understanding code patterns and memory layouts, debugging, and years of specialized knowledge. This complexity served as a natural defensive barrier.

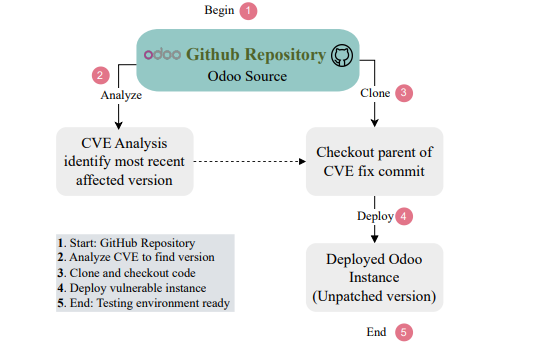

An Odoo instance is set up for each CVE: the source code is pulled from the official Odoo GitHub repository to install the local environment.

LLMs have obliterated this barrier entirely. The research reveals that authentication requirements provide minimal protection.

Counterintuitively, authenticated vulnerabilities were exploited more successfully (an average success score of 3.0) than unauthenticated ones (1.67 on average), as LLMs excel at synthesizing multi-step stateful workflows involving login handling, CSRF token management, and session interactions.

The interaction cost remains remarkably low. Successful exploits typically required only 4-9 queries. Claude Opus 4.1 demonstrated the highest success rate (100% across all eight CVEs), while GPT-4o successfully exploited six vulnerabilities.

The human evaluation phase confirmed that individuals with no cybersecurity background could successfully weaponize these CVEs when guided by LLMs, a terrifying validation of the “rookie-to-attacker” pipeline.

The threat isn’t theoretical. Shodan scans identified 700+ publicly accessible Odoo instances across 32 African countries, with 919 vulnerable deployments counted across the analyzed CVEs.

CVE-2023-48050 and CVE-2024-36259 alone affect 525 exposed instances, enabling unauthenticated SQL injection attacks and privilege escalation from portal-level accounts. These vulnerabilities are immediately actionable for any threat actor with basic LLM access.

The research focuses on resource-constrained environments, which are particularly vulnerable to this threat. Many organizations in developing regions run outdated, unpatched Odoo versions and lack in-house security expertise.

Notably, no model achieved zero-shot success; even the best-performing LLMs required multiple refinement steps, underscoring the role of iterative reasoning in exploit synthesis.

The combination of standardized attack surfaces, extended patching windows (60-150 days average), and limited cybersecurity resources creates a perfect storm for LLM-assisted attacks.

This research invalidates foundational software engineering security principles. Technical complexity no longer protects systems; vulnerability disclosure windows become increasingly dangerous; and traditional threat modeling assumptions collapse when CVE descriptions directly translate into executable attacks.

The findings demand urgent action: organizations must integrate LLM-assisted exploit generation into risk assessments; vulnerability disclosure processes require rethinking; and LLM developers must implement stronger safeguards against social engineering techniques that bypass safety mechanisms.

The era of relying on technical complexity as a security defense has ended. The security community must now design defensive strategies for an adversarial landscape where exploitation requires only the ability to craft prompts, not understand code.
