A critical vulnerability in the widely adopted Model Context Protocol (MCP), an open standard for integrating generative AI (GenAI) tools with external systems, has exposed organizations to risks of data theft, ransomware, and unauthorized access.

Security researchers demonstrated two proof-of-concept (PoC) attacks exploiting the flaw, raising alarms about emerging GenAI security challenges.

What is MCP?

Introduced by Anthropic in late 2024, MCP acts as a “USB-C port for GenAI,” enabling tools like Claude 3.7 Sonnet or Cursor AI to interact with databases, APIs, and local systems.

.png

)

While empowering businesses to automate workflows, its permissions framework lacks robust safeguards, allowing attackers to hijack integrations.

Attack Scenarios Demonstrated

1. Malicious Package Compromises Local Systems

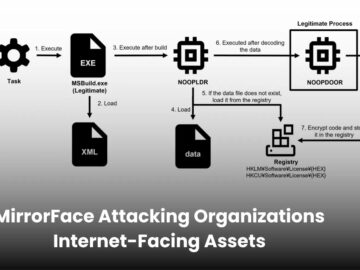

In the first PoC, attackers disguised a harmful MCP package as a legitimate tool for file management.

When a user integrated it with Cursor AI, the package executed unauthorized commands, demonstrated by abruptly launching a calculator app. In real-world attacks, this could enable malware installation or data exfiltration.

2. Document-Prompt Injection Hijacks Servers

The second PoC involved a manipulated document uploaded to Claude 3.7 Sonnet, which triggered a hidden prompt.

This exploited an MCP server with file-access permissions to encrypt the victim’s files, mimicking ransomware. Attackers could similarly steal sensitive data or sabotage critical systems.

Researchers identified two core issues:

- Overprivileged Integrations: MCP servers often operate with excessive permissions (e.g., unrestricted file access).

- Lack of Guardrails: No built-in mechanisms to validate MCP packages or detect malicious prompts in documents.

This combination allows attackers to weaponize seemingly benign files or tools, bypassing traditional security checks.

The flaw amplifies supply chain risks, as compromised MCP packages could infiltrate enterprise networks via third-party developers.

Industries handling sensitive data (e.g., healthcare, finance) face heightened compliance threats, with potential violations of GDPR or HIPAA if attackers exfiltrate information.

To reduce risks, organizations should:

- Restrict MCP Permissions: Apply least-privilege principles to limit file/system access.

- Scan Uploaded Files: Deploy AI-specific tools to detect malicious prompts in documents.

- Audit Third-Party Packages: Vet MCP integrations for vulnerabilities before deployment.

- Monitor Anomalies: Track unusual activity in MCP-connected systems (e.g., unexpected file encryption).

Anthropic has acknowledged the findings, pledging to introduce granular permission controls and developer security guidelines in Q3 2025.

Meanwhile, experts urge businesses to treat MCP integrations with the same caution as unverified software—a reminder that GenAI’s transformative potential comes with evolving risks.

Find this News Interesting! Follow us on Google News, LinkedIn, & X to Get Instant Updates!