A cybersecurity research team has developed a novel defensive technique that renders stolen artificial intelligence databases virtually useless to attackers by deliberately poisoning proprietary knowledge graphs with plausible yet false information.

The research, conducted by scientists from the Institute of Information Engineering at the Chinese Academy of Sciences, National University of Singapore, and Nanyang Technological University, introduces AURA (Active Utility Reduction via Adulteration), a framework designed to protect the high-value knowledge graphs that power Graph Retrieval-Augmented Generation (GraphRAG) systems used by major pharmaceutical and technology companies.

Proprietary knowledge graphs represent significant intellectual property investments, with some costing upward of $120 million to construct.

Cybercriminals and malicious insiders increasingly target these databases. Notable incidents include a Waymo engineer who stole over 14,000 proprietary files and the 2020 European Medicines Agency hack that compromised Pfizer-BioNTech’s confidential vaccine data.

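Traditional security measures such as watermarking and encryption prove ineffective in private-use scenarios, where attackers run stolen databases in isolated environments. A watermark, for example, only helps prove ownership if the owner can inspect or query the pirated system, which never happens when that system stays behind closed doors.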

Encryption also introduces prohibitive computational overhead and latency issues that make real-time AI systems impractical.

How AURA Works

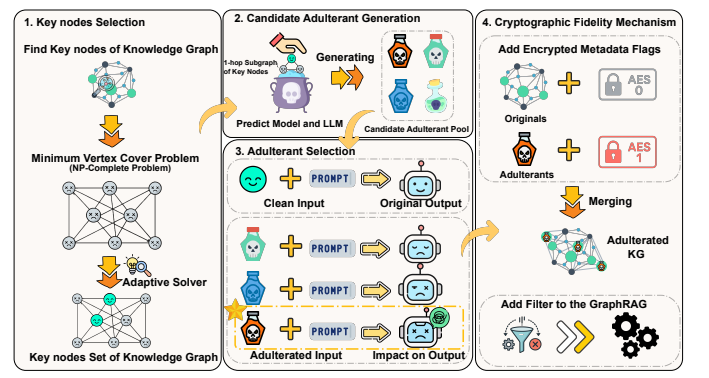

The AURA framework employs a sophisticated four-stage approach to corrupt stolen data while preserving functionality for authorized users.

First, it identifies critical nodes within the knowledge graph using advanced algorithms. Next, it generates structurally plausible but factually incorrect information by combining link prediction models with large language models to create fake data entries that blend seamlessly with authentic details.
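As a rough illustration of what "structurally plausible but factually incorrect" can mean, the sketch below fabricates a triple by swapping a true tail entity for another entity of the same type. It is a minimal stand-in, assuming triples are `(head, relation, tail)` string tuples and entity types are known. The frequency count substitutes for AURA’s link prediction model, no LLM is involved, and `generate_adulterant` is a hypothetical name.

```python
from collections import Counter


def generate_adulterant(triples, entity_types, head, relation):
    """Return a fake (head, relation, tail) triple, or None if none can be made.

    Hypothetical stand-in: plausibility is approximated by how often an entity
    appears as the tail of `relation` anywhere in the graph, not by a trained
    link prediction model.
    """
    true_tails = {t for h, r, t in triples if h == head and r == relation}
    if not true_tails:
        return None
    # Keep the fake tail type-consistent with a real one so it looks structurally valid.
    target_type = entity_types[min(true_tails)]
    popularity = Counter(t for _, r, t in triples if r == relation)
    candidates = [
        e for e, ty in entity_types.items()
        if ty == target_type and e not in true_tails and e != head
    ]
    if not candidates:
        return None
    # Prefer the most "typical" tail for this relation; break ties by name.
    best = max(candidates, key=lambda e: (popularity[e], e))
    return (head, relation, best)


triples = [
    ("aspirin", "treats", "headache"),
    ("metformin", "treats", "diabetes"),
    ("insulin", "treats", "diabetes"),
]
entity_types = {
    "aspirin": "drug", "metformin": "drug", "insulin": "drug",
    "headache": "condition", "diabetes": "condition", "asthma": "condition",
}
print(generate_adulterant(triples, entity_types, "aspirin", "treats"))
# → ('aspirin', 'treats', 'diabetes')
```

The fake triple reuses an entity type and a relation pattern that already exist in the graph, which is what lets it pass a casual structural check; in AURA the LLM step would additionally make the surrounding details read naturally.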

The system then selects adulterants with maximum disruptive potential using a Semantic Deviation Score that measures how effectively each false entry corrupts AI-generated outputs.
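The article does not give the formula behind the Semantic Deviation Score, so the sketch below assumes one for illustration: 1 minus the cosine similarity between the correct answer and the answer the system produces when a given adulterant is retrieved, with bag-of-words counts standing in for real embeddings. The function names are hypothetical, and in practice the corrupted answers would come from running the LLM.

```python
import math
from collections import Counter


def bow_vector(text):
    """Bag-of-words term counts: a crude stand-in for a real sentence embedding."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_deviation(original_answer, corrupted_answer):
    """Assumed deviation score: 1 - cosine similarity of the two answers."""
    return 1.0 - cosine(bow_vector(original_answer), bow_vector(corrupted_answer))


def select_adulterants(original_answer, candidate_answers, k):
    """Keep the k candidates whose induced answers drift furthest from the truth.

    `candidate_answers` maps each candidate adulterant to the answer the
    system produced when that adulterant was retrieved.
    """
    ranked = sorted(
        candidate_answers,
        key=lambda c: semantic_deviation(original_answer, candidate_answers[c]),
        reverse=True,
    )
    return ranked[:k]


original = "aspirin treats headache"
answers = {
    "adulterant_1": "aspirin treats headache",
    "adulterant_2": "aspirin treats diabetes",
    "adulterant_3": "metformin lowers glucose",
}
print(select_adulterants(original, answers, 2))
# → ['adulterant_3', 'adulterant_2']
```

Ranking by the size of the induced error, rather than injecting every candidate, keeps the number of false entries small while concentrating the damage where it matters most.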

Finally, a cryptographic mechanism allows authorized users with a secret key to filter out all corrupted data, ensuring legitimate operations remain unaffected.
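The article does not describe AURA’s actual cryptographic construction. One way such a key-based filter could work is sketched below, purely as a hypothetical illustration: genuine triples carry an HMAC-SHA256 tag computed with the secret key, adulterants carry random tags of the same length, and only a key holder can tell which tags verify. The function names and the separator character are assumptions.

```python
import hashlib
import hmac
import secrets


def tag_triple(key, triple):
    """HMAC-SHA256 over the triple's fields, joined with a unit-separator
    character that is assumed never to appear inside entity names."""
    message = "\x1f".join(triple).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def protect(key, genuine, adulterants):
    """Store genuine triples with valid tags and adulterants with random tags.

    Random 32-byte hex tags have the same length and format as real HMAC tags,
    so without the key the two kinds of entries look alike.
    """
    stored = [(t, tag_triple(key, t)) for t in genuine]
    stored += [(t, secrets.token_hex(32)) for t in adulterants]
    secrets.SystemRandom().shuffle(stored)  # hide insertion order
    return stored


def authorized_view(key, stored):
    """Keep only entries whose tag verifies under the secret key."""
    return [t for t, tag in stored if hmac.compare_digest(tag, tag_triple(key, t))]


key = secrets.token_bytes(32)
genuine = [("aspirin", "treats", "headache")]
fake = [("aspirin", "treats", "diabetes")]
stored = protect(key, genuine, fake)
print(authorized_view(key, stored))
# → [('aspirin', 'treats', 'headache')]
```

An attacker who copies `stored` but not `key` sees two equally valid-looking entries, while the authorized pipeline pays only one HMAC computation per retrieved triple, which is consistent in spirit with the low overhead the researchers report.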

Testing across four benchmark datasets and multiple AI models including GPT-4o, Gemini-2.5-flash, Qwen-2.5-7B, and Llama2-7B demonstrated AURA’s remarkable effectiveness.

The framework degraded unauthorized system accuracy to just 4.4-5.3%, with harmfulness scores consistently exceeding 94%.

Authorized users, meanwhile, saw perfect fidelity: their results matched the original systems 100%, and the maximum increase in query latency stayed below 14%.

Stealth and Resilience

AURA’s adulterants proved highly stealthy, evading both structural and semantic anomaly detectors, with detection rates below 4.1%.

The researchers also note that, to keep the approach scalable, their adaptive strategy for solving the minimum vertex cover (MVC) problem switches to a heuristic on large graphs.
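A vertex cover is a set of nodes that touches every edge, so covering a knowledge graph means every relation has at least one selected endpoint. The sketch below shows what an adaptive MVC strategy of this kind could look like: an exact brute-force search on small graphs and the classic maximal-matching 2-approximation on large ones. The `exact_limit` threshold and the choice of heuristic are assumptions; the article does not say which heuristic AURA uses.

```python
from itertools import combinations


def is_vertex_cover(edges, nodes):
    """True if every edge has at least one endpoint in `nodes`."""
    return all(u in nodes or v in nodes for u, v in edges)


def exact_mvc(edges):
    """Smallest vertex cover by brute force; exponential, so small graphs only."""
    vertices = sorted({x for edge in edges for x in edge})
    for size in range(len(vertices) + 1):
        for subset in combinations(vertices, size):
            if is_vertex_cover(edges, set(subset)):
                return set(subset)
    return set(vertices)  # unreachable: the full vertex set always covers


def matching_mvc(edges):
    """Maximal-matching heuristic: cover size is at most twice the optimum."""
    cover = set()
    for u, v in edges:
        if u not in cover and v not in cover:
            cover.update((u, v))
    return cover


def select_critical_nodes(edges, exact_limit=15):
    """Exact search for small graphs, heuristic for large ones (limit is hypothetical)."""
    vertex_count = len({x for edge in edges for x in edge})
    if vertex_count <= exact_limit:
        return exact_mvc(edges)
    return matching_mvc(edges)


star = [("hub", "a"), ("hub", "b"), ("hub", "c")]
print(select_critical_nodes(star))
# → {'hub'}
```

The matching heuristic runs in a single pass over the edges, which is why this style of fallback scales to large graphs at the cost of a cover up to twice the optimal size.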

Even after sophisticated sanitization attacks, 80.2% of adulterants remained embedded in the knowledge graph, and unauthorized system accuracy stayed below 17.7%.

The framework’s effectiveness increased with query complexity. For multi-hop reasoning questions requiring traversal across multiple data relationships, harmfulness scores rose from 94.7% for simple queries to 95.8% for complex three-hop questions.
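One intuition for why multi-hop questions suffer more is that every extra hop is another chance to retrieve a false fact. The toy model below assumes each hop independently hits an adulterant with a fixed probability; this independence assumption is ours, not the researchers’, and it predicts a much steeper rise than the roughly one-point gain they report, since real retrieval is neither independent nor uniform.

```python
def path_corruption_probability(per_hop_rate, hops):
    """Chance that at least one hop of a reasoning path hits an adulterant.

    Toy model: each hop independently retrieves an adulterated fact with
    probability `per_hop_rate`. Real GraphRAG retrieval is not independent.
    """
    if not 0.0 <= per_hop_rate <= 1.0:
        raise ValueError("per_hop_rate must be between 0 and 1")
    if hops < 0:
        raise ValueError("hops must be non-negative")
    return 1.0 - (1.0 - per_hop_rate) ** hops


for hops in (1, 2, 3):
    print(hops, path_corruption_probability(0.5, hops))
# → 1 0.5
# → 2 0.75
# → 3 0.875
```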

This research introduces a paradigm shift from passive detection to active degradation of stolen intellectual property.

By making theft economically pointless rather than focusing solely on prevention, AURA offers organizations a practical defense mechanism against the growing threat of AI database theft in an era where knowledge graphs power critical business applications from drug discovery to manufacturing intelligence.
